
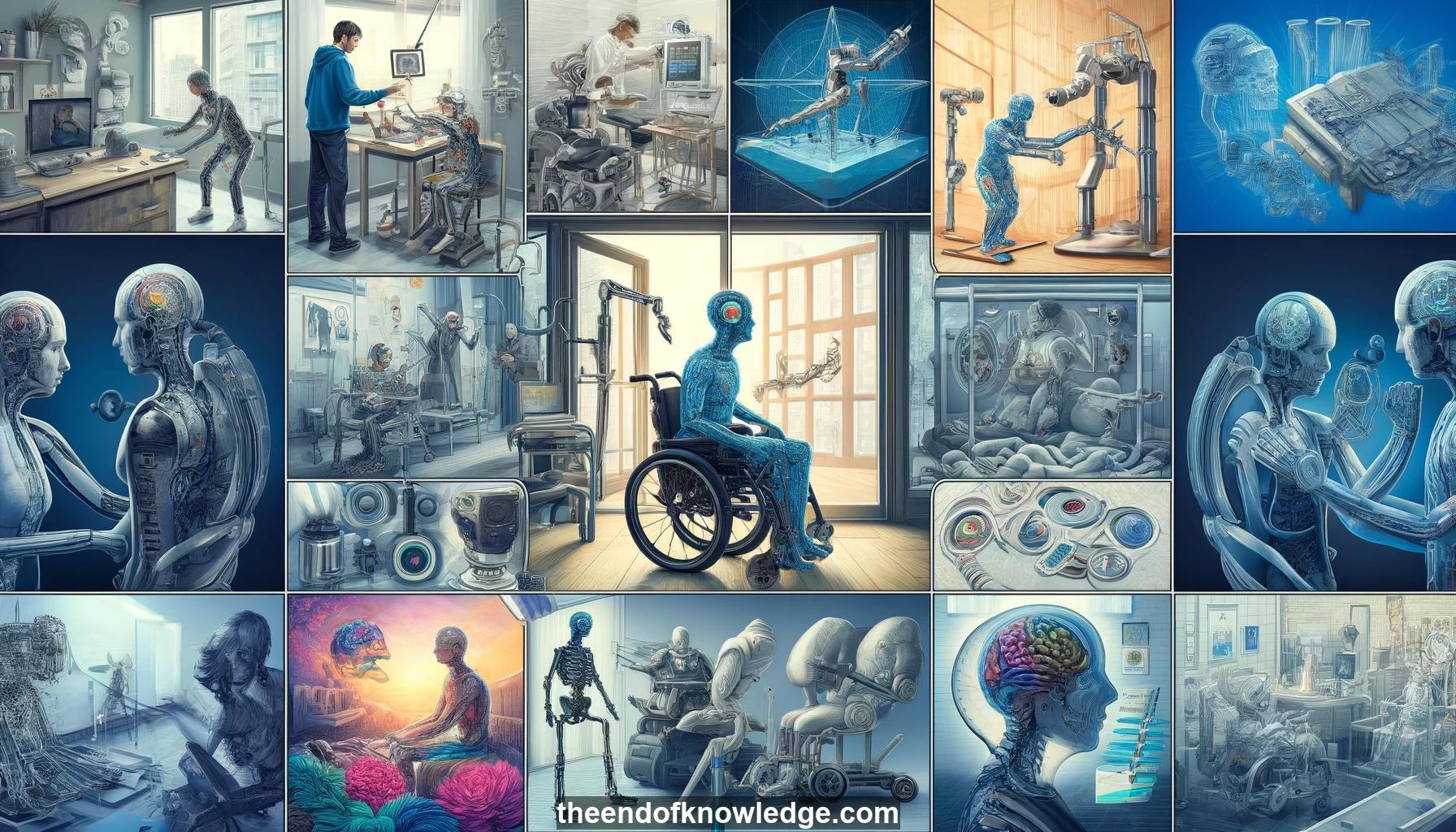

Concept Graph & Resume using Claude 3.5 Sonnet | Chat GPT4o | Llama 3:

Resume:

1.- Rehabilitation uses machines both to restore lost function and to bridge gaps left by injury or disease. Capturing control signals from the user is a key challenge.

2.- Higher-level amputations leave fewer residual muscles for EMG control of a prosthetic arm, yet the prosthesis must then control more joints and more complex movements.

3.- Power wheelchairs are commonly used assistive machines. Control interfaces range from proportional joysticks to non-proportional switches like sip-and-puff.

4.- Navigating a wheelchair through a doorway is challenging even for experts, especially with limited interfaces like a head array.

5.- Robotic arms require 6-D control of end-effector position and orientation. Accessible interfaces can't provide all six dimensions simultaneously, so users switch between control modes, each mapping the interface to a subset of them.

6.- People with the most limited interfaces who would benefit most from robotic arms have the hardest time operating them.

7.- Adding sensors and AI to turn assistive machines into assistive robots can help, but customizing the level of autonomy is critical.

8.- Shared control paradigms blend human and machine control in various ways. Studies compare autonomy to shared control and teleoperation.

9.- When comparing shared control paradigms, task metrics don't vary much but user preferences do. Providing options is important.

10.- Preferences change when the control interface changes, even with the same autonomy. Customization to the individual is needed.

11.- An exploratory study let users verbally customize a piecewise linear function blending their control with the robotic arm's autonomy.

12.- Compared with unassisted teleoperation, the customized shared control eliminated mode switches and gave users smooth 6D control.

13.- The customized paradigm eliminated performance differences between participants with spinal cord injuries and uninjured participants seen with other paradigms.

14.- User customizations reflected factors beyond standard robot metrics such as minimizing time and effort; users have useful insights worth capturing.

15.- Challenges in adapting autonomy to users include: type of feedback signal, information filtering by impairment/interface, trusting the human, and temporality.

16.- Providing demonstrations to robots is hard for people with motor impairments. Large-scale teleoperation studies aim to characterize usage.

17.- Wheelchair obstacle course, clinical assessment, and VR command following and trajectory following tasks were used as baselines.

18.- Command-following success differed between subject groups, but response time did not; the interface affected response time but not success.

19.- Among users with spinal cord injury, daily interface usage (a proxy for expertise) affected response time but not success, so adaptation over time is expected.

20.- In teleoperation, the task-level command a person intends does not always match the control signal actually issued, because of the interface layer in between.

21.- A graphical model represents transitions between intended and measured sip-and-puff commands for controlling a robot; collected data informs the distributions over intended inputs.

22.- In a pilot study, filtering or correcting commands that the model infers to be unintended enabled task success and reduced mode switches.

23.- Task-agnostic safety-aware shared control analyzes system safety and rejects or overrides unsafe human commands while maintaining human control.

24.- The shared control learns models of the joint human-robot system to inform its autonomous interventions when needed for safety.

25.- Safety-aware shared control enables task completion and improved demonstration quality for learning compared to unassisted human control.

26.- An autonomous controller learned from shared-control demonstrations can reproduce the task, mitigating covariate shift; one learned from unassisted demonstrations cannot.

27.- A body-machine interface maps high-dimensional residual body motions to low-dimensional control signals, traditionally with PCA, for various applications.

28.- Gradually engaging and fading robot autonomy aims to bootstrap human motor learning to achieve high-DoF control with limited body motions.

29.- A pilot will determine rates of co-adaptation between the human and autonomy to parameterize a full 20-participant, 20-session study.

30.- Key factors in devising suitably adaptive assistive shared autonomy are feedback, information filtering, trust, temporal adaptation, and co-adaptation.
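Item 5 above notes that a robotic arm needs 6-D control while accessible interfaces cannot supply all six dimensions at once, so users switch modes. The sketch below illustrates that idea under stated assumptions: a hypothetical 2-axis joystick and three hypothetical modes, each mapping the two axes onto two of the six velocity dimensions. The mode layout is purely illustrative and does not come from the talk.

```python
from dataclasses import dataclass

# Hypothetical mode layout (illustrative only): each mode maps the two
# joystick axes onto two of the six end-effector velocity dimensions.
DIMS = ("vx", "vy", "vz", "wx", "wy", "wz")
MODES = (("vx", "vy"), ("vz", "wz"), ("wx", "wy"))


@dataclass
class ModeSwitcher:
    mode: int = 0
    switches: int = 0  # number of mode switches, a common usability cost

    def switch(self) -> None:
        """Cycle to the next control mode (e.g. on a button press)."""
        self.mode = (self.mode + 1) % len(MODES)
        self.switches += 1

    def command(self, axis1: float, axis2: float) -> dict:
        """Map a 2-axis input to a 6-D velocity command; unmapped dims stay 0."""
        twist = dict.fromkeys(DIMS, 0.0)
        d1, d2 = MODES[self.mode]
        twist[d1], twist[d2] = axis1, axis2
        return twist
```

Under this hypothetical layout, any motion that needs all six dimensions forces at least two switches starting from mode 0. That is the overhead that item 12 reports the customized paradigm removing.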
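Items 8 and 11 above describe blending human and robot control, where each user verbally customized the blend as a piecewise linear function. The sketch below shows one common form, linear blending u = (1 - α)·u_human + α·u_robot. It assumes that α is a piecewise linear function of a hypothetical scalar, here the autonomy's confidence in its goal estimate. The breakpoints are illustrative and are not values from the study.

```python
from bisect import bisect_right
from typing import List, Sequence


def piecewise_linear(x: float, xs: Sequence[float], ys: Sequence[float]) -> float:
    """Interpolate through breakpoints (xs strictly increasing); clamp outside."""
    if x <= xs[0]:
        return ys[0]
    if x >= xs[-1]:
        return ys[-1]
    i = bisect_right(xs, x) - 1
    t = (x - xs[i]) / (xs[i + 1] - xs[i])
    return ys[i] + t * (ys[i + 1] - ys[i])


def blend(u_human: Sequence[float], u_robot: Sequence[float],
          confidence: float, xs: Sequence[float], ys: Sequence[float]) -> List[float]:
    """Linear arbitration: alpha = f(confidence) is the robot's share of control."""
    alpha = piecewise_linear(confidence, xs, ys)
    return [(1 - alpha) * h + alpha * r for h, r in zip(u_human, u_robot)]


# Hypothetical user-chosen breakpoints: no help below confidence 0.4,
# ramping to at most 80% robot authority at full confidence.
XS, YS = [0.0, 0.4, 1.0], [0.0, 0.0, 0.8]
```

With these breakpoints, the user stays fully in control until the robot is fairly confident and is never fully overridden. Editing `XS` and `YS` is the entire customization, which keeps the per-user function easy to state and adjust.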
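Items 21 and 22 above describe a model relating intended and measured sip-and-puff commands, which is used to filter or correct unintended inputs. The sketch below makes several simplifying assumptions:

- It uses a single-step Bayes model and ignores the temporal transitions that the talk's graphical model captures.
- It uses the usual hard/soft sip and puff command set.
- Its prior and confusion probabilities are hypothetical stand-ins for data-derived ones.

```python
COMMANDS = ("hard_puff", "soft_puff", "soft_sip", "hard_sip")

# Hypothetical prior over intended commands (e.g. estimated from usage data).
PRIOR = {"hard_puff": 0.1, "soft_puff": 0.4, "soft_sip": 0.4, "hard_sip": 0.1}

# Hypothetical likelihood P(measured | intended); each row sums to 1.
# Soft and hard versions of the same command are often confused.
LIKELIHOOD = {
    "hard_puff": {"hard_puff": 0.80, "soft_puff": 0.15, "soft_sip": 0.03, "hard_sip": 0.02},
    "soft_puff": {"hard_puff": 0.30, "soft_puff": 0.60, "soft_sip": 0.07, "hard_sip": 0.03},
    "soft_sip": {"hard_puff": 0.02, "soft_puff": 0.08, "soft_sip": 0.60, "hard_sip": 0.30},
    "hard_sip": {"hard_puff": 0.02, "soft_puff": 0.03, "soft_sip": 0.15, "hard_sip": 0.80},
}


def posterior(measured: str) -> dict:
    """P(intended | measured) via Bayes' rule."""
    joint = {c: PRIOR[c] * LIKELIHOOD[c][measured] for c in COMMANDS}
    total = sum(joint.values())
    return {c: p / total for c, p in joint.items()}


def correct(measured: str, accept: float = 0.6):
    """Return the command to execute, or None to filter the input out.

    Executes the most probable intended command when its posterior is at
    least `accept` (this corrects the measurement when the two differ);
    otherwise the input is treated as ambiguous and dropped.
    """
    post = posterior(measured)
    best = max(post, key=post.get)
    return best if post[best] >= accept else None
```

With these numbers, a measured hard puff is ambiguous, with a posterior of about 0.57 for a soft puff. So `correct("hard_puff")` filters it, while `correct("hard_puff", accept=0.5)` corrects it to a soft puff. The threshold trades dropped commands against wrongly executed ones.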
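Items 23 and 24 above describe shared control that learns a model of the human-robot system and uses it to reject or override unsafe commands, while otherwise leaving the human in control. The sketch below makes three assumptions:

- The system is a hypothetical 1-D one.
- A linear least-squares model fitted from logged data stands in for whatever model class the work actually uses.
- A single state limit `x_max` serves as the task-agnostic safety constraint.

```python
from typing import Sequence, Tuple

Model = Tuple[float, float]  # (a, b) in the hypothetical model x_next = a*x + b*u


def fit_linear_model(xs: Sequence[float], us: Sequence[float],
                     xs_next: Sequence[float]) -> Model:
    """Least-squares fit of x_next ~ a*x + b*u by solving the 2x2 normal equations."""
    sxx = sum(x * x for x in xs)
    sxu = sum(x * u for x, u in zip(xs, us))
    suu = sum(u * u for u in us)
    sxy = sum(x * y for x, y in zip(xs, xs_next))
    suy = sum(u * y for u, y in zip(us, xs_next))
    det = sxx * suu - sxu * sxu
    a = (sxy * suu - sxu * suy) / det
    b = (sxx * suy - sxu * sxy) / det
    return a, b


def rollout_is_safe(x: float, u: float, model: Model, x_max: float, horizon: int) -> bool:
    """Predict `horizon` steps holding u constant; safe if x never exceeds x_max."""
    a, b = model
    for _ in range(horizon):
        x = a * x + b * u
        if x > x_max:
            return False
    return True


def safety_filter(x: float, u_human: float, model: Model,
                  x_max: float = 1.0, horizon: int = 5) -> Tuple[float, bool]:
    """Pass the human's command if predicted safe; otherwise scale it down.

    Returns (command, intervened). A scale of 0.0 rejects the command.
    """
    for scale in (1.0, 0.75, 0.5, 0.25, 0.0):
        u = scale * u_human
        if rollout_is_safe(x, u, model, x_max, horizon):
            return u, scale < 1.0
    return 0.0, True  # no safe scaling found: reject the command
```

Scaling the human's command toward zero is just one simple override policy, chosen for illustration. The `intervened` flag marks where the autonomy stepped in. That information is relevant when such runs are later used as demonstrations, as in items 25 and 26.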
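Item 27 above says that body-machine interfaces traditionally use PCA to map high-dimensional residual body motions to a few control signals. The sketch below is dependency-free and assumes hypothetical calibration recordings (for example, from body-worn sensors) stored as lists of floats. Power iteration with deflation stands in for a library eigensolver such as `numpy.linalg.eigh`.

```python
import random
from typing import List

Vector = List[float]


def mean(rows: List[Vector]) -> Vector:
    """Per-dimension mean of the calibration samples."""
    n = len(rows)
    return [sum(col) / n for col in zip(*rows)]


def covariance(rows: List[Vector], mu: Vector) -> List[Vector]:
    """Sample covariance matrix (divides by n - 1)."""
    n, d = len(rows), len(mu)
    cov = [[0.0] * d for _ in range(d)]
    for row in rows:
        z = [v - m for v, m in zip(row, mu)]
        for i in range(d):
            for j in range(d):
                cov[i][j] += z[i] * z[j] / (n - 1)
    return cov


def top_components(cov: List[Vector], k: int, iters: int = 500, seed: int = 0) -> List[Vector]:
    """Top-k eigenvectors of a covariance matrix via power iteration with deflation."""
    rng = random.Random(seed)
    d = len(cov)
    a = [row[:] for row in cov]
    comps = []
    for _ in range(k):
        v = [rng.gauss(0.0, 1.0) for _ in range(d)]
        for _ in range(iters):
            w = [sum(a[i][j] * v[j] for j in range(d)) for i in range(d)]
            norm = sum(x * x for x in w) ** 0.5
            v = [x / norm for x in w]
        # Rayleigh quotient gives the eigenvalue; remove it (Hotelling deflation).
        lam = sum(v[i] * sum(a[i][j] * v[j] for j in range(d)) for i in range(d))
        a = [[a[i][j] - lam * v[i] * v[j] for j in range(d)] for i in range(d)]
        comps.append(v)
    return comps


class BodyMachineInterface:
    """Project d-dimensional body signals onto their top-k principal components."""

    def __init__(self, calibration: List[Vector], k: int = 2):
        self.mu = mean(calibration)
        self.components = top_components(covariance(calibration, self.mu), k)

    def control(self, sample: Vector) -> Vector:
        """Return k control signals for one body-signal sample."""
        z = [v - m for v, m in zip(sample, self.mu)]
        return [sum(c * x for c, x in zip(comp, z)) for comp in self.components]
```

Each call to `control()` turns one high-dimensional sample into k signals, for example k = 2 for a 2-D velocity command. The mapping is fixed after calibration, so any later adaptation would mean recomputing or updating the components.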

Knowledge Vault built by David Vivancos 2024