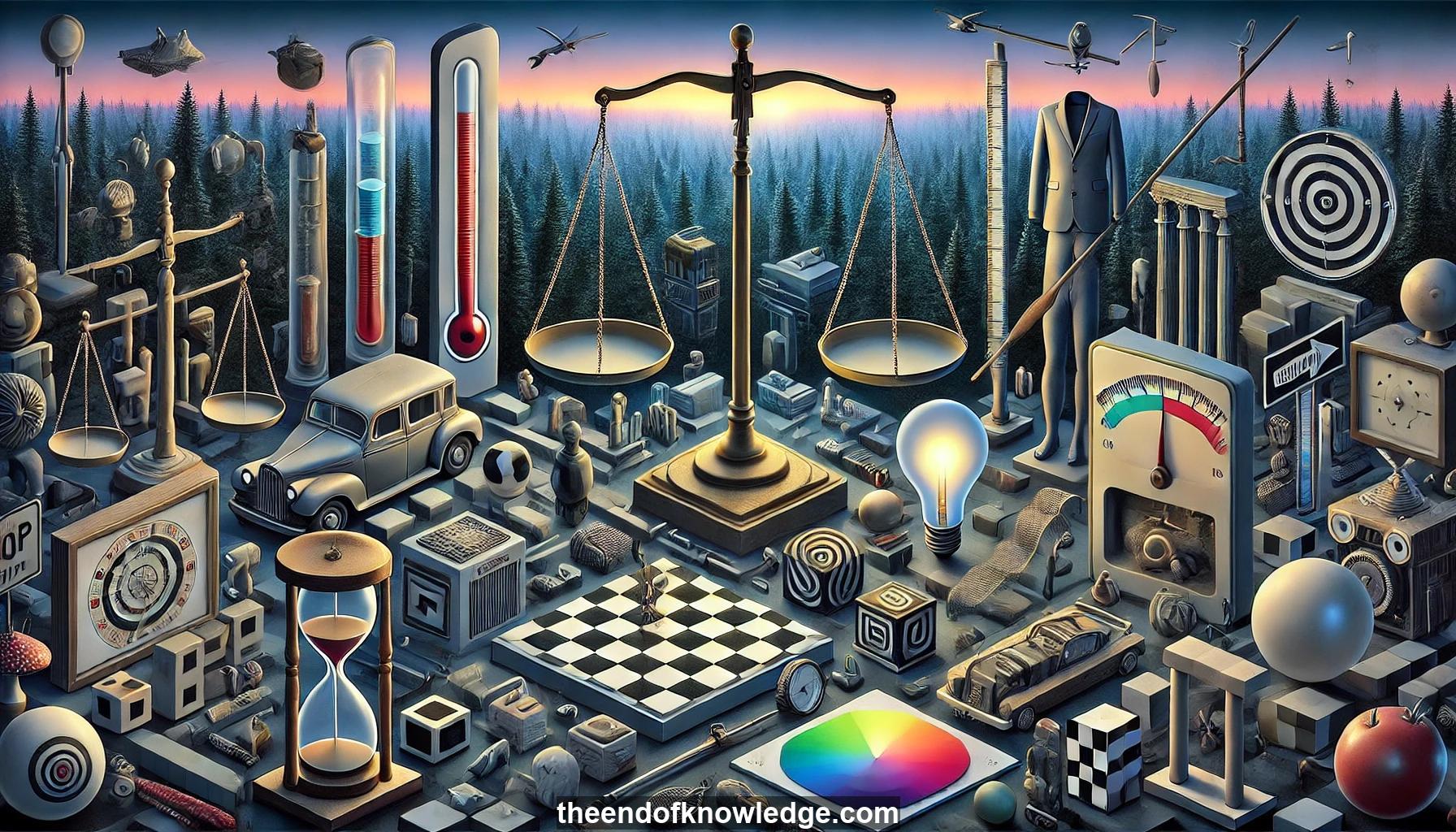

Concept Graph & Summary using Claude 3.5 Sonnet | ChatGPT-4o | Llama 3:

Summary:

1.- Calibration: Ensuring predicted probabilities match the observed frequencies of outcomes.

2.- Deep learning models: Often overconfident in predictions compared to older neural networks.

3.- Temperature scaling: Simple method to calibrate deep neural networks by dividing logits by a single constant (the temperature) before the softmax.

4.- Fairness: Ensuring equal treatment across different demographic groups in machine learning predictions.

5.- Group calibration: Calibrating predictions separately for different demographic groups.

6.- Impossibility theorem: Cannot achieve both group-wise calibration and equal false positive/negative rates across demographics, except in degenerate cases such as equal base rates or perfect prediction.

7.- Adversarial examples: Imperceptible changes to inputs that cause machine learning models to misclassify with high confidence.

8.- White box attacks: Creating adversarial examples with access to model gradients.

9.- Black box attacks: Creating adversarial examples without access to model internals, only predictions.

10.- Simple Black Box Attack (SimBA): Efficient method for creating adversarial examples with limited queries to target model.

11.- Robustness to noise: Natural images maintain classification under small random perturbations.

12.- Detecting adversarial examples: Leveraging differences in noise robustness between natural and adversarial images.

13.- Over-optimization: Adversarial examples pushed far into misclassified region to evade detection.

14.- Adversarial transferability: Difficulty in creating new adversarial examples from existing ones.

15.- Gray box attacks: Adversary unaware of detection method being used.

16.- White box attacks against detection: Adversary aware of and optimizing against specific detection method.

17.- False positive/negative rates: Metrics for evaluating fairness and detection performance.

18.- Expected Calibration Error (ECE): Measure of calibration quality, averaging the gap between predicted confidence and observed accuracy across confidence bins.

19.- Overfitting: Phenomenon where model performs well on training data but poorly on new data.

20.- Log likelihood: Objective function often used in training that can lead to overconfidence.

21.- DenseNet: Deep learning architecture mentioned as an example of modern neural networks.

22.- COMPAS system: Automated system for predicting criminal recidivism, used as example in fairness discussion.

23.- Feature extractors: Components of machine learning models that can be exploited by adversarial examples.

24.- Gradient descent: Optimization method used in creating white box adversarial examples.

25.- Google Cloud API: Example of a black box model that can be attacked with limited queries.

26.- Gaussian noise: Random perturbations used to test robustness of images and detect adversarial examples.

27.- PGD and Carlini-Wagner attacks: Common methods for generating adversarial examples.

28.- Logits: Unnormalized outputs of neural networks before final activation function.

29.- Softmax: Function used to convert logits into probability distributions.

30.- Fairness constraints: Conditions imposed on models to ensure equal treatment across demographics.
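
The calibration items above (temperature scaling, ECE, log likelihood, logits, softmax) can be tied together in a small sketch. Everything here is an illustrative assumption rather than the method as presented in the talk: the data is synthetic, the function names are hypothetical, the ECE uses 10 equal-width bins, and the temperature is fitted by a simple grid search over held-out negative log likelihood instead of gradient-based optimization.

```python
import numpy as np

def softmax(logits, temperature=1.0):
    """Convert logits to probabilities after dividing them by a temperature."""
    z = np.asarray(logits, dtype=float) / temperature
    z = z - z.max(axis=1, keepdims=True)  # subtract row max for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=1, keepdims=True)

def expected_calibration_error(probs, labels, n_bins=10):
    """Bin-weighted average of |accuracy - mean confidence| over equal-width bins."""
    confidence = probs.max(axis=1)
    correct = (probs.argmax(axis=1) == labels).astype(float)
    edges = np.linspace(0.0, 1.0, n_bins + 1)
    ece = 0.0
    for lo, hi in zip(edges[:-1], edges[1:]):
        in_bin = (confidence > lo) & (confidence <= hi)
        if in_bin.any():
            gap = abs(correct[in_bin].mean() - confidence[in_bin].mean())
            ece += in_bin.mean() * gap  # weight by fraction of samples in the bin
    return ece

def negative_log_likelihood(logits, labels, temperature):
    """Mean negative log probability assigned to the true class."""
    probs = softmax(logits, temperature)
    return -np.mean(np.log(probs[np.arange(len(labels)), labels] + 1e-12))

def fit_temperature(logits, labels, candidates=np.linspace(0.5, 5.0, 91)):
    """Grid search (a stand-in for gradient descent) for the best temperature."""
    losses = [negative_log_likelihood(logits, labels, t) for t in candidates]
    return float(candidates[int(np.argmin(losses))])

# Synthetic setup (assumption): labels follow softmax(true_logits), while the
# "model" outputs logits scaled by 3, which makes it overconfident.
rng = np.random.default_rng(0)
true_logits = rng.normal(size=(5000, 5))
true_probs = softmax(true_logits)
labels = np.array([rng.choice(5, p=p) for p in true_probs])
model_logits = 3.0 * true_logits

# Fit the temperature on one half (validation) and evaluate on the other half.
val, test = slice(0, 2500), slice(2500, 5000)
T = fit_temperature(model_logits[val], labels[val])
ece_before = expected_calibration_error(softmax(model_logits[test]), labels[test])
ece_after = expected_calibration_error(softmax(model_logits[test], T), labels[test])
print(f"T={T:.2f}  ECE before={ece_before:.3f}  ECE after={ece_after:.3f}")
```

Because every logit is divided by the same positive constant, the predicted class never changes; only the confidence does. In this synthetic setup the fitted temperature should land near the scaling factor of 3, and the ECE on the held-out half should drop accordingly.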

Knowledge Vault built by David Vivancos 2024