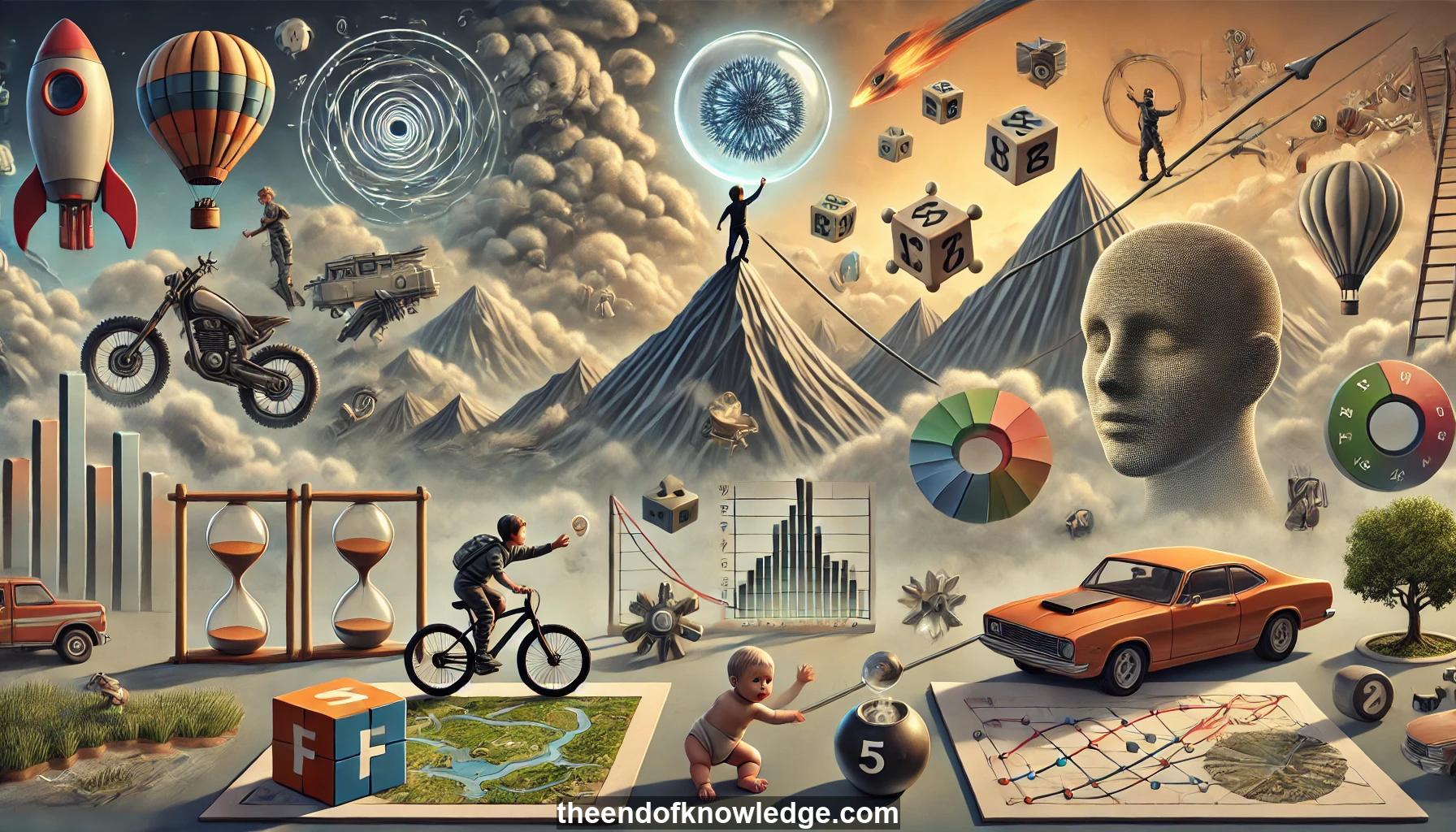

Concept Graph & Summary using Claude 3.5 Sonnet | ChatGPT-4o | Llama 3:

Summary:

1.- Self-supervised learning: Learning from unlabeled data by predicting parts of the input from other parts, without human-curated labels.

2.- Supervised learning limitations: Requires large labeled datasets, which are not available for every problem.

3.- Reinforcement learning inefficiency: Requires so many trials that it is impractical for real-world applications like self-driving cars.

4.- Human learning efficiency: Humans learn quickly from few samples, suggesting that current machine learning approaches are missing something.

5.- Learning world models: The key to improving AI is learning models of how the world works, which in turn enables efficient learning.

6.- Baby learning stages: Babies learn basic concepts like object permanence and gravity over time through world interaction.

7.- Self-supervised learning definition: Training large networks to understand the world through prediction tasks on unlabeled data.

8.- Prediction under uncertainty: Challenge in self-supervised learning for continuous, high-dimensional data like images or video.

9.- Latent variable models: Using additional variables to model uncertainty and generate multiple possible outputs.

10.- Energy-based learning: Formulating self-supervised learning as learning energy functions that capture data dependencies.

11.- Contrastive methods: Pushing down energy of data points while pushing up energy of points outside the data manifold.

12.- Regularized latent variables: Limiting the volume of low-energy space by regularizing latent variables.

13.- Sparse coding: Early example of regularized latent variable systems for learning data representations.

14.- Predictive sparse decomposition: Training a predictor to estimate optimal sparse codes, avoiding expensive optimization.

15.- Hierarchical representations: Learning multi-layer sparse codes for complex data like images, an ongoing research area.

16.- Model-based reinforcement learning: Using learned world models to accelerate skill acquisition, especially in motor tasks.

17.- Optimal control theory: Classical approach to control using differentiable world models, basis for some modern AI techniques.

18.- Autonomous driving example: Learning to drive in simulation using a world model trained on real driving data.

19.- Video prediction model: Neural network trained to predict future frames in driving videos, handling uncertainty with latent variables.

20.- Regularizing latent variables: Using techniques like dropout to prevent latent variables from capturing too much information.

21.- Variational autoencoder similarity: Adding noise to encoder output to limit information content, similar to VAEs.

22.- Policy network training: Using the learned world model to train a driving policy through backpropagation, without real-world interaction.

23.- Inverse curiosity: Encouraging the agent to stay in areas where its world model is accurate, avoiding unreliable predictions.

24.- Handling uncertainty in continuous spaces: Key technical challenge in self-supervised learning for high-dimensional data.

25.- GAN limitations: While useful for handling uncertainty, GANs are difficult to train reliably.

26.- Alternatives to GANs: Seeking more reliable methods for learning under uncertainty in high-dimensional spaces.

27.- Model-based RL resurgence: Renewed interest in model-based reinforcement learning, despite previous theoretical limitations.

28.- Classification workaround: Some self-supervised methods avoid uncertainty by turning prediction into classification tasks.

29.- Jigsaw puzzle example: Self-supervised task of predicting relative positions of image patches, avoiding pixel-level prediction.

30.- Future direction: Need to directly address the problem of learning under uncertainty in continuous, high-dimensional spaces.
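
Points 10, 12 and 13 can be illustrated with a small sparse coding sketch. It is not taken from the talk: it assumes a linear decoder W, an L1 penalty on the latent code z, and inference by ISTA (iterative shrinkage-thresholding), a standard solver chosen here for brevity. All names (`infer_code`, `energy`, `lam`) and the toy data are hypothetical.

```python
import random

def soft_threshold(v, t):
    """Shrink v toward zero by t (proximal step for the L1 penalty)."""
    if v > t:
        return v - t
    if v < -t:
        return v + t
    return 0.0

def reconstruct(W, z):
    """Decode a latent code: y_hat = W z, with W stored as a list of rows."""
    return [sum(w * zj for w, zj in zip(row, z)) for row in W]

def energy(y, W, z, lam):
    """E(y, z) = 0.5 * ||y - W z||^2 + lam * ||z||_1 (assumed form)."""
    residual = [yi - ri for yi, ri in zip(y, reconstruct(W, z))]
    return 0.5 * sum(r * r for r in residual) + lam * sum(abs(zj) for zj in z)

def infer_code(y, W, lam=0.1, n_steps=300):
    """Approximate argmin_z E(y, z) with ISTA, starting from z = 0."""
    n_in, n_code = len(W), len(W[0])
    # Conservative step size: the squared Frobenius norm of W bounds the
    # Lipschitz constant of the reconstruction gradient from above.
    step = 1.0 / sum(w * w for row in W for w in row)
    z = [0.0] * n_code
    for _ in range(n_steps):
        residual = [ri - yi for ri, yi in zip(reconstruct(W, z), y)]
        grad = [sum(W[i][j] * residual[i] for i in range(n_in))
                for j in range(n_code)]
        z = [soft_threshold(zj - step * gj, step * lam)
             for zj, gj in zip(z, grad)]
    return z

# Toy data (hypothetical): a random dictionary and a 2-sparse ground-truth code.
random.seed(0)
W = [[random.gauss(0.0, 1.0) for _ in range(16)] for _ in range(8)]
z_true = [0.0] * 16
z_true[3], z_true[11] = 1.5, -2.0
y = reconstruct(W, z_true)

lam = 0.1
z_hat = infer_code(y, W, lam)
free_energy = energy(y, W, z_hat, lam)      # energy after minimizing over z
zero_energy = energy(y, W, [0.0] * 16, lam) # energy with no latent explanation

print("energy with inferred code:", free_energy)
print("energy with zero code:    ", zero_energy)
```

In this sketch the energy of an observation is its value after minimizing over z. The L1 term plays the role of the regularizer in point 12: without it, any input that W can reconstruct would get low energy, whereas with it, low energy is reserved for inputs that a few dictionary columns can explain. Predictive sparse decomposition (point 14) would replace the loop in `infer_code` with a trained predictor of the code.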

Knowledge Vault built by David Vivancos 2024