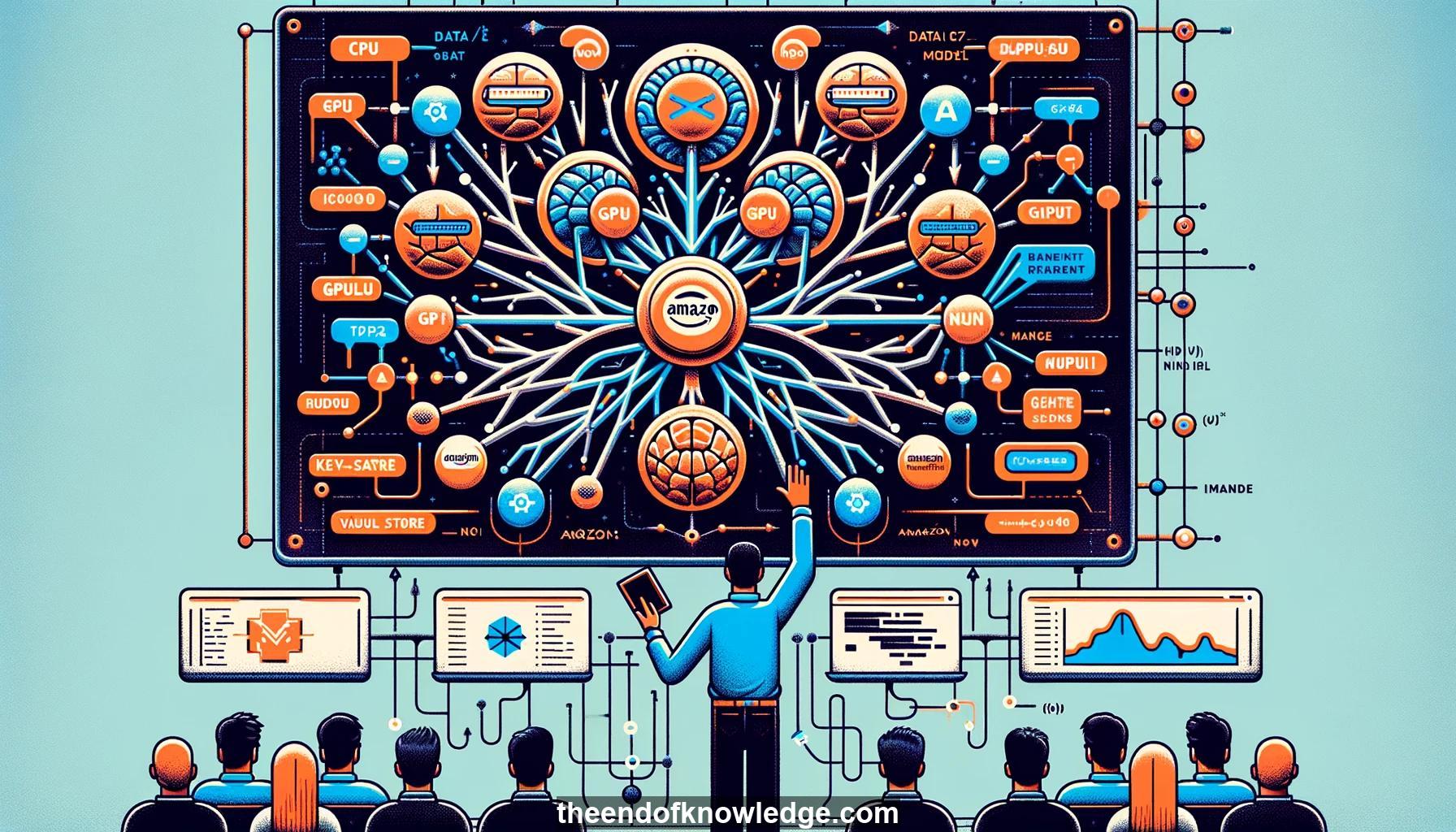

Concept graph and summary generated using Claude 3.5 Sonnet | ChatGPT-4o | Llama 3:

Summary:

1.- The tutorial covers distributed learning with MXNet, presented by Alex Smola and Aran Khanna from Amazon.

2.- Take a picture of the slide with installation instructions as a reference.

3.- Raise your hand if you have issues during the installation process and someone will assist you.

4.- Introduction of the speakers, Alex and Aran, who work for Amazon, though not in package delivery.

5.- Get started with MXNet at gluon.mxnet.io.

6.- MXNet combines benefits of symbolic frameworks (Caffe, Keras, graph-mode TensorFlow) and imperative frameworks (PyTorch).

7.- MXNet offers performance of symbolic frameworks and flexibility of imperative frameworks for deep learning.

8.- MXNet's NDArray handles multi-device execution on CPU, GPU, and more using non-blocking, lazy evaluation.

9.- Device context in MXNet allows easy data movement between devices.

10.- MXNet doesn't allow incrementing individual elements in NDArrays to enforce parallelism and performance.

11.- MXNet's autograd allows computing gradients of dynamic graphs and running optimization.

12.- Example of defining and training a simple linear regression model in MXNet.

13.- Using MXNet's autograd to automatically compute gradients for optimization, even for dynamic graphs with control flow.

14.- Moving to multi-layer perceptrons, a type of neural network, as a more sophisticated example.

15.- Example code defining a multi-layer neural network architecture using MXNet's sequential layer API.

16.- The code allows flexibly defining networks in a procedural manner while getting symbolic framework performance.

17.- Discussion of activation functions, loss functions, and gradient-based optimization for training neural networks.

18.- Generating synthetic data and preparing it for training a neural network.

19.- Setting up the model, loss function, optimizer and fitting the model to the generated training data.

20.- Evaluating the trained model's predictions and loss on test data to assess performance.

21.- The MXNet code allows concisely defining models that can be automatically trained via gradient descent.

22.- More complex architectures like convolutional neural networks can be defined using a similar API.

23.- Pre-defined neural network architectures are also available in MXNet's model zoo for common tasks.

24.- MXNet can utilize multiple GPUs and machines for distributed training of large models.

25.- Performance comparisons showing MXNet's speed relative to other popular deep learning frameworks.

26.- Distributed key-value store enables easy parameter synchronization for distributed training.

27.- Discussion of strategies for efficient multi-GPU training, like data and model parallelism.

28.- Latest MXNet release includes new optimizations for fast distributed training on large clusters.

29.- Additional features in MXNet include support for sparse data, HDF5 format, and optimized math operations.

30.- Pointers to MXNet tutorials, examples, and resources to learn more and get started quickly.
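
Points 11 and 13 describe computing gradients through dynamic graphs that contain control flow. The idea can be illustrated with a minimal define-by-run sketch in plain Python. This is not MXNet's autograd implementation or API; the `Var` class and the function `f` are hypothetical names introduced only for illustration, and the sketch handles only scalar addition and multiplication.

```python
class Var:
    """Scalar that records how it was computed so gradients can flow back."""

    def __init__(self, value, parents=()):
        self.value = value
        self.grad = 0.0
        # Each entry is (parent Var, local derivative of self w.r.t. parent).
        self.parents = parents

    def __add__(self, other):
        return Var(self.value + other.value, ((self, 1.0), (other, 1.0)))

    def __mul__(self, other):
        return Var(self.value * other.value,
                   ((self, other.value), (other, self.value)))

    def backward(self):
        # Build a topological order of the graph that was recorded at run time.
        order, seen = [], set()

        def visit(node):
            if id(node) not in seen:
                seen.add(id(node))
                for parent, _ in node.parents:
                    visit(parent)
                order.append(node)

        visit(self)
        self.grad = 1.0
        # Walk from the output back to the inputs, applying the chain rule.
        for node in reversed(order):
            for parent, local in node.parents:
                parent.grad += local * node.grad


def f(x):
    """Square x, then keep doubling while the value is below 100."""
    y = x * x
    two = Var(2.0)
    # Data-dependent loop: the graph shape depends on the input value.
    while y.value < 100.0:
        y = y * two
    return y


x = Var(3.0)
y = f(x)
y.backward()
print(y.value, x.grad)
```

For `x = 3` the loop runs four times, so the recorded graph computes `16 * x * x`; a different input would record a different graph, which is the property the summary attributes to dynamic-graph autograd.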

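Points 26 and 27 mention a distributed key-value store for parameter synchronization and data-parallel training. The pattern can be sketched in plain Python as follows. This is a single-process toy, not MXNet's KVStore: `ToyKVStore`, `shard_gradient`, the learning rate, and the synthetic data are all assumptions made for illustration, and the choice to average worker gradients inside `push` is a simplification.

```python
class ToyKVStore:
    """In-process stand-in for a distributed key-value store (hypothetical)."""

    def __init__(self, learning_rate):
        self.learning_rate = learning_rate
        self.weights = {}

    def init(self, key, value):
        self.weights[key] = list(value)

    def push(self, key, worker_grads):
        # Aggregate: average the workers' gradients, then take one SGD step.
        n = len(worker_grads)
        for i in range(len(self.weights[key])):
            avg = sum(g[i] for g in worker_grads) / n
            self.weights[key][i] -= self.learning_rate * avg

    def pull(self, key):
        # Every worker receives the same, freshly updated parameters.
        return list(self.weights[key])


def shard_gradient(w, shard):
    """Mean gradient of 0.5 * (w[0] * x + w[1] - y) ** 2 over one data shard."""
    g0 = g1 = 0.0
    for x, y in shard:
        err = w[0] * x + w[1] - y
        g0 += err * x
        g1 += err
    return [g0 / len(shard), g1 / len(shard)]


# Synthetic data from y = 2x + 1, split evenly across two simulated workers.
data = [(i / 10.0, 2.0 * (i / 10.0) + 1.0) for i in range(-20, 20)]
shards = [data[0::2], data[1::2]]

kv = ToyKVStore(learning_rate=0.1)
kv.init("w", [0.0, 0.0])
for step in range(500):
    w = kv.pull("w")                                # workers fetch parameters
    grads = [shard_gradient(w, s) for s in shards]  # each worker uses its shard
    kv.push("w", grads)                             # store aggregates and updates

w = kv.pull("w")
print(w)
```

Because the shards are equal in size, averaging the per-worker gradients gives the same update as one worker processing the full dataset, which is why data parallelism leaves the optimization unchanged while splitting the computation.
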
Knowledge Vault built by David Vivancos 2024