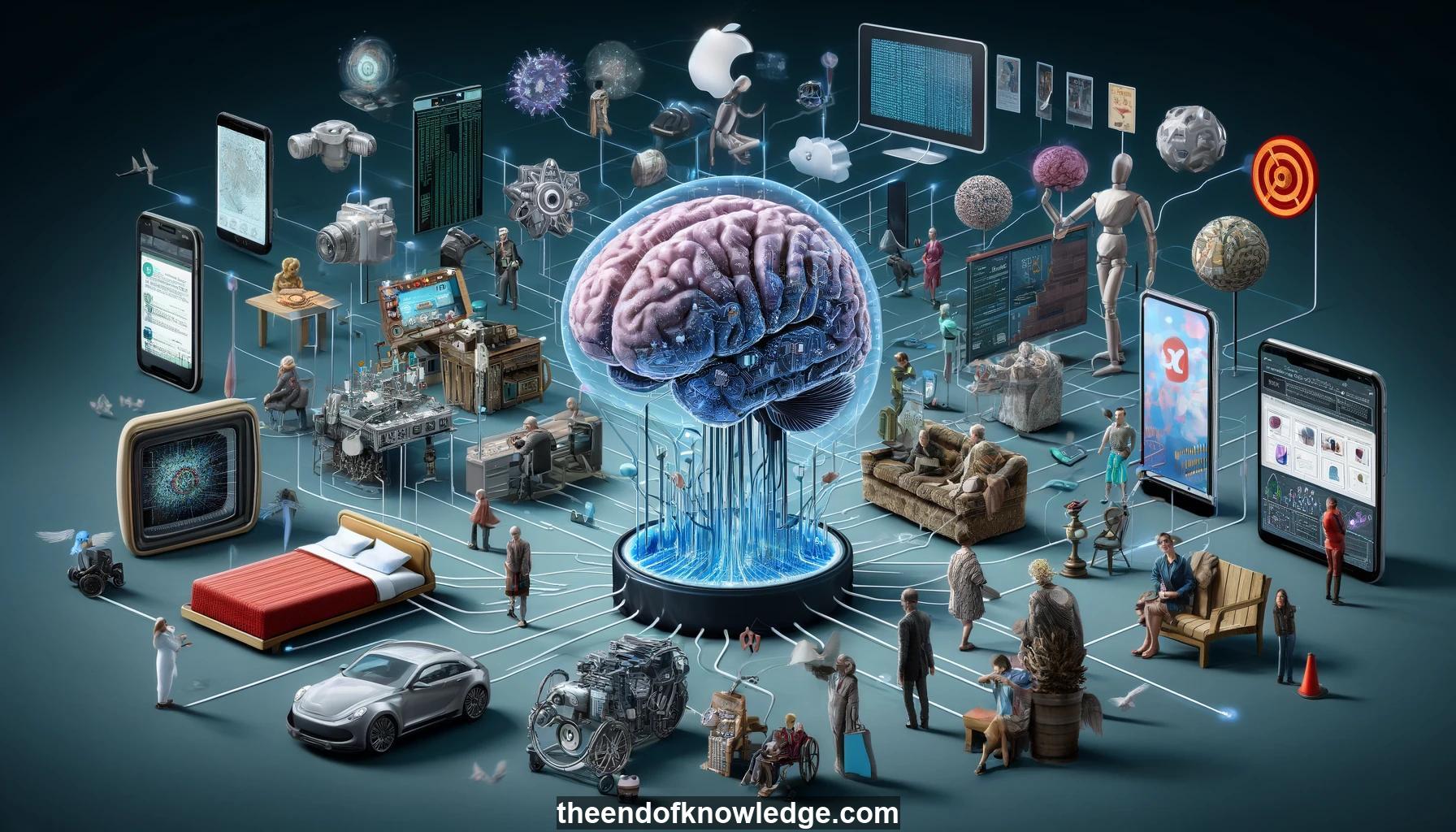

Concept Graph & Summary using Claude 3.5 Sonnet | ChatGPT-4o | Llama 3:

Summary:

1.- Latanya Sweeney discusses how AI designers shape society through technology design decisions, often accidentally and without oversight.

2.- Early photography and phone recording laws in the U.S. were shaped by technology design choices, such as the lack of a mute button.

3.- Sleep Number bed sensors gather intimate data without user control, while Apple Watch stores data locally, reflecting different design values.

4.- Designers are the new policymakers in a technocracy, even if unelected, as their solutions are market-driven without much oversight.

5.- Sweeney's early work showed how purportedly anonymized health data could actually identify individuals by linking to publicly available information.

6.- In the late 1990s, AI focused more on computing mathematical ideals than on mimicking human behavior. Many of today's AI applications were then still research topics.

7.- Sweeney found that searching her name generated online ads implying she had an arrest record, which happened more often for "black-sounding" than for "white-sounding" names.

8.- This ad delivery disparity could violate U.S. anti-discrimination laws if it led to unequal treatment, e.g. in employment decisions.

9.- A similar ad delivery bias appeared on websites aimed at black audiences, potentially violating credit reporting fairness regulations.

10.- Health data in the U.S. flows in complex ways, with only half the pathways covered by HIPAA medical privacy rules.

11.- Washington state sold hospital data cheaply in a way that could be re-identified, until Sweeney's research prompted a law change.

12.- Technology is changing fundamental societal values and institutions in often accidental ways, as designers focus on products over broader impacts.

13.- Sweeney's experiments demonstrating these issues have helped advocates, regulators, and journalists understand and address technological shifts.

14.- She started a Harvard class where students conducted impactful experiments revealing unforeseen technology consequences and presented findings to D.C. policymakers.

15.- Example student projects included algorithms to proactively catch online fraud, studies of price discrimination by zip code demographics, and investigations of privacy issues.

16.- Student Aron exposed how Facebook leaked user locations, prompting swift change, but also faced personal retaliation from Facebook.

17.- Designers can minimize unforeseen harms by proactively considering how things could go wrong and seeking outside perspectives early on.

18.- Product managers bridging design teams and broader organizational concerns are well-positioned to spur proactive consideration of potential downsides.

19.- Academics tend to punt these considerations to commercialization, but incorporating them into foundational research would lead to better outcomes.

20.- Discrimination is legally complex - offering a student discount is allowed, systematically charging more by race is not.

21.- Language models may reflect societal biases in training data - designers must choose whether to try to change or entrench norms.

22.- Some worry publicizing tech vulnerabilities enables abuse, but Sweeney found shining a light often spurs responsible fixes that wouldn't happen otherwise.

23.- Datasets used for machine learning may have inherent unknown biases that get perpetuated - ongoing external auditing is needed.

24.- While co-design with users is valuable, the core responsibility lies with technologists themselves to proactively "bake in" social considerations.

25.- Facial recognition hit major turbulence in 2001, caught between backlash over Super Bowl surveillance and increased acceptance after 9/11 - a technology's trajectory can shift quickly.

26.- Carnegie Mellon workshops on building in privacy protections had limited success - the onus is on core designers themselves.

27.- A forthcoming paper shows vulnerabilities in 36 state election websites during the 2016 presidential election.

28.- The top-level message is that while not everything can be anticipated, harms found through external audits should be met with constructive fixes.

29.- AI technology designers have immense power in a global technocracy to shape societal rules and values in lasting ways.

30.- Proactive steps by AI designers to envision and experiment around potential harms are crucial to responsibly wielding this influence for good.
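Point 5 describes re-identification by linking "anonymized" records to public information. The sketch below illustrates that general idea under stated assumptions; it is not Sweeney's actual method or data. The choice of zip code, birth date, and sex as linking fields, the function names, and every record shown are hypothetical and invented for illustration.

```python
from collections import defaultdict

# Hypothetical toy data: all names and values are invented.
health_records = [  # "anonymized": no names, but other fields remain
    {"zip": "02138", "birth_date": "1960-07-31", "sex": "F", "diagnosis": "asthma"},
    {"zip": "02139", "birth_date": "1975-01-15", "sex": "M", "diagnosis": "diabetes"},
    {"zip": "02139", "birth_date": "1975-01-15", "sex": "M", "diagnosis": "flu"},
]
public_records = [  # e.g. a public list that includes names
    {"name": "Alice Example", "zip": "02138", "birth_date": "1960-07-31", "sex": "F"},
    {"name": "Bob Example", "zip": "02139", "birth_date": "1975-01-15", "sex": "M"},
]

# Assumed linking fields shared by both datasets.
QUASI_IDENTIFIERS = ("zip", "birth_date", "sex")


def linking_key(record):
    """Return the tuple of quasi-identifier values for one record."""
    return tuple(record[field] for field in QUASI_IDENTIFIERS)


def group_by_key(records):
    """Group records by their quasi-identifier tuple."""
    groups = defaultdict(list)
    for record in records:
        groups[linking_key(record)].append(record)
    return groups


def link_records(health, public):
    """Return (name, diagnosis) pairs where the key is unique in both datasets."""
    health_groups = group_by_key(health)
    public_groups = group_by_key(public)
    matches = []
    for key, health_group in health_groups.items():
        public_group = public_groups.get(key, [])
        # Only an unambiguous one-to-one match counts as a re-identification.
        if len(health_group) == 1 and len(public_group) == 1:
            matches.append((public_group[0]["name"], health_group[0]["diagnosis"]))
    return matches


reidentified = link_records(health_records, public_records)
```

In this toy data only one person is matched: the second and third health records share the same field values, so neither can be tied to a single name. Coarsening or withholding such fields is one way a release could reduce this kind of linkage.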

Knowledge Vault built by David Vivancos 2024