Concept Graph & Summary using Claude 3.5 Sonnet | ChatGPT-4o | Llama 3:

Summary:

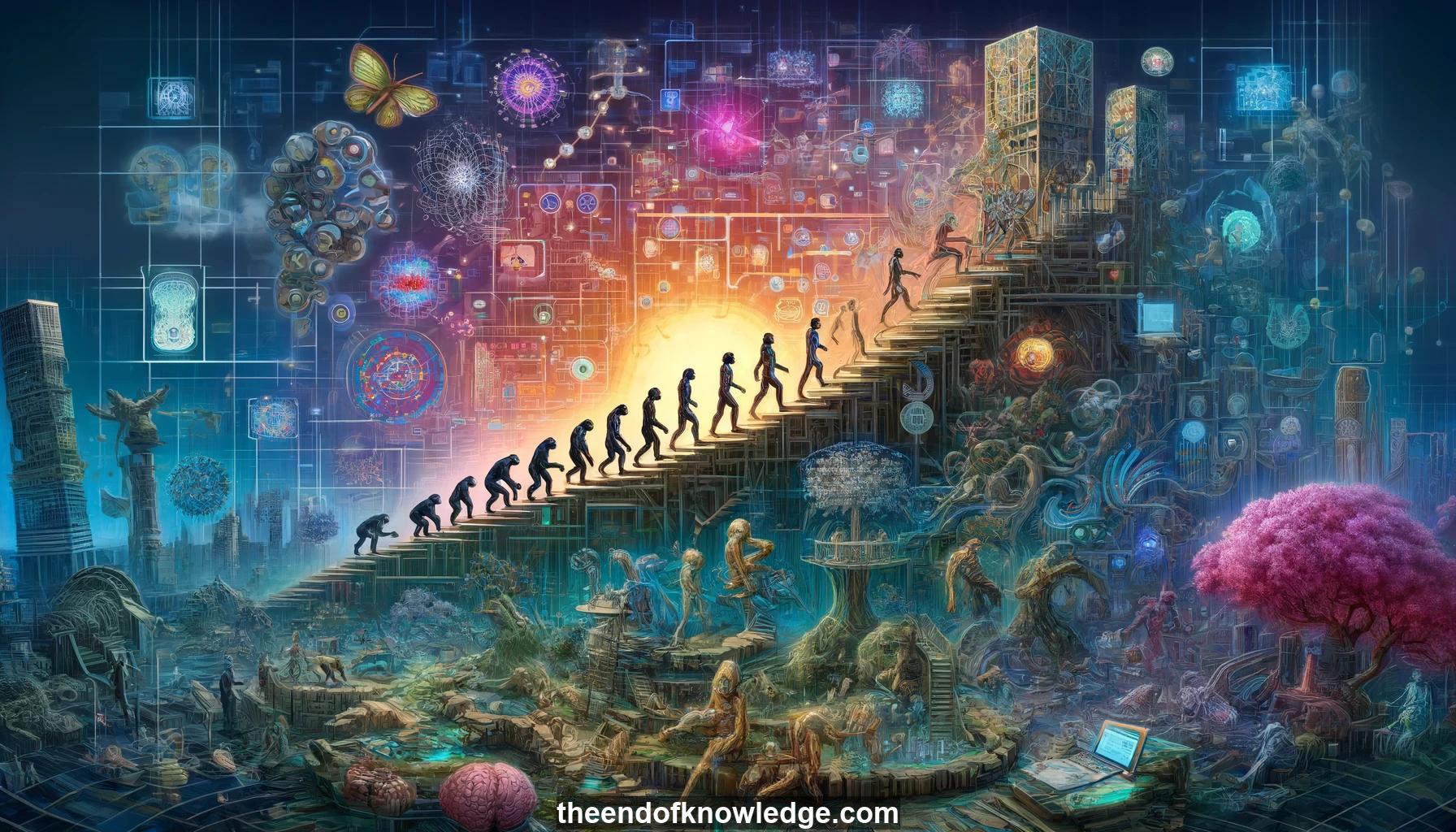

1.- Intelligent creatures evolved through increasing complexity in organisms and their environments.

2.- Artificial intelligence requires both complex algorithms for power and simple ones for generality.

3.- Environments for AI training should have rich variability, grounding in physics, and task complexity to elicit diverse behaviors.

4.- Human games are often repurposed as AI training environments due to their challenges, diversity, and built-in reward functions.

5.- Montezuma's Revenge is a challenging Atari game for AI due to sparse, delayed rewards and the need for human-like concepts.

6.- Feudal reinforcement learning uses a hierarchy of policies: higher levels set increasingly abstract goals, while lower levels act at finer temporal resolution.

7.- Feudal Networks for Deep RL adapt feudal learning principles using neural networks with a manager setting goals and a worker achieving them.

8.- Elastic Weight Consolidation allows neural networks to learn new tasks while retaining performance on previous tasks by constraining important weights.

9.- Progressive Neural Networks avoid catastrophic forgetting by adding columns for new tasks with lateral connections to previous frozen columns.

10.- DISTRAL trains task-specific policies while keeping them close to a shared policy, allowing transfer while avoiding divergence or collapse.

11.- Auxiliary tasks like depth prediction and loop closure classification provide stable gradients that scaffold and speed up reinforcement learning.

12.- UNREAL agents learn a standard policy alongside an auxiliary policy trained to maximize pixel changes, with experience replay improving data efficiency.

13.- Navigation tasks in 3D mazes test AI's ability to explore, memorize, and locate goals using only visual inputs.

14.- Navigation agents benefit from memory, auxiliary tasks like depth prediction, and structured architectures separating representation learning and locomotion.

15.- StreetLearn turns Google Street View of New York City into an interactive RL environment for training navigation at scale.

16.- The StreetLearn navigation agent has specialized pathways for visual processing, goal representation, and locomotion, enabling both task-specific and general navigation.

17.- The StreetLearn navigation agent can localize itself and navigate to goals in NYC using only 84x84 pixel RGB images.

18.- Continuous control tasks in diverse parkour environments test the physical capabilities of simulated agents.

19.- Separating proprioceptive and exteroceptive inputs facilitates learning robust and transferable locomotion skills in simulated agents.

20.- Curricula that progress from easier to harder terrain during an episode lead to better overall learning and transfer.

21.- Humanoid agents develop idiosyncratic but effective and robust locomotion strategies when trained with simple rewards like forward progress.

22.- Complex simulated environments provide a platform for developing AI systems with potential for real-world applications.

23.- Large-scale GPU clusters enable experiments with computationally intensive RL algorithms using RGB visual inputs.

24.- Simplified representations and transfer learning can make challenging RL domains more accessible for research with limited compute.

25.- Sample efficiency remains a significant challenge for applying deep RL to domains like dialogue and robotics where data is expensive.

26.- Hierarchical RL with multiple levels of temporal abstraction is a promising approach for devising useful and achievable subgoals.

27.- Current AI achievements are steps towards AGI, but debate remains over the definition and remaining challenges.

28.- Adaptive computation, memory, and attention within neural networks are key ingredients for progress towards more capable and general AI systems.

29.- Generalizing from simulation to the real world is an important frontier for AI systems aiming to tackle practical applications.

30.- Ongoing research aims to make AI systems more sample-efficient, flexible, and robust in complex environments through architectural and algorithmic innovations.
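
To make point 8 concrete, here is a minimal sketch of the quadratic penalty usually associated with Elastic Weight Consolidation, assuming a diagonal importance estimate per weight. The function names `ewc_penalty` and `ewc_loss`, the flat parameter lists, and the toy numbers are illustrative assumptions, not taken from the talk.

```python
def ewc_penalty(params, anchor_params, importance, lam):
    """Quadratic penalty: (lam / 2) * sum_i F_i * (theta_i - theta_star_i)^2.

    params        : current weights while training on the new task
    anchor_params : weights saved after training on the previous task
    importance    : per-weight importance (e.g. a diagonal Fisher estimate)
    lam           : strength of the constraint (hypothetical hyperparameter)
    """
    return 0.5 * lam * sum(
        f * (p - a) ** 2
        for p, a, f in zip(params, anchor_params, importance)
    )


def ewc_loss(new_task_loss, params, anchor_params, importance, lam=1.0):
    """Total loss: new-task loss plus the penalty for moving important weights."""
    return new_task_loss + ewc_penalty(params, anchor_params, importance, lam)


# Toy example: the first weight is important for the old task, the second is not.
anchor = [1.0, -2.0]
importance = [10.0, 0.1]

moved_important = [1.5, -2.0]    # shifts the important weight by 0.5
moved_unimportant = [1.0, -1.5]  # shifts the unimportant weight by 0.5

print(ewc_penalty(moved_important, anchor, importance, lam=1.0))
print(ewc_penalty(moved_unimportant, anchor, importance, lam=1.0))
```

In this toy setup, moving the important weight costs far more than moving the unimportant one by the same amount, which is the sense in which important weights are constrained.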

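The idea in point 10 of keeping task-specific policies close to a shared policy can be illustrated with a KL-divergence penalty. This is a simplified single-state sketch under stated assumptions: discrete action probabilities, a hypothetical `kl_coeff` weight, and no entropy term. It is not the full DISTRAL objective.

```python
import math


def kl_divergence(p, q):
    """KL(p || q) for two discrete distributions over the same actions."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)


def regularized_objective(expected_return, task_policy, shared_policy, kl_coeff):
    """Task return minus a penalty for drifting away from the shared policy."""
    return expected_return - kl_coeff * kl_divergence(task_policy, shared_policy)


shared = [0.5, 0.5]       # shared policy over two actions (illustrative)
close_task = [0.6, 0.4]   # task policy that stays near the shared one
far_task = [0.99, 0.01]   # task policy that has drifted far away

# With equal returns, the policy closer to the shared one scores higher.
print(regularized_objective(1.0, close_task, shared, kl_coeff=0.5))
print(regularized_objective(1.0, far_task, shared, kl_coeff=0.5))
```

A larger `kl_coeff` pulls the task policies harder towards the shared policy, while a smaller one lets them specialize more.
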
Knowledge Vault built by David Vivancos 2024