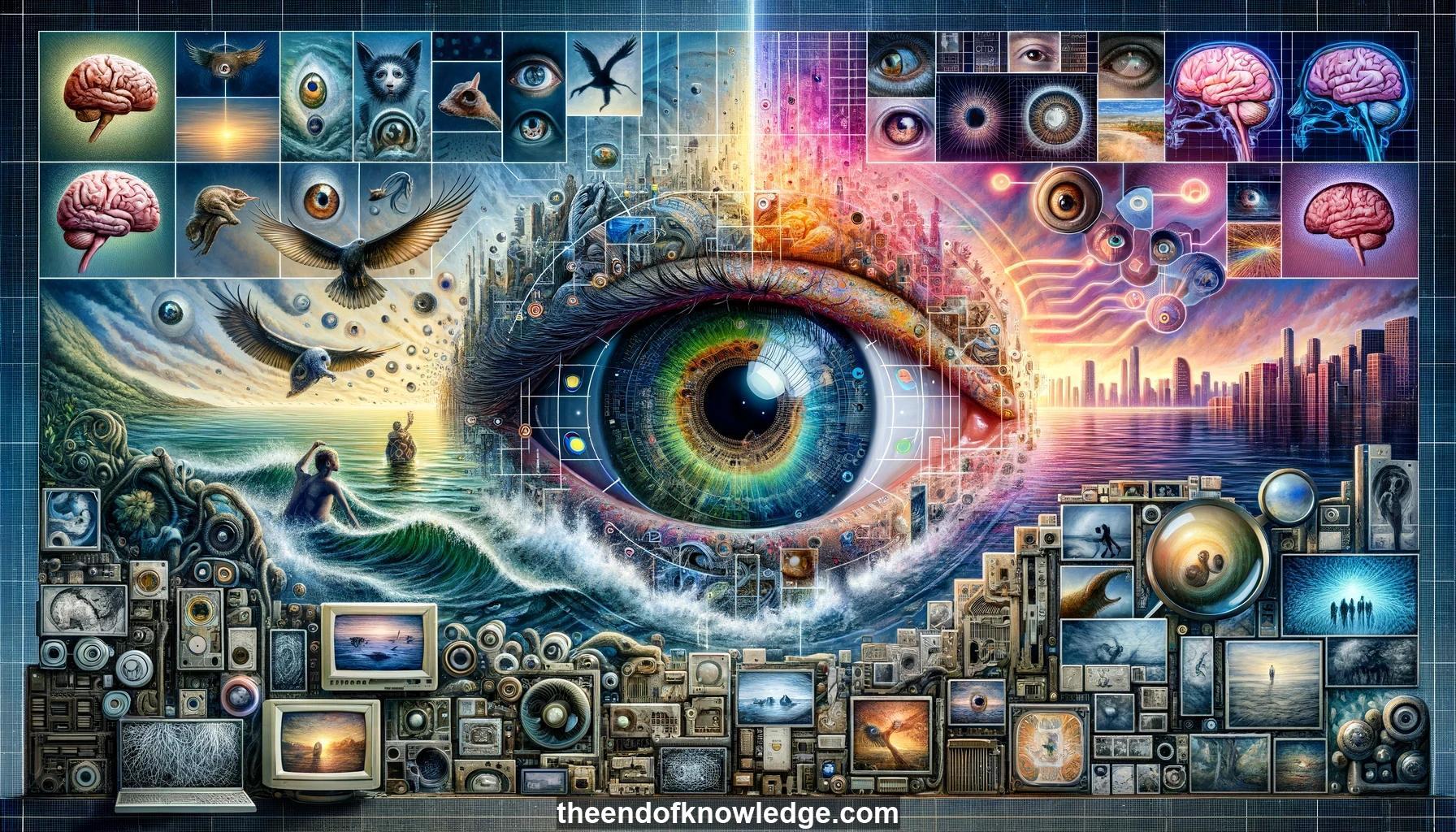

Concept Graph & Summary using Claude 3.5 Sonnet | Chat GPT4o | Llama 3:

Summary:

1.- Fei-Fei Li is an associate professor at Stanford and director of the AI Lab, Computer Vision Lab, and Toyota Human-Centric AI Research.

2.- 540 million years ago, the onset of eyes in animals triggered an explosion in animal speciation, setting off an evolutionary arms race.

3.- The human visual system, developed over 540 million years of evolution, is the most powerful visual machinery in the known universe.

4.- Experiments show that humans can grasp the gist of a scene presented very briefly: after seeing an image flashed for 500 ms, subjects could type detailed descriptions of it.

5.- Total scene understanding - being able to see and understand an entire visual scene the way humans can - is a key goal for computer vision.

6.- Early computer vision work in the 1960s-80s focused on handcrafting models to explain the 3D world from 2D pictures.

7.- Vision is hard because measuring pixels is not the same as understanding scenes; the brain interprets scenes using context and prior knowledge.

8.- After 30 years of modeling, the computer vision community realized learning from big data was the path to visual intelligence.

9.- Around 2000, computer vision found machine learning, gaining tools like SVMs, graphical models, and neural networks to make real progress.

10.- One-shot learning algorithms aimed to learn to recognize objects from very few training examples, inspired by human learning.

11.- However, the human visual system actually requires extensive training - infants' eyes collect hundreds of millions of "training images" in their first years.

12.- The early 2000s saw an explosion of visual data on the internet, with over 85% of cyberspace data being pixel-based by 2016.

13.- The ImageNet project, started in 2009, collected a massive crowd-sourced dataset of 15 million labeled images across 22,000 categories.

14.- Using ImageNet, algorithms were developed to recognize objects while avoiding mistakes by backing off to more general categories when uncertain.

15.- The 2012 ImageNet competition saw a breakthrough in object recognition accuracy with deep convolutional neural networks, ushering in the deep learning renaissance.

16.- Progress on the ImageNet object detection challenge has been slower, with small, textureless objects proving very difficult for algorithms to detect.

17.- Humans excel at using context and a holistic view of an image for recognition, noticing meaningful differences while ignoring irrelevant ones.

18.- Early efforts at generating descriptions of images were limited, using small datasets and known object categories and sentence structures.

19.- Later work aimed to match images to sentences by ranking them, learning features that encoded the semantic similarity between the two.

20.- To generate descriptions directly, recurrent neural networks were used as language models, combined with convolutional neural networks representing the image.

21.- These image captioning models could generate novel descriptions of images, though they still made some errors due to lack of context.

22.- The Visual Genome dataset was introduced with richer annotations, including entities, attributes, and relationships, all mapped to knowledge bases so that images become interconnected.

23.- Using Visual Genome, a dense captioning model was developed to provide detailed, contextual descriptions of many regions within an image.

24.- The dense captioning model also enabled detection of small objects by providing context, solving a limitation of earlier object detection methods.

25.- Deep understanding of images requires going beyond labeling objects to also considering relationships, knowledge, and structure.

26.- The journey of computer vision has progressed from early modeling to big data, machine learning, rich descriptions and question-answering.

27.- However, vision is still an unsolved problem - while algorithms can detect objects and describe scenes, they lack human-level story understanding.

28.- Today's algorithms cannot match the depth of human visual understanding, which effortlessly grasps stories, emotions, humor, and intentions from images.

29.- While computer vision has made remarkable strides, there remains much work to be done to approach human-like visual intelligence.

30.- This progress is thanks to the work of many students and collaborators who have helped advance the state of the art in visual understanding.
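
As an illustration of point 14 (backing off to a more general category when uncertain), here is a minimal sketch. It is not the method presented in the talk: it assumes a simple fixed confidence threshold, and the tiny hierarchy, the function names, and the `threshold` parameter are all hypothetical. The idea is to add up the classifier's leaf probabilities along the category tree and then report the most specific category whose total probability clears the threshold.

```python
# Hypothetical toy hierarchy (child -> parent); not taken from ImageNet or WordNet.
PARENT = {
    "husky": "dog",
    "beagle": "dog",
    "tabby": "cat",
    "dog": "animal",
    "cat": "animal",
    "animal": None,  # root
}
ROOT = "animal"


def depth(node):
    """Number of edges between a node and the root."""
    d = 0
    while PARENT[node] is not None:
        node = PARENT[node]
        d += 1
    return d


def node_probabilities(leaf_probs):
    """Add each leaf's probability to the leaf itself and to all its ancestors."""
    totals = {}
    for leaf, p in leaf_probs.items():
        node = leaf
        while node is not None:
            totals[node] = totals.get(node, 0.0) + p
            node = PARENT[node]
    return totals


def predict_with_backoff(leaf_probs, threshold=0.8):
    """Return the deepest category whose accumulated probability >= threshold.

    Assumption: a plain threshold rule, used here only for illustration.
    Falls back to the root if no category is confident enough.
    """
    totals = node_probabilities(leaf_probs)
    confident = [n for n, p in totals.items() if p >= threshold]
    # Prefer the most specific (deepest) node; break ties by higher probability.
    return max(confident, key=lambda n: (depth(n), totals[n]), default=ROOT)
```

With this sketch, a classifier that is sure about the breed answers "husky", one that is torn between "husky" (0.5) and "beagle" (0.45) answers "dog", and one that cannot separate dogs from cats falls back to "animal". Raising `threshold` trades specificity for fewer wrong answers.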

Knowledge Vault built by David Vivancos 2024