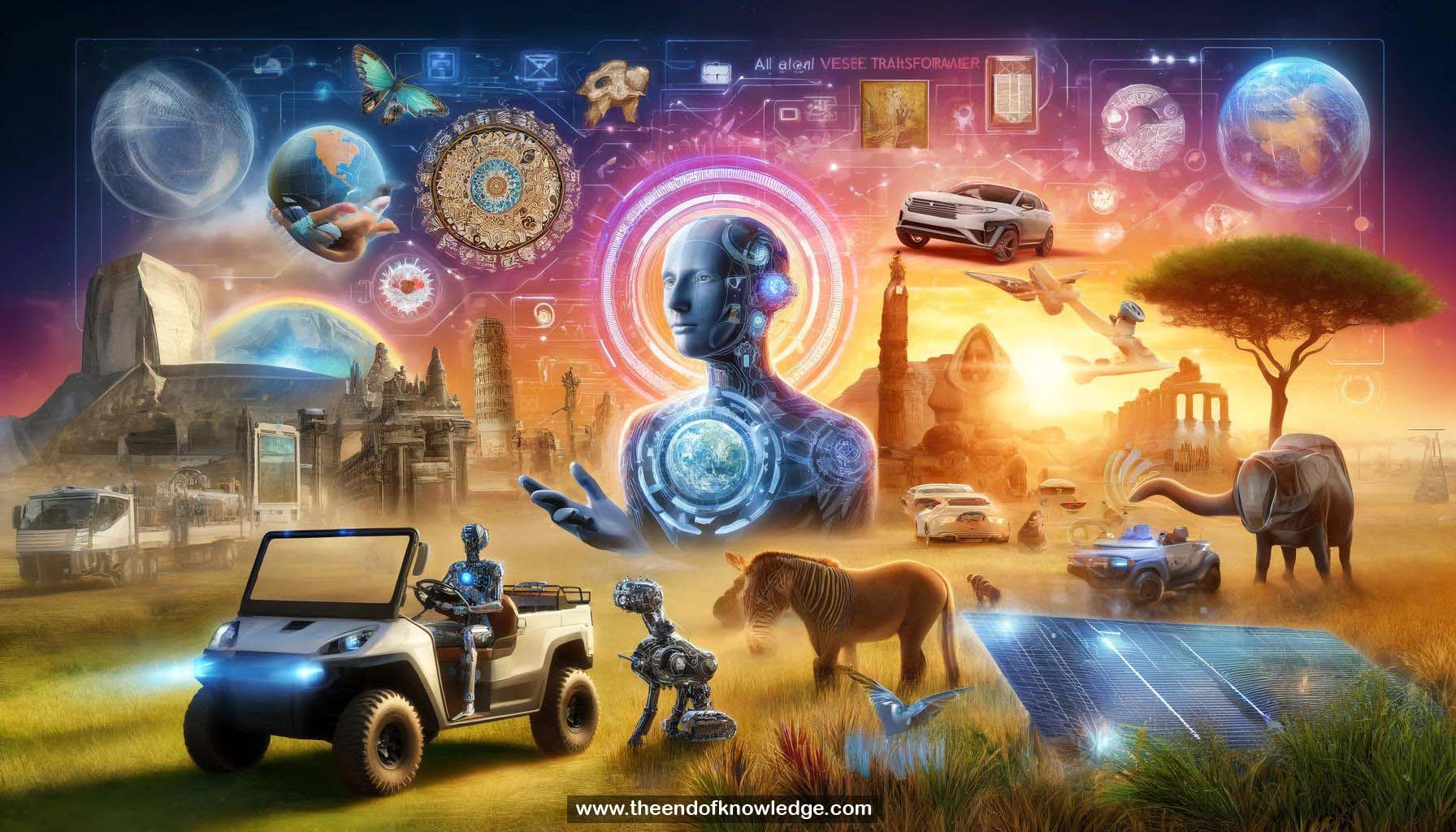

Concept Graph & Summary using Claude 3 Opus | Chat GPT4o | Llama 3:

Summary:

1.- Accessibility features like live captioning in PowerPoint let everyone follow along, even when the speaker has a strong accent.

2.- AI can now identify objects and actions in a video, translate them, generate a text summary, and have an avatar narrate it.

3.- Over the past 50 years, there have been major breakthroughs in speech recognition, language understanding, and machine translation.

4.- Hidden Markov Models provided a probabilistic framework to combine acoustic, phonetic and language knowledge for speech recognition in the 1970s.

5.- In the 1990s, IBM applied similar statistical techniques from speech recognition to pioneer statistical machine translation.

6.- In the 2010s, deep learning replaced Gaussian mixture models in speech recognition, substantially reducing error rates.

7.- Foundation models, massive models trained on huge datasets for many tasks, have become a new AI paradigm.

8.- Microsoft created a unified foundation model for speech in 2017, covering many domains, tasks and languages in one model.

9.- A single transformer model can now do speech recognition, translation, summarization and more in many languages simultaneously.

10.- Despite progress, racial disparities in speech recognition error rates persist, and the field is working to close that gap.

11.- Z-code is a foundation model that uses monolingual and parallel data to improve low-resource language translation.

12.- Text summarization uses an encoder-decoder architecture similar to machine translation to condense documents into short summaries.

13.- Foundation models combining language, vision, speech, etc. are an industry-wide trend across big tech companies.

14.- Three key lessons from speech & language AI are: 1) probabilistic frameworks, 2) foundation models, and 3) encoder-decoder transformers.

15.- Computer vision faces challenges of 2D/3D signals, ambiguity of interpretation, and a diverse range of tasks.

16.- Florence is a computer vision foundation model developed by Microsoft, trained on 1 billion images.

17.- Florence uses a Swin transformer image encoder and a transformer text encoder, combining supervised and self-supervised contrastive learning.

18.- Florence outperforms state-of-the-art models on 43 out of 44 computer vision benchmarks, even in zero-shot settings.

19.- Unlike the 22K labels of ImageNet, Florence can classify and caption images with 400K open-ended concepts.

20.- Florence uses semantic language understanding to enable open-ended visual search beyond predefined classification labels.

21.- Combining Florence and GPT-3 allows generating creative stories about images that go beyond literal description.

22.- Florence enables searching personal photos by visual concepts without relying on captions or user signals.

23.- Florence achieves state-of-the-art results on tasks like human matting and image segmentation, even for non-human objects.

24.- Self-supervised learning allows Florence to pseudo-label data and iteratively improve its own image segmentation.

25.- An encoder-decoder architecture allows Florence to excel at image captioning, including for text within images.

26.- Florence's image captioning goes beyond literal description to infer implicit attributes like player jersey letters.

27.- Florence powers accessibility tools like Seeing AI that help vision-impaired users interpret objects in photos.

28.- Florence achieves super-human performance on benchmarks like text-based image captioning and visual question answering.

29.- Multimodal AI combining vision, language, speech, etc. still has room to advance by learning from real-world embodied experiences.

30.- Because of time constraints, the speaker took a few audience questions and offered to continue the discussion after the session.
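
Points 17, 19 and 20 describe an image encoder and a text encoder trained contrastively, so that classification can work with open-ended text labels rather than a fixed label set. A minimal sketch of that idea follows, under loud assumptions: the three-dimensional vectors are hand-written toy embeddings standing in for real encoder outputs, and the names `zero_shot_classify`, `TEXT_VECS` and `IMAGE_VEC` are hypothetical. This is not Florence's API or training code; it only shows how a shared embedding space lets any text prompt act as a class label.

```python
import math


def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def softmax(scores, temperature=0.1):
    """Turn similarity scores into probabilities (temperature is an assumed value)."""
    scaled = [s / temperature for s in scores]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def zero_shot_classify(image_vec, text_vecs):
    """Pick the text prompt whose embedding is closest to the image embedding."""
    labels = list(text_vecs)
    sims = [cosine(image_vec, text_vecs[label]) for label in labels]
    probs = softmax(sims)
    best = max(range(len(labels)), key=lambda i: probs[i])
    return labels[best], dict(zip(labels, probs))


# Toy embeddings (assumption): a real system would obtain these from
# trained image and text encoders, not from hand-written numbers.
TEXT_VECS = {
    "a photo of a dog": [0.9, 0.1, 0.0],
    "a photo of a cat": [0.1, 0.9, 0.0],
    "a photo of a car": [0.0, 0.1, 0.9],
}
IMAGE_VEC = [0.8, 0.2, 0.1]

label, probs = zero_shot_classify(IMAGE_VEC, TEXT_VECS)
print(label)
```

Because the labels are just text prompts, adding a new concept only requires embedding one more string; no classifier head needs retraining, which is what makes open-ended search over visual concepts possible in this kind of setup.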

Knowledge Vault built by David Vivancos 2024