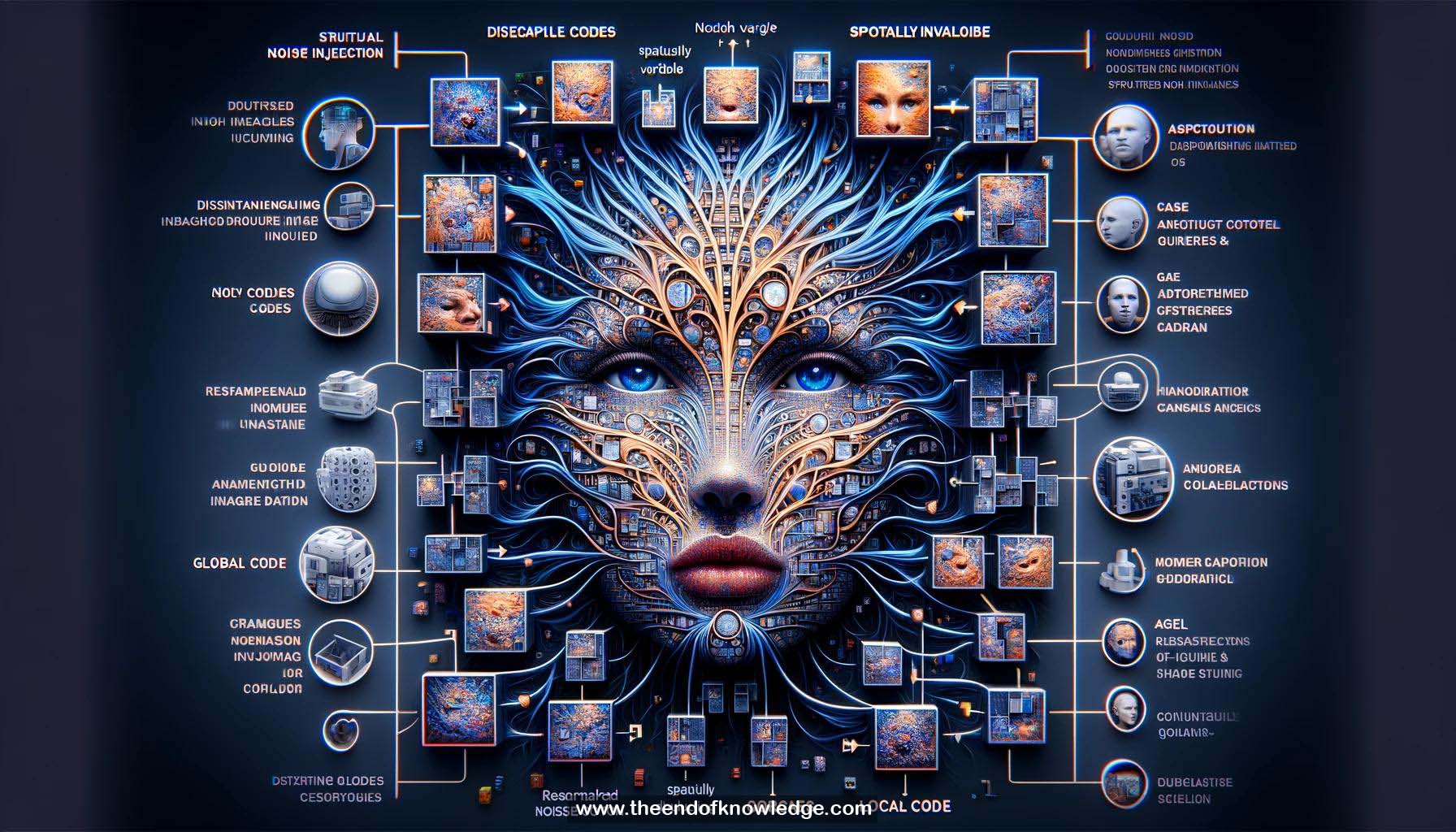

Concept Graph & Summary using Claude 3 Opus | Chat GPT4o | Llama 3:

Summary:

1.- Disentangled image generation through structured noise injection enables editing of randomly generated images.

2.- Generative networks produce realistic images but offer little control for editing them.

3.- Goal: Restrict influence of noise code entries to specific image regions, separate global/stylistic details from local details.

4.- Two ways of using input noise codes: direct mapping (DCGAN) and instance normalization parameter computation (style-based generators).

5.- Spatial correspondence exists between input tensor and final image.

6.- Proposed architecture achieves high disentanglement using two input noise codes: spatially variable and spatially invariable.

7.- Spatially variable codes allow resampling of specific image regions.

8.- Spatially invariable code defines most style and color information.

9.- Spatial disentanglement achieved by structuring spatially variable codes with local, shared, and global codes.

10.- Each cell of spatially variable code has unique local code, shared code with neighbors, and shared global code.

11.- Global and shared codes encode information spanning multiple locations (pose, accessories).

12.- Each cell has independent fully connected layer, guaranteeing local code independence after mapping.

13.- Spatially invariable code contains unique local code, leveraged for expressing stylistic information.

14.- Without spatially invariable code, local codes can change background and style in addition to local details.

15.- Method outperforms state-of-the-art StyleGAN in disentanglement scores.

16.- PathLength measures how much the image changes when interpolating the spatially invariable code.

17.- Linear separability measures inaccuracy of linear attribute classifiers trained on input codes.

18.- Higher PathLength and linear separability scores indicate a more entangled mapping.

19.- Resampling global part of spatially variable code affects pose while maintaining likeness and background style.

20.- Resampling shared part of spatially variable code affects age, accessories, and face dimensions.

21.- Resampling local codes around mouth changes mouth shape.

22.- Resampling local codes in top rows changes hairstyle.

23.- Resampling spatially invariable codes maintains pose, age, facial expressions, and clothing shape while changing background, ethnicity, and sex.

24.- Future work: separating content and style, offering more control in spatially invariable code, determining suitable decomposition of generation process.

25.- Potential to change ethnicity while maintaining other stylistic aspects of face image.

26.- Disentanglement scores (PathLength and linear separability) used to compare methods.

27.- Spatial correspondence enables targeted editing of generated images.

28.- Architecture structured to achieve independence and control over local, shared, and global image aspects.

29.- Combination of spatially variable and invariable codes allows for fine-grained editing capabilities.

30.- Opens up possibilities for future research in complete content-style separation and controlled attribute manipulation in generated images.
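
The structure described in points 9 to 12 and the regional resampling in points 7, 21 and 22 can be illustrated with a minimal NumPy sketch. Everything concrete here is an assumption made for illustration and is not taken from the talk: the 4x4 grid, the code sizes, the choice that each 2x2 block of neighbouring cells shares one code, and the use of a plain linear map per cell. The function names (`sample_codes`, `assemble`, `map_cells`, `resample_local`) are hypothetical.

```python
import numpy as np

# Illustrative sizes only (assumptions, not values from the source).
GRID = 4          # spatially variable code is a GRID x GRID grid of cells
BLOCK = 2         # each BLOCK x BLOCK group of neighbouring cells shares a code
LOCAL_DIM, SHARED_DIM, GLOBAL_DIM, OUT_DIM = 16, 16, 32, 64


def sample_codes(rng):
    """Draw one local code per cell, one shared code per block, one global code."""
    return {
        "local": rng.standard_normal((GRID, GRID, LOCAL_DIM)),
        "shared": rng.standard_normal((GRID // BLOCK, GRID // BLOCK, SHARED_DIM)),
        "global": rng.standard_normal(GLOBAL_DIM),
    }


def assemble(codes):
    """Build the per-cell input: [local | shared with neighbours | global]."""
    shared = np.repeat(np.repeat(codes["shared"], BLOCK, axis=0), BLOCK, axis=1)
    glob = np.broadcast_to(codes["global"], (GRID, GRID, GLOBAL_DIM))
    return np.concatenate([codes["local"], shared, glob], axis=-1)


def make_cell_weights(rng):
    """One independent weight matrix per cell (a stand-in for a per-cell FC layer)."""
    in_dim = LOCAL_DIM + SHARED_DIM + GLOBAL_DIM
    return rng.standard_normal((GRID, GRID, in_dim, OUT_DIM)) / np.sqrt(in_dim)


def map_cells(cell_inputs, weights):
    """Apply each cell's own linear map: out[i, j] = in[i, j] @ W[i, j]."""
    return np.einsum("ijk,ijko->ijo", cell_inputs, weights)


def resample_local(codes, rows, cols, rng):
    """Return a copy of the codes with fresh local codes in the chosen cells."""
    new = {name: value.copy() for name, value in codes.items()}
    region = new["local"][rows, cols]
    new["local"][rows, cols] = rng.standard_normal(region.shape)
    return new


rng = np.random.default_rng(0)
codes = sample_codes(rng)
weights = make_cell_weights(rng)
tensor = map_cells(assemble(codes), weights)

# Resample only the local codes of the top row of cells.
edited = resample_local(codes, slice(0, 1), slice(0, GRID), rng)
tensor_edited = map_cells(assemble(edited), weights)

# Boolean GRID x GRID mask of cells whose mapped output changed.
changed = ~np.all(np.isclose(tensor, tensor_edited), axis=-1)
print(changed)
```

Because every cell has its own mapping and the shared and global parts are left untouched, only the resampled cells change in the mapped tensor. In a full generator this tensor would feed the convolutional layers, whose receptive fields would spread the edit slightly beyond the cell boundaries.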

Knowledge Vault built by David Vivancos 2024