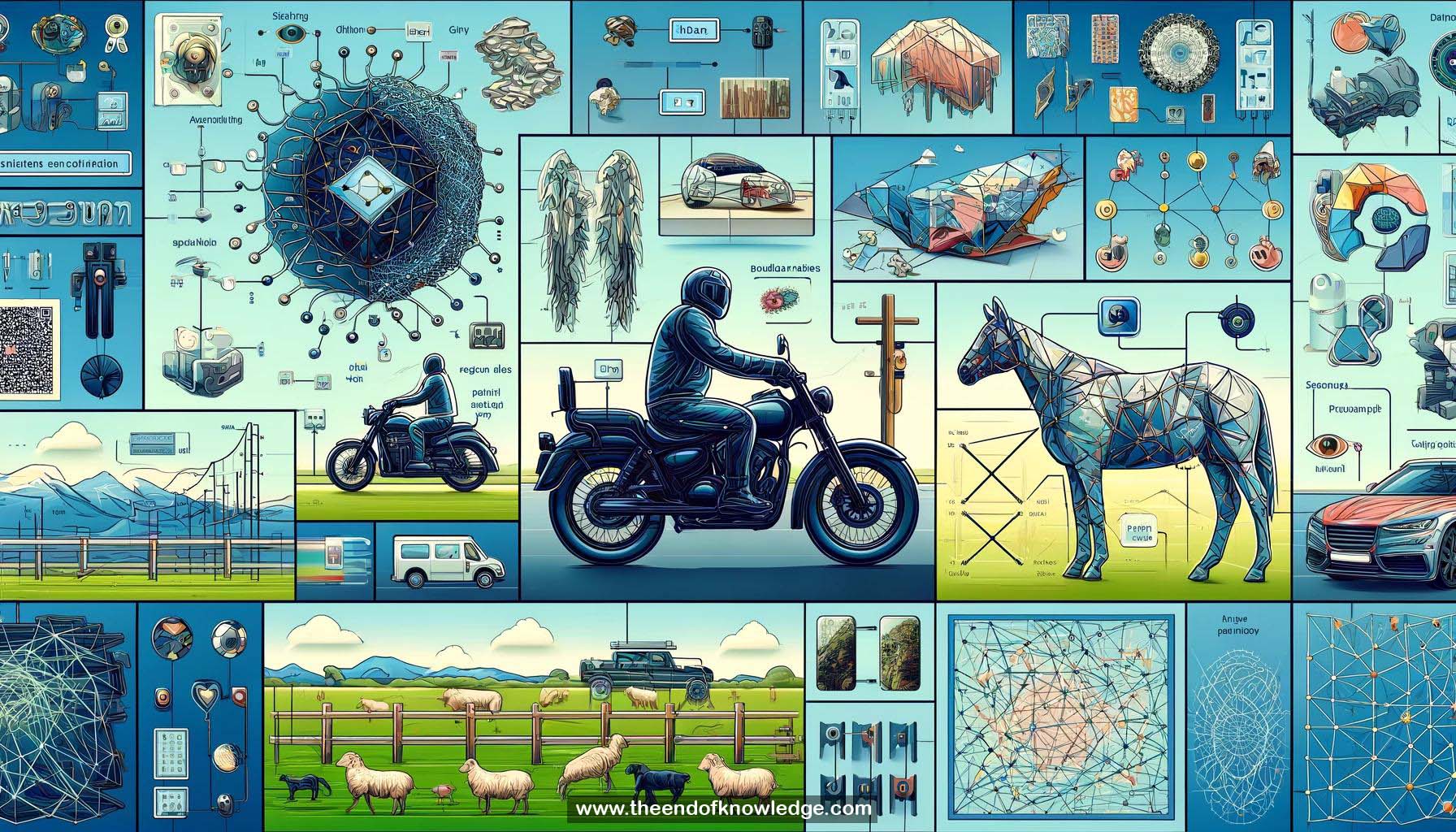

Concept Graph & Summary using Claude 3 Opus | ChatGPT-4o | Llama 3:

Summary:

1.- Fully Convolutional Networks (FCNs): FCNs replace the fully connected layers of classification networks with convolutional layers, so they accept inputs of arbitrary size and produce dense, pixel-wise predictions for image segmentation.

2.- Semantic Segmentation: The task of assigning a class label to every pixel in an image, distinguishing object categories from one another and from the background.

3.- Monocular Depth Estimation: Predicting the depth of each pixel from a single image, useful for 3D scene understanding.

4.- Boundary Prediction: Identifying the edges or boundaries of objects within an image for more accurate segmentation.

5.- End-to-End Learning: Training the network to map input images directly to the desired output labels, optimizing all layers jointly.

6.- R-CNN: Region-based Convolutional Neural Network for object detection; it predicts bounding boxes rather than precise pixel labels.

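7.- Downsampling and Upsampling: Pooling and strided layers reduce the resolution of feature maps; upsampling layers (in FCNs, backwards-strided "deconvolution" layers initialized to bilinear interpolation) then restore the input resolution, which pixel-wise prediction requires.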

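8.- Translation Invariance: Convolution, pooling, and activation functions operate on local regions and depend only on relative spatial coordinates, so shifting the input shifts the output correspondingly; together with the removal of fully connected layers, this lets the network handle inputs of varying sizes.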

9.- Skip Layers: Connections that combine coarse predictions from deep layers with finer predictions from shallower layers, improving detail and accuracy in segmentation results.

10.- Deep Jet: The FCN authors' term for the nonlinear hierarchy of features spanning shallow to deep layers; combining these layers captures both high-resolution local appearance and low-resolution semantic information.

11.- Pooling Layers: Layers that downsample feature maps by summarizing local neighborhoods (e.g., taking the maximum), reducing spatial dimensions while retaining the strongest responses.

12.- Pixel-Wise Loss: A loss function (in FCNs, a per-pixel multinomial logistic loss) computed at every pixel and summed over the image, guiding the network to improve segmentation accuracy.

13.- ImageNet Pretraining: Initial training on a large dataset (ImageNet) before fine-tuning on specific tasks like segmentation.

14.- Patch Sampling: Training on randomly sampled image patches, as in earlier methods; FCNs instead train on whole images, which the authors found converged comparably while being more efficient.

15.- Dense CRF: A fully connected Conditional Random Field that refines segmentation outputs by enforcing spatial and appearance consistency between pixels.

16.- Multiscale Representation: Using image pyramids and multiscale layers to integrate local and global information.

17.- Structured Output Learning: Learning frameworks that capture dependencies between output variables, improving prediction structure.

18.- Caffe Framework: The deep learning framework the FCN authors used to implement and train their convolutional networks.

19.- Weak Supervision: Training techniques that use less precise labels, like bounding boxes or image-level tags, instead of pixel-wise annotations.

20.- PASCAL VOC Dataset: A widely used benchmark dataset for object detection and segmentation.

21.- Inference Speed: Time taken to process an image and produce output predictions, important for real-time applications.

22.- Kepler GPU: An NVIDIA GPU architecture; such GPUs accelerate deep learning computations like training and inference.

23.- Multi-Task Learning: Training a single model on multiple related tasks to improve generalization and efficiency.

24.- HyperColumn: Method that combines features from multiple layers to improve segmentation detail.

25.- ZoomOut: Technique for improving feature representations by considering multiple scales and contexts.

26.- Edge Detection: Identifying edges within images to refine object boundaries in segmentation.

27.- Motion Boundaries: Using temporal changes in video to improve segmentation by identifying moving objects.

28.- Mean Intersection over Union (IoU): A segmentation metric that, for each class, divides the overlap between predicted and ground-truth regions by their union, then averages over classes.

29.- Ground Truth Labels: Accurate annotations used to train and evaluate the performance of segmentation models.

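30.- Dense Upsampling Convolution (DUC): A technique that predicts r × r × L channels at reduced resolution (r the downsampling factor, L the number of classes) and rearranges them into a full-resolution prediction instead of interpolating.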
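
Item 1 above says FCNs replace fully connected layers with convolutions. A minimal sketch of why this works, assuming a single input channel and a single output unit (real networks have many of both): a fully connected layer over a k × k input computes the same value as a k × k convolution without padding, and the convolutional form also runs on larger inputs, producing a map of scores. The helpers `fc_layer` and `conv2d_valid` are hypothetical names for illustration.

```python
# Sketch: a fully connected layer over a k x k input equals a k x k "valid"
# convolution. Single channel and single output unit (simplifying assumption).

def fc_layer(x, weights, bias):
    """Fully connected layer: dot product of the flattened input and weights."""
    if len(x) != len(weights) or len(x[0]) != len(weights[0]):
        raise ValueError("a fully connected layer needs a fixed input size")
    flat_x = [v for row in x for v in row]
    flat_w = [v for row in weights for v in row]
    return sum(a * b for a, b in zip(flat_x, flat_w)) + bias


def conv2d_valid(x, kernel, bias):
    """2D cross-correlation (as in convnets) with stride 1 and no padding."""
    k = len(kernel)
    h, w = len(x), len(x[0])
    out = []
    for i in range(h - k + 1):
        row = []
        for j in range(w - k + 1):
            s = bias
            for di in range(k):
                for dj in range(k):
                    s += x[i + di][j + dj] * kernel[di][dj]
            row.append(s)
        out.append(row)
    return out


weights = [[1, 2], [3, 4]]
bias = 0.5

# Same-size input: both layers produce the same single score.
small = [[1, 0], [0, 1]]
print(fc_layer(small, weights, bias))      # 5.5
print(conv2d_valid(small, weights, bias))  # [[5.5]]

# Larger input: fc_layer would raise, but the convolution slides the same
# weights over every 2 x 2 window and returns a spatial map of scores.
large = [[1, 0, 2], [0, 1, 0], [3, 0, 1]]
print(conv2d_valid(large, weights, bias))  # [[5.5, 7.5], [11.5, 5.5]]
```

In a real FCN the same idea turns each classifier layer into a convolution, so one forward pass over a large image yields a coarse grid of class scores.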
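
Item 7 above mentions upsampling back to the input size. A minimal sketch, assuming one channel, a stride of 2, a 4 × 4 kernel, and a crop of one pixel per border (all illustrative choices): a transposed, or "backwards strided", convolution scatters each input value into the output through a kernel, and filling that kernel with bilinear weights makes it perform bilinear interpolation. FCN initializes its upsampling layers this way and can then learn the weights; `bilinear_kernel` and `conv_transpose2d` are hypothetical helper names.

```python
# Sketch: 2x upsampling with a transposed convolution whose kernel holds
# bilinear-interpolation weights. One channel only (simplifying assumption).

def bilinear_kernel(size):
    """size x size bilinear weights, suited to stride = size // 2."""
    factor = (size + 1) // 2
    center = factor - 1 if size % 2 == 1 else factor - 0.5
    f = [1 - abs(i - center) / factor for i in range(size)]
    return [[fi * fj for fj in f] for fi in f]


def conv_transpose2d(x, kernel, stride, crop):
    """Transposed convolution: each input value adds a scaled copy of the
    kernel to the output at a stride-spaced offset; `crop` pixels are then
    trimmed from every border."""
    k = len(kernel)
    h, w = len(x), len(x[0])
    out_h, out_w = (h - 1) * stride + k, (w - 1) * stride + k
    out = [[0.0] * out_w for _ in range(out_h)]
    for i in range(h):
        for j in range(w):
            for di in range(k):
                for dj in range(k):
                    out[i * stride + di][j * stride + dj] += x[i][j] * kernel[di][dj]
    return [row[crop:out_w - crop] for row in out[crop:out_h - crop]]


kernel = bilinear_kernel(4)
print(kernel[0])  # [0.0625, 0.1875, 0.1875, 0.0625]

ones = [[1.0] * 3 for _ in range(3)]
up = conv_transpose2d(ones, kernel, stride=2, crop=1)
print(len(up), len(up[0]))  # 6 6
print(up[2])  # [0.75, 1.0, 1.0, 1.0, 1.0, 0.75]
```

Interior values reproduce the constant input exactly, as bilinear interpolation should; the border values are lower because this sketch pads nothing beyond the edge.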
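
Item 8 above relies on convolution depending only on relative positions. A minimal one-dimensional sketch (the difference filter and signal are arbitrary illustrative values): convolving a shifted signal gives the shifted result of convolving the original, and the same function runs unchanged on a signal of any length. Strictly, this property is translation equivariance; it holds exactly away from the borders, and strided pooling preserves it only for shifts that are multiples of the stride.

```python
# Sketch: 1D convolution commutes with shifting (translation equivariance).

def conv1d_valid(x, kernel):
    """1D cross-correlation with stride 1 and no padding."""
    k = len(kernel)
    return [sum(x[i + t] * kernel[t] for t in range(k)) for i in range(len(x) - k + 1)]


def shift_right(x, n):
    """Shift a sequence right by n positions, filling with zeros."""
    return [0] * n + x[:len(x) - n]


signal = [0, 1, 3, 0, 0, 0, 0]
kernel = [1, -1]  # simple difference filter

out = conv1d_valid(signal, kernel)
out_of_shifted = conv1d_valid(shift_right(signal, 2), kernel)

print(out)                                    # [-1, -2, 3, 0, 0, 0]
print(out_of_shifted == shift_right(out, 2))  # True

# The same weights apply to an input of a different length.
print(len(conv1d_valid([0] * 20, kernel)))    # 19
```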
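
Items 9 and 10 above describe combining coarse, deep predictions with finer, shallow ones. A minimal sketch in the spirit of FCN's skip architecture, assuming a single class-score channel, a coarse map at half the resolution of the fine map, and nearest-neighbor upsampling as a stand-in for the learned 2× upsampling layer FCN actually uses: the coarse scores are upsampled and added elementwise to the fine scores. `upsample2x_nearest` and `fuse_skip` are hypothetical names.

```python
# Sketch of a skip connection: upsample coarse scores 2x and add finer scores.
# Nearest-neighbor upsampling stands in for a learned deconvolution (assumption).

def upsample2x_nearest(score):
    """Repeat every value into a 2 x 2 block."""
    out = []
    for row in score:
        wide = [v for v in row for _ in range(2)]
        out.append(wide)
        out.append(list(wide))
    return out


def fuse_skip(coarse, fine):
    """Elementwise sum of the upsampled coarse map and the fine map."""
    up = upsample2x_nearest(coarse)
    if len(up) != len(fine) or len(up[0]) != len(fine[0]):
        raise ValueError("upsampled coarse map must match the fine map")
    return [[a + b for a, b in zip(row_up, row_fine)]
            for row_up, row_fine in zip(up, fine)]


coarse = [[1.0, 2.0],
          [3.0, 4.0]]             # deep-layer scores (illustrative values)
fine = [[0.5, -0.5, 0.0, 0.0],
        [0.0, 0.0, 0.0, 0.0],
        [0.0, 0.0, 1.0, 0.0],
        [0.0, 0.0, 0.0, -1.0]]    # shallow-layer scores (illustrative values)

for row in fuse_skip(coarse, fine):
    print(row)
```

The coarse map sets the overall class evidence in blocks, and the fine map adjusts individual pixels inside each block, which is how skips sharpen boundaries.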
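
Item 11 above describes pooling. A minimal sketch of 2 × 2 max pooling with stride 2, a common configuration (the feature-map values are arbitrary): each output keeps the largest value in its window, halving each spatial dimension. `max_pool2d` is a hypothetical helper name.

```python
# Sketch: 2 x 2 max pooling with stride 2 on a single-channel feature map.

def max_pool2d(x, size=2, stride=2):
    """Keep the maximum of each size x size window, stepping by `stride`."""
    h, w = len(x), len(x[0])
    return [[max(x[i + di][j + dj] for di in range(size) for dj in range(size))
             for j in range(0, w - size + 1, stride)]
            for i in range(0, h - size + 1, stride)]


fmap = [[1, 3, 2, 0],
        [4, 2, 1, 5],
        [0, 1, 7, 2],
        [2, 6, 3, 1]]
print(max_pool2d(fmap))  # [[4, 5], [6, 7]]
```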
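
Items 2 and 12 above describe per-pixel labeling and a per-pixel loss. A minimal sketch, assuming class scores stored as `scores[c][i][j]` and integer ground-truth labels (both layouts are illustrative choices): the loss is the softmax cross-entropy, i.e. multinomial logistic loss, at every pixel, and the segmentation is the per-pixel argmax over classes. The FCN paper writes the loss as a sum over spatial positions; this sketch averages instead, which only rescales it. `pixelwise_cross_entropy` and `predict` are hypothetical names.

```python
import math

# Sketch: per-pixel softmax cross-entropy and per-pixel argmax prediction.

def pixelwise_cross_entropy(scores, labels):
    """Mean softmax cross-entropy over all pixels.
    scores[c][i][j]: unnormalized score of class c at pixel (i, j).
    labels[i][j]: ground-truth class index at pixel (i, j)."""
    num_classes = len(scores)
    h, w = len(labels), len(labels[0])
    total = 0.0
    for i in range(h):
        for j in range(w):
            logits = [scores[c][i][j] for c in range(num_classes)]
            m = max(logits)  # subtract the max for numerical stability
            log_sum = m + math.log(sum(math.exp(z - m) for z in logits))
            total += log_sum - logits[labels[i][j]]
    return total / (h * w)


def predict(scores):
    """Label map: the highest-scoring class at each pixel."""
    num_classes = len(scores)
    h, w = len(scores[0]), len(scores[0][0])
    return [[max(range(num_classes), key=lambda c: scores[c][i][j]) for j in range(w)]
            for i in range(h)]


scores = [[[2.0, 0.0]],   # class 0 scores for a 1 x 2 image
          [[0.0, 2.0]]]   # class 1 scores
labels = [[0, 1]]
print(predict(scores))                                    # [[0, 1]]
print(round(pixelwise_cross_entropy(scores, labels), 4))  # 0.1269
```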
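
Item 28 above describes mean IoU. A minimal sketch, assuming predictions and ground truth are 2D lists of class indices with the same shape: it accumulates a confusion matrix, computes each class's IoU as true positives / (true positives + false positives + false negatives), and averages over classes. Skipping classes absent from both maps is one common convention; the sketch also omits the "ignore" label that PASCAL VOC evaluation excludes. `mean_iou` is a hypothetical helper name.

```python
# Sketch: mean intersection over union from a confusion matrix.

def mean_iou(pred, gt, num_classes):
    """Average per-class IoU over classes that occur in pred or gt."""
    # confusion[t][p] = number of pixels with ground truth t predicted as p
    confusion = [[0] * num_classes for _ in range(num_classes)]
    for pred_row, gt_row in zip(pred, gt):
        for p, t in zip(pred_row, gt_row):
            confusion[t][p] += 1

    ious = []
    for c in range(num_classes):
        tp = confusion[c][c]
        fn = sum(confusion[c]) - tp  # class-c pixels given another label
        fp = sum(confusion[t][c] for t in range(num_classes)) - tp  # others labeled c
        union = tp + fp + fn
        if union > 0:  # skip classes absent from both maps
            ious.append(tp / union)
    return sum(ious) / len(ious)


gt = [[0, 0, 1, 1]]
pred = [[0, 1, 1, 1]]
print(mean_iou(pred, gt, num_classes=3))  # about 0.583 (class 0: 1/2, class 1: 2/3)
```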
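
Item 30 above describes Dense Upsampling Convolution. A minimal sketch of its final rearrangement step, assuming a single class, an upsampling factor r, and a channel ordering in which channel a·r + b supplies the output pixel at offset (a, b) inside each r × r block (the ordering is an illustrative assumption): the network predicts r × r low-resolution maps, and this step interleaves them into one map r times larger. In DUC those channels come from a learned convolution; only the reshaping is shown, and `depth_to_space` is a hypothetical name.

```python
# Sketch: rearrange r*r low-resolution maps into one r-times-larger map.

def depth_to_space(channels, r):
    """channels: r*r maps, each h x w; channel a*r + b fills output pixel
    (i*r + a, j*r + b) for every low-resolution position (i, j)."""
    if len(channels) != r * r:
        raise ValueError("expected r*r channels")
    h, w = len(channels[0]), len(channels[0][0])
    out = [[0] * (w * r) for _ in range(h * r)]
    for a in range(r):
        for b in range(r):
            ch = channels[a * r + b]
            for i in range(h):
                for j in range(w):
                    out[i * r + a][j * r + b] = ch[i][j]
    return out


channels = [[[1, 5]], [[2, 6]], [[3, 7]], [[4, 8]]]  # r*r = 4 maps of size 1 x 2
for row in depth_to_space(channels, r=2):
    print(row)
# [1, 2, 5, 6]
# [3, 4, 7, 8]
```

Unlike interpolation, every output pixel gets its own predicted value, which is the motivation DUC gives for learning the upsampling.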

Knowledge Vault built by David Vivancos 2024