Concept Graph & Summary using Claude 3 Opus | ChatGPT-4o | Llama 3:

Summary:

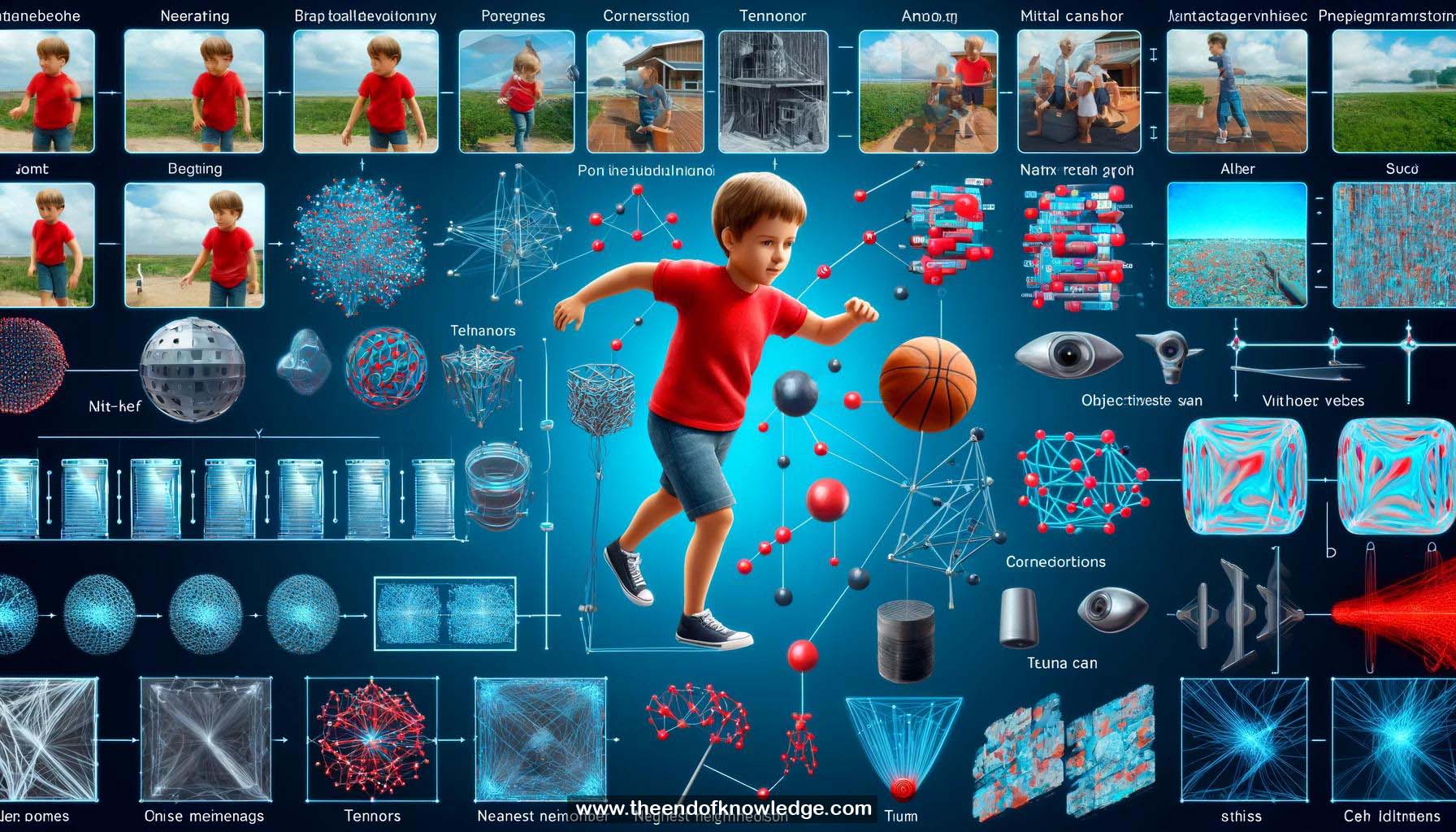

1.- A video is a 2D field that changes over time, and the objects in it have correspondences across frames.

2.- Corresponding positions have similar visual or semantic features and can be separated by arbitrarily large spatial and temporal distances.

3.- For a given position, only a small fraction of positions in other frames can plausibly be its correspondence, and their locations are irregular (sparsity and irregularity).

4.- A novel neural network architecture is proposed to exploit these correspondence properties of videos.

5.- The representation tensor of a video is treated as a point cloud in semantic feature space.

6.- For each point, its k nearest neighbors in other frames are found and treated as potential correspondences; this is done by the Correspondence Proposal (CP) module.

7.- The CP module takes the video representation tensor as input and computes a pairwise feature distance matrix, from which it obtains the indices of the k nearest neighbors.

8.- A correspondence embedding layer concatenates the semantic feature vectors of each point and of each proposed correspondence with their relative spatial-temporal location, processes each pair independently, and applies max pooling over the proposals.

9.- After max pooling selects the most informative responses, the output tensor encodes the dynamic information of the video.

10.- The CP module is integrated into a C2D ResNet architecture.

11.- Ablation studies examine the number and placement of CP modules and the value of k.

12.- The proposed method outperforms previous works on the Kinetics dataset while using fewer parameters.

13.- Among published works, it achieves state-of-the-art results on motion-centric datasets (Something-Something and Gesture) with fewer parameters.

14.- Visualizations show that the CP module proposes reasonable correspondences, for example for a basketball, a metal can, and a thumb.

15.- During max pooling, the CP module filters out wrong correspondence points and keeps the correct ones.

16.- The CP module changes the feature map more strongly in moving areas.

17.- The code for the proposed method is open source.
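The CP module steps in items 6 to 9 can be illustrated with a minimal pure-Python sketch. It is not the released implementation: the function names, the toy data, and the single shared fully connected layer with ReLU (standing in for the per-pair processing) are all hypothetical assumptions. Same-frame points are skipped so that proposals come only from other frames, and the per-pair outputs are max-pooled element-wise over the k proposals.

```python
# Minimal sketch of correspondence proposals + correspondence embedding.
# Pure Python on toy data; names, data and weights are hypothetical.

def sq_dist(a, b):
    """Squared Euclidean distance between two feature vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def propose_correspondences(feats, frame_ids, k):
    """Return, for every point, the indices of its k nearest neighbours in
    feature space, restricted to points that lie in a different frame."""
    proposals = []
    for i, fi in enumerate(feats):
        # Brute-force pairwise distances; same-frame points are skipped,
        # which is equivalent to giving them an infinite distance.
        candidates = sorted(
            (sq_dist(fi, fj), j)
            for j, fj in enumerate(feats)
            if frame_ids[j] != frame_ids[i]
        )
        proposals.append([j for _, j in candidates[:k]])
    return proposals


def linear_relu(x, weights, bias):
    """One fully connected layer followed by ReLU (shared across pairs)."""
    return [max(0.0, sum(w * v for w, v in zip(row, x)) + b)
            for row, b in zip(weights, bias)]


def correspondence_embedding(feats, locs, proposals, weights, bias):
    """Embed every (point, proposal) pair independently, then max-pool the
    results element-wise over the k proposals of each point."""
    out = []
    for i, nbrs in enumerate(proposals):
        pair_outputs = []
        for j in nbrs:
            # Relative spatial-temporal offset (dt, dh, dw) from i to j.
            rel = [lj - li for li, lj in zip(locs[i], locs[j])]
            x = list(feats[i]) + list(feats[j]) + rel  # concatenation
            pair_outputs.append(linear_relu(x, weights, bias))
        out.append([max(col) for col in zip(*pair_outputs)])
    return out


# Hypothetical toy "video": 2 frames x 2 positions, 2-D features.
# The object with feature ~[1, 0] moves one column to the right.
feats = [[1.0, 0.0], [0.0, 1.0], [0.1, 0.9], [0.9, 0.1]]
frame_ids = [0, 0, 1, 1]
locs = [(0, 0, 0), (0, 0, 1), (1, 0, 0), (1, 0, 1)]  # (t, h, w)

# Hypothetical weights for one shared layer: input dim 2 + 2 + 3 = 7.
weights = [[1.0, 0.0, 1.0, 0.0, 0.5, 0.5, 0.5],
           [0.0, 1.0, 0.0, 1.0, -0.5, -0.5, -0.5]]
bias = [0.0, 0.0]

proposals = propose_correspondences(feats, frame_ids, k=1)
print(proposals)  # [[3], [2], [1], [0]]
embedded = correspondence_embedding(feats, locs, proposals, weights, bias)
print(embedded)
```

In this toy example each point's nearest neighbor in the other frame is the matching object, and the relative offset (for point 0, `[1, 0, 1]`) carries the motion into the embedding. The brute-force loop mirrors the pairwise distance matrix of item 7 and is quadratic in the number of points. Because max pooling keeps the strongest response per channel, a wrong proposal only affects the output where it produces a larger activation than the correct one.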

Knowledge Vault built by David Vivancos 2024