
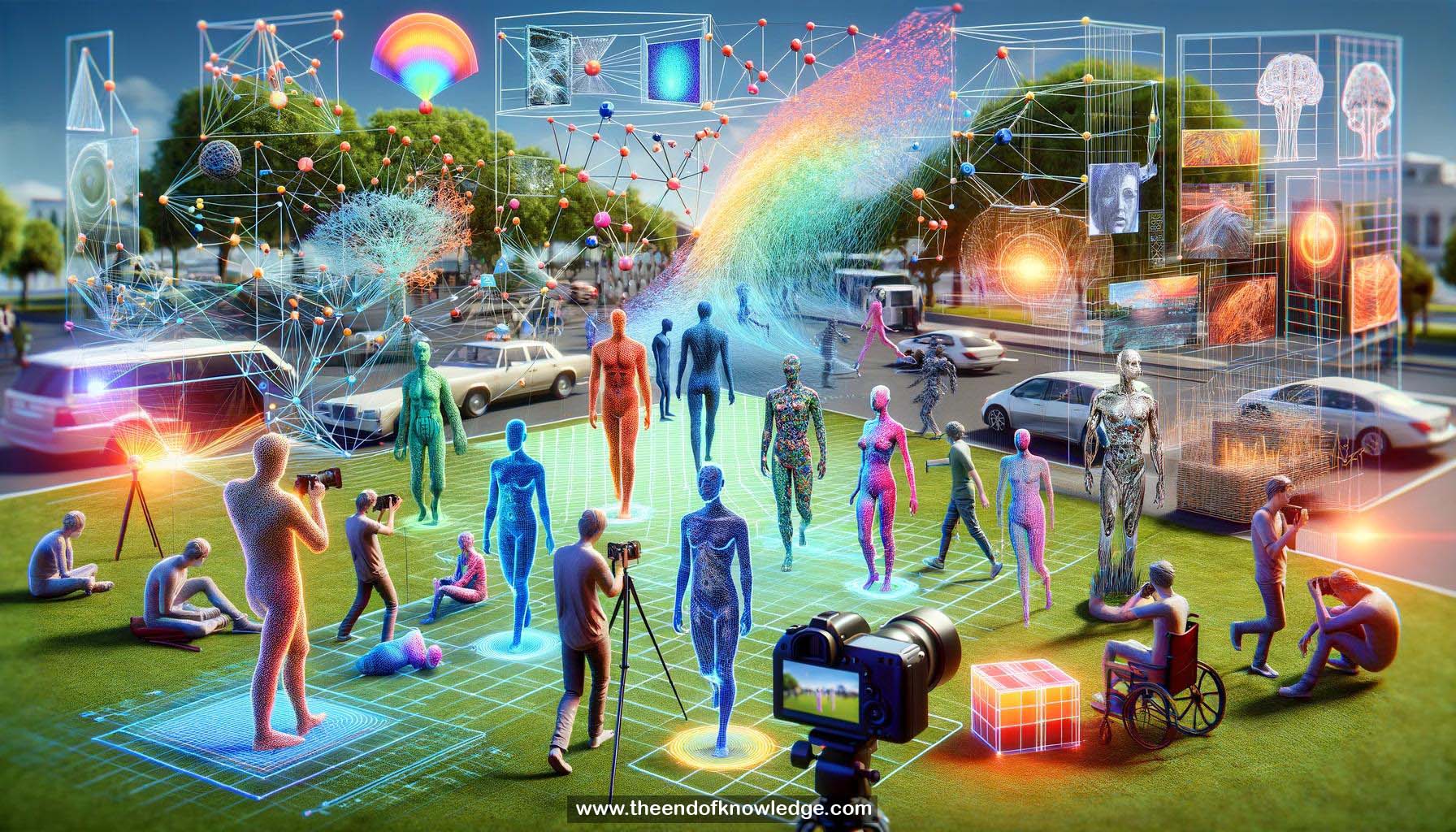

Concept Graph & Summary using Claude 3 Opus | Chat GPT4o | Llama 3:

Summary:

1.- Learning the depth of moving people from a dataset of "frozen" people (the Mannequin Challenge).

2.- Classical stereo algorithms assume a rigid scene, so they fail when objects move.

3.- A data-driven approach trained on the Mannequin Challenge dataset, in which people hold still while the camera moves.

4.- The dataset spans a wide variety of scenes, poses, and numbers of people.

5.- Structure-from-motion and multi-view stereo recover camera poses and depths.

6.- Multi-view stereo depth maps serve as ground truth for training a neural network.

7.- Single-image depth prediction ignores the 3D information available in neighboring frames.

8.- Optical flow between the reference frame and a neighboring frame is converted to depth using the camera poses (motion parallax).

9.- Depths computed on moving people are inaccurate, so human regions are masked out using segmentation.

10.- Full model inputs: the RGB frame, the human segmentation mask, the masked depth from motion parallax, and a confidence map.

11.- Network learns to inpaint masked human depth and refine entire scene depth.

12.- At inference time, the model is applied to videos in which people move.

13.- Outperforms the RGB-only baseline, motion stereo, and single-view methods on the TUM RGB-D dataset.

14.- In qualitative comparisons, the model's depth predictions are the closest to ground truth.

15.- Accurate and coherent depth predictions on regular internet video clips.

16.- Depth predictions enable visual effects such as synthetic defocus and focus pulls.

17.- Synthetic objects can be inserted and correctly occluded by the scene using the depth predictions.

18.- Novel view synthesis using near-field and nearby frames to fill occlusions.

19.- Human regions are effectively inpainted using the depth predictions, even when the camera and people move freely.

20.- Code and dataset released on the project website.
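Item 8 turns optical flow into depth using known camera poses. Below is a minimal NumPy sketch of that two-view triangulation, not the authors' code: it assumes both frames share the intrinsics `K` and that the relative pose (`R`, `t`) maps reference-camera coordinates into the neighbor camera. The helper name `depth_from_flow` is hypothetical. For each pixel, it solves in the least-squares sense for the depth that places the 3D point on the neighbor frame's viewing ray.

```python
import numpy as np


def depth_from_flow(flow, K, R, t):
    """Triangulate per-pixel depth in the reference frame (illustrative sketch).

    flow: (H, W, 2) optical flow from the reference to the neighbor frame, in pixels.
    K:    (3, 3) intrinsics, assumed shared by both frames.
    R, t: (3, 3) rotation and (3,) translation with X_nbr = R @ X_ref + t (assumed convention).
    Returns an (H, W) array of depths along the reference camera's z axis.
    """
    H, W, _ = flow.shape
    u, v = np.meshgrid(np.arange(W, dtype=float), np.arange(H, dtype=float))
    ones = np.ones_like(u)
    p_ref = np.stack([u, v, ones], axis=-1)
    p_nbr = np.stack([u + flow[..., 0], v + flow[..., 1], ones], axis=-1)
    K_inv = np.linalg.inv(K)
    x = p_ref @ K_inv.T  # reference rays with z = 1, so the scale d is the depth
    y = p_nbr @ K_inv.T  # rays through the matched pixels in the neighbor frame
    # The 3D point d * x, moved into the neighbor camera, must lie on ray y:
    # cross(y, d * R x + t) = 0  =>  d * cross(y, R x) = -cross(y, t)
    a = np.cross(y, x @ R.T)
    b = np.cross(y, t)
    # Least-squares solution for d, guarded against zero parallax.
    return -np.sum(a * b, axis=-1) / np.maximum(np.sum(a * a, axis=-1), 1e-12)
```

Pixels with almost no parallax, such as under a pure camera rotation, make `a` nearly zero and the depth unreliable. Those pixels are natural candidates for low values in a confidence map.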
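Item 10 lists a confidence map alongside the parallax depth, but the summary does not say how it is computed. The sketch below uses one common reliability cue as an assumed stand-in: forward-backward optical-flow consistency. The function `flow_consistency_confidence` and its `sigma` parameter are hypothetical. It uses a nearest-pixel lookup so that it depends only on NumPy.

```python
import numpy as np


def flow_consistency_confidence(flow_fw, flow_bw, sigma=1.0):
    """Hypothetical confidence from forward-backward flow agreement.

    flow_fw: (H, W, 2) flow from the reference to the neighbor frame.
    flow_bw: (H, W, 2) flow from the neighbor back to the reference frame.
    Returns (H, W) values in (0, 1]; 1 means the round trip returns exactly.
    """
    H, W, _ = flow_fw.shape
    u, v = np.meshgrid(np.arange(W), np.arange(H))
    # Where each reference pixel lands in the neighbor frame (nearest pixel, clamped).
    tu = np.clip(np.rint(u + flow_fw[..., 0]).astype(int), 0, W - 1)
    tv = np.clip(np.rint(v + flow_fw[..., 1]).astype(int), 0, H - 1)
    # Following the forward flow and then the backward flow should return to the start.
    round_trip = flow_fw + flow_bw[tv, tu]
    err = np.linalg.norm(round_trip, axis=-1)
    return np.exp(-((err / sigma) ** 2))
```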
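Items 9 and 10 describe masking out human regions and stacking four inputs for the full model. Here is a minimal sketch of assembling that input, assuming a channels-last layout with masked pixels set to zero. The channel order, the zero fill value, and the name `build_model_input` are illustrative choices and do not come from the source.

```python
import numpy as np


def build_model_input(rgb, human_mask, parallax_depth, confidence):
    """Stack RGB, mask, masked depth, and confidence into one (H, W, 6) array.

    rgb:            (H, W, 3) float image.
    human_mask:     (H, W) bool, True where a person was segmented.
    parallax_depth: (H, W) depth triangulated from flow and camera poses.
    confidence:     (H, W) per-pixel confidence for parallax_depth.
    Channel order and zero fill are assumptions for illustration.
    """
    # Depth on moving people is unreliable, so it is blanked out and left for the
    # network to inpaint; confidence is zeroed there too (an assumption).
    depth_masked = np.where(human_mask, 0.0, parallax_depth)
    conf_masked = np.where(human_mask, 0.0, confidence)
    mask = human_mask.astype(float)
    return np.concatenate(
        [rgb, mask[..., None], depth_masked[..., None], conf_masked[..., None]],
        axis=-1,
    )
```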
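Item 16 mentions synthetic defocus driven by the predicted depth. The sketch below is rough. It assumes a blur radius proportional to the distance from the focal plane in inverse depth, and it uses a layered box blur in place of a physically based lens model. `synthetic_defocus`, `strength`, and `max_radius` are hypothetical names. Animating `focus_depth` over successive frames shifts the in-focus plane through the scene.

```python
import numpy as np


def box_blur(img, r):
    """Mean filter over a (2r+1) x (2r+1) window, edges padded by replication."""
    if r == 0:
        return img.copy()
    k = 2 * r + 1
    p = np.pad(img, ((r, r), (r, r), (0, 0)), mode="edge")
    # 2D prefix sums with a leading zero row/column for O(1) window sums.
    c = np.pad(p.cumsum(axis=0).cumsum(axis=1), ((1, 0), (1, 0), (0, 0)))
    s = c[k:, k:] - c[:-k, k:] - c[k:, :-k] + c[:-k, :-k]
    return s / (k * k)


def synthetic_defocus(rgb, depth, focus_depth, strength=20.0, max_radius=4):
    """Hypothetical depth-of-field effect; rgb must be a float (H, W, 3) image."""
    # Circle of confusion modeled as proportional to |1/depth - 1/focus_depth|.
    coc = strength * np.abs(1.0 / depth - 1.0 / focus_depth)
    radius = np.clip(np.rint(coc), 0, max_radius).astype(int)
    out = np.empty_like(rgb)
    # Blur the whole image once per radius, then keep the pixels assigned to it.
    for r in range(max_radius + 1):
        sel = radius == r
        if sel.any():
            out[sel] = box_blur(rgb, r)[sel]
    return out
```

The per-pixel selection is a gather-style approximation. It does not let a blurred foreground spread over sharp background at depth edges, which a more careful renderer would handle.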
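Item 17 inserts synthetic objects that the scene can occlude. With a per-pixel depth map, this reduces to a z-buffer depth test: the object is drawn only where it is closer to the camera than the scene. The sketch assumes the object has already been rendered into the same camera, producing `obj_rgb`, `obj_depth`, and `obj_mask`. These names, like `insert_object` itself, are hypothetical.

```python
import numpy as np


def insert_object(rgb, scene_depth, obj_rgb, obj_depth, obj_mask):
    """Composite a pre-rendered object into a frame using a per-pixel depth test.

    rgb, obj_rgb: (H, W, 3) images; scene_depth, obj_depth: (H, W) depths;
    obj_mask: (H, W) bool coverage of the rendered object.
    """
    # The object is visible only where it covers the pixel and is nearer than the scene.
    visible = obj_mask & (obj_depth < scene_depth)
    return np.where(visible[..., None], obj_rgb, rgb)
```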
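Item 18 renders novel views and fills occlusions from nearby frames. The first step, reprojecting a frame into a new camera using its depth map, can be sketched as a forward warp with a z-buffer. Target pixels that receive no source point are the holes that nearby frames would then fill. The sketch assumes shared intrinsics `K`, the pose convention `X_new = R @ X + t`, and nearest-pixel splatting. `forward_warp` is a hypothetical helper, and the hole-filling step is not shown.

```python
import numpy as np


def forward_warp(rgb, depth, K, R, t):
    """Reproject a frame into a new camera; returns (warped image, hole mask)."""
    H, W, _ = rgb.shape
    u, v = np.meshgrid(np.arange(W, dtype=float), np.arange(H, dtype=float))
    pix = np.stack([u, v, np.ones_like(u)], axis=-1).reshape(-1, 3)
    # Back-project each pixel to 3D and move it into the new camera frame.
    X = (pix @ np.linalg.inv(K).T) * depth.reshape(-1, 1)
    Xn = X @ R.T + t
    z = Xn[:, 2]
    front = z > 1e-6  # drop points behind the new camera
    proj = Xn[front] @ K.T
    qu = np.rint(proj[:, 0] / proj[:, 2]).astype(int)
    qv = np.rint(proj[:, 1] / proj[:, 2]).astype(int)
    src = np.nonzero(front)[0]
    inside = (qu >= 0) & (qu < W) & (qv >= 0) & (qv < H)
    src, qu, qv, zs = src[inside], qu[inside], qv[inside], z[src[inside]]
    # z-buffer: sort by target pixel, then by depth, and keep the nearest point per pixel.
    target = qv * W + qu
    order = np.lexsort((zs, target))
    _, first = np.unique(target[order], return_index=True)
    keep = order[first]
    out = np.zeros_like(rgb)
    hole = np.ones((H, W), dtype=bool)
    out[qv[keep], qu[keep]] = rgb.reshape(-1, 3)[src[keep]]
    hole[qv[keep], qu[keep]] = False
    return out, hole
```

Resolving collisions with a sort, instead of relying on repeated fancy-index assignment, keeps the nearest-surface choice deterministic.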

Knowledge Vault built by David Vivancos 2024