Concept Graph & Resume using Claude 3 Opus | GPT-4o | Llama 3:

Resume:

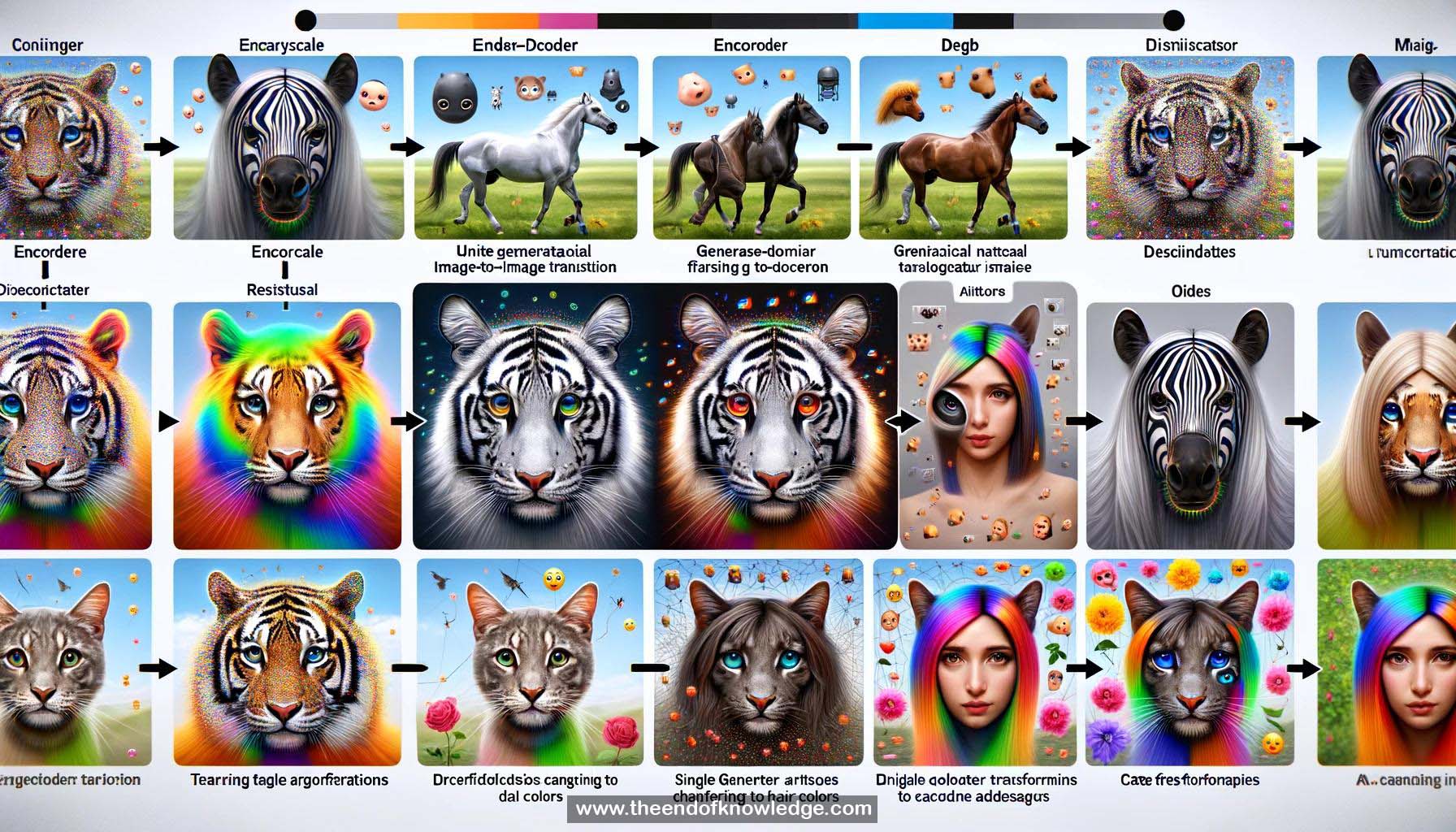

1.- Image-to-image translation: Converting images from source to target domain.

2.- Multi-domain translation: Translating between multiple domains/attributes (e.g. hair colors).

3.- Previous work limitations: Separate model per domain pair (k domains need k(k-1) generators); does not scale.

4.- StarGAN: Unified model for multi-domain translation using a single generator.

5.- Generator inputs: Image + target domain label. The target label is sampled randomly during training, so the generator learns to translate flexibly to any target domain.

6.- Generator architecture: Encoder-decoder with residual blocks.

7.- Adversarial loss: Makes translated images indistinguishable from real ones.

8.- Domain classification loss: An auxiliary classifier on the discriminator, trained on real images, ensures translated images are classified as the target domain.

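9.- Reconstruction loss: Translating the output back with the original domain label must recover the input (cycle consistency, L1 norm); this preserves content and changes only domain-related parts.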

10.- Facial attribute transfer: Translating attributes like hair color, gender and age on the CelebA dataset.

11.- Comparison to baselines: StarGAN outperforms DIAT, CycleGAN, IcGAN in visual quality.

12.- Multi-task learning effect: StarGAN benefits from learning multiple translations in one model.

13.- Mechanical Turk user study: Users preferred StarGAN over baselines for realism, transfer quality, identity preservation.

14.- Facial expression synthesis: Imposing target facial expression on input face image.

15.- Implicit data augmentation with limited data: StarGAN trains on images from all domains at once, which helps when each domain has few images; baselines use only 2 domains at a time.

16.- Facial expression classification error: Lowest for StarGAN, indicating most realistic expressions.

17.- Number of parameters: StarGAN needs just 1 model; baselines need many (DIAT: 7, CycleGAN: 14).

18.- PyTorch implementation available.

19.- Dataset bias: CelebA has mostly Western celebrities; performance drops on Eastern faces & makeup.

20.- Applicability beyond faces: Tested on style transfer (e.g. Van Gogh) but results not shown.
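Items 3 and 17 contrast per-pair models with StarGAN's single generator. A minimal sketch of that count, assuming one dedicated one-directional generator per ordered pair of domains (the k(k-1) figure); `generators_needed` is a hypothetical helper for illustration only:

```python
def generators_needed(num_domains: int, unified: bool) -> int:
    """Number of generators required to cover every translation direction."""
    if unified:
        return 1  # StarGAN: one generator conditioned on the target domain label
    return num_domains * (num_domains - 1)  # one model per ordered domain pair


for k in (2, 5, 8):
    print(f"k={k}: per-pair={generators_needed(k, False)}, StarGAN={generators_needed(k, True)}")
```

The per-pair count grows quadratically with k, while the unified model stays at one regardless of how many domains are added.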
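Items 5 and 6 describe what the generator consumes and its encoder-decoder shape. The pure-Python sketch below only tracks shapes. It assumes the target label is replicated spatially and appended as extra input channels, and it assumes a layer layout recalled from the public implementation (7x7 convolution, two stride-2 downsampling convolutions, six residual blocks, two stride-2 transposed convolutions, final 7x7 convolution with tanh); check the official code before relying on these numbers. `concat_label`, `trace_generator` and all defaults (128-pixel images, 5 label entries) are hypothetical:

```python
def concat_label(image, label):
    """Append one constant H x W plane per label entry to a C x H x W image."""
    height, width = len(image[0]), len(image[0][0])
    planes = [[[float(v)] * width for _ in range(height)] for v in label]
    return image + planes


def conv_out(size, kernel, stride, pad):
    """Spatial size after a convolution."""
    return (size + 2 * pad - kernel) // stride + 1


def deconv_out(size, kernel, stride, pad):
    """Spatial size after a transposed convolution (no output padding)."""
    return (size - 1) * stride - 2 * pad + kernel


def trace_generator(image_size=128, image_channels=3, num_domains=5, num_res_blocks=6):
    """Return (stage, channels, spatial size) after each stage of the assumed generator."""
    channels, size = image_channels + num_domains, image_size
    shapes = [("input", channels, size)]

    channels, size = 64, conv_out(size, 7, 1, 3)
    shapes.append(("conv7x7", channels, size))

    for _ in range(2):  # encoder: halve the resolution, double the channels
        channels, size = channels * 2, conv_out(size, 4, 2, 1)
        shapes.append(("down", channels, size))

    for _ in range(num_res_blocks):  # bottleneck: two 3x3 convs + skip, shape unchanged
        size = conv_out(conv_out(size, 3, 1, 1), 3, 1, 1)
        shapes.append(("residual", channels, size))

    for _ in range(2):  # decoder: double the resolution, halve the channels
        channels, size = channels // 2, deconv_out(size, 4, 2, 1)
        shapes.append(("up", channels, size))

    channels, size = image_channels, conv_out(size, 7, 1, 3)
    shapes.append(("conv7x7+tanh", channels, size))
    return shapes


image = [[[0.0] * 4 for _ in range(4)] for _ in range(3)]  # toy 3 x 4 x 4 image
print(len(concat_label(image, [1, 0, 0, 1, 0])))  # 8 input channels
for stage in trace_generator():
    print(stage)
```

Under these assumptions the bottleneck works at 256 channels and 32x32 resolution, and the output returns to a 3-channel image of the input size, which is what lets the same generator be applied again for reconstruction.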
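Items 7 to 9 name the three loss terms. Written out as we understand the paper's formulation, with x the input image, c the target label, c' the original label, and D_src, D_cls the discriminator's real/fake and domain-classification outputs; the paper reports λ_cls = 1 and λ_rec = 10, and also mentions replacing the adversarial term with a WGAN-GP objective in practice:

```latex
\begin{aligned}
\mathcal{L}_{adv} &= \mathbb{E}_{x}\big[\log D_{src}(x)\big] + \mathbb{E}_{x,c}\big[\log\big(1 - D_{src}(G(x,c))\big)\big] \\
\mathcal{L}^{r}_{cls} &= \mathbb{E}_{x,c'}\big[-\log D_{cls}(c' \mid x)\big] \\
\mathcal{L}^{f}_{cls} &= \mathbb{E}_{x,c}\big[-\log D_{cls}(c \mid G(x,c))\big] \\
\mathcal{L}_{rec} &= \mathbb{E}_{x,c,c'}\big[\lVert x - G(G(x,c), c') \rVert_1\big] \\
\mathcal{L}_{D} &= -\mathcal{L}_{adv} + \lambda_{cls}\,\mathcal{L}^{r}_{cls} \\
\mathcal{L}_{G} &= \mathcal{L}_{adv} + \lambda_{cls}\,\mathcal{L}^{f}_{cls} + \lambda_{rec}\,\mathcal{L}_{rec}
\end{aligned}
```

The discriminator minimizes L_D (maximizing the adversarial term while classifying real images correctly); the generator minimizes L_G, so it must fool D, be classified as the target domain, and remain invertible back to the input.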
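A pure-Python sketch of the generator-side objective on toy flattened vectors. It assumes the multi-label CelebA case, where the domain classifier emits one logit per attribute scored with binary cross-entropy, and takes the adversarial term as a precomputed number. The function names, the averaging over attributes and pixels, and the toy values are illustrative choices, not the official implementation; only the weights λ_cls = 1 and λ_rec = 10 follow the paper:

```python
import math


def bce_with_logits(logits, targets):
    """Mean binary cross-entropy over attributes, computed stably from raw logits."""
    total = 0.0
    for z, t in zip(logits, targets):
        # max(z, 0) - z*t + log(1 + exp(-|z|)) == -[t*log(sig(z)) + (1 - t)*log(1 - sig(z))]
        total += max(z, 0.0) - z * t + math.log1p(math.exp(-abs(z)))
    return total / len(logits)


def l1_reconstruction(x, x_rec):
    """Mean absolute difference between the input and its cycle reconstruction."""
    return sum(abs(a - b) for a, b in zip(x, x_rec)) / len(x)


def generator_objective(adv_loss, cls_logits, target_label, x, x_rec,
                        lambda_cls=1.0, lambda_rec=10.0):
    """L_G = L_adv + lambda_cls * L_cls^f + lambda_rec * L_rec."""
    cls_loss = bce_with_logits(cls_logits, target_label)
    rec_loss = l1_reconstruction(x, x_rec)
    return adv_loss + lambda_cls * cls_loss + lambda_rec * rec_loss


# Toy numbers: 5 attribute logits from D_cls on G(x, c), and a 4-pixel "image".
target = [0, 1, 0, 1, 1]
logits = [-2.0, 1.5, -0.5, 3.0, 0.2]
x = [0.1, -0.4, 0.8, 0.0]
x_rec = [0.15, -0.35, 0.7, 0.05]
print(generator_objective(0.7, logits, target, x, x_rec))
```

The large λ_rec relative to λ_cls reflects how strongly the paper weights content preservation against the domain-classification signal.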

Knowledge Vault built by David Vivancos 2024