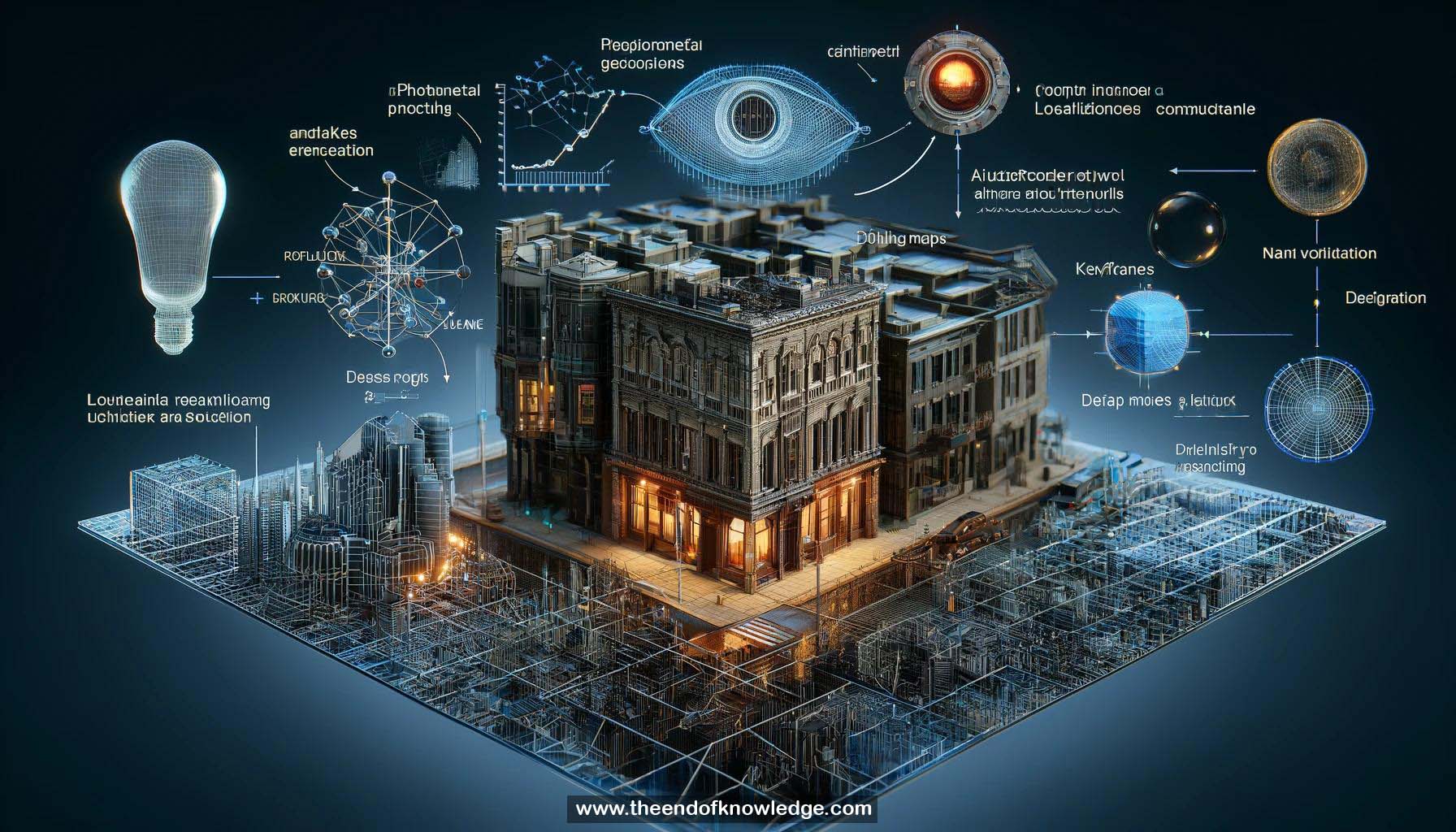

Concept Graph & Summary using Claude 3 Opus | ChatGPT 4o | Llama 3:

Summary:

1.- CodeSLAM: A deep learning and SLAM (Simultaneous Localization and Mapping) system using depth map representations.

2.- Sparse vs. dense SLAM representations: Sparse methods use key points; dense methods use point clouds, TSDFs, voxel grids, or meshes.

3.- Depth maps occupy only a subspace of all possible pixel values and are structurally correlated with the corresponding images.

4.- Autoencoder network: Encodes depth maps conditioned on image features, which improves reconstruction; the network also outputs a depth uncertainty.

5.- Depth from monocular prediction: The network can modulate depth predictions using the code.

6.- Training: Trained end-to-end from scratch on the SceneNet RGB-D dataset, using a Laplacian loss and the Adam optimizer.

7.- Code size: Diminishing returns with increased size; settled on 128 dimensions.

8.- Linear decoder and grayscale images: No gain from using RGB or nonlinear decoders.

9.- Predicted uncertainty: Highlights depth discontinuities in the depth map.

10.- Linear decoding: Depth is a function of code and image, split into a zero-code prediction plus a term that is linear in the code.

11.- Jacobian: Derivative of depth with respect to code is constant for a given image.

12.- Spatially smooth code perturbations: Perturbing a single code entry results in smooth depth changes.

13.- Keyframe-based SLAM: System uses pose and code variables for each keyframe.

14.- Dense bundle adjustment: Pairs keyframes, warps images using depth and relative pose, and minimizes photometric error.

15.- Joint optimization of pose and codes: A novel approach in dense SLAM.

16.- Optimization results: Photometric error decreases, and good reconstructions are achieved on the SceneNet RGB-D dataset.

17.- Speed: Iterations run at 10 Hz, helped by pre-computing the Jacobians, which the linear decoder makes possible.

18.- Real-world testing: Applied to the NYU dataset, jointly optimizing 10 frames.

19.- Visual odometry system: Tested on NYU dataset with a sliding window of 5 keyframes.

20.- Simple system: Only one optimization problem, no special bootstrapping.

21.- Zero-code prior: Provides robustness and handles rotation-only motion, where there is no baseline.

22.- Future directions: Training on real data using self-supervised costs, closer coupling with optimization.

23.- Network improvements: Exploring different architectures and enforcing more structure.

24.- Demo: Co-author Jan demonstrated a preliminary live system.

25.- Generalization: Zero-code prediction worsens on dissimilar datasets; optimization allows adaptation to different scenes.
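
Points 10, 11 and 17 can be illustrated with a small sketch. It assumes the decoder has the form depth = zero-code prediction + Jacobian times code, as the summary describes; the function names, the 3-pixel "image" and the 2-entry code are hypothetical toy values, not the system's actual 128-dimensional code or network outputs. The sketch checks numerically that the derivative of depth with respect to the code is the same at any code value, which is why it can be computed once per keyframe.

```python
def decode_depth(zero_code_depth, jacobian, code):
    """Linear decoder: per-pixel depth = zero-code prediction + Jacobian row . code."""
    return [
        d0 + sum(j * c for j, c in zip(row, code))
        for d0, row in zip(zero_code_depth, jacobian)
    ]


def finite_difference_jacobian(zero_code_depth, jacobian, code, eps=1e-3):
    """Estimate d(depth)/d(code) numerically around a given code."""
    base = decode_depth(zero_code_depth, jacobian, code)
    columns = []
    for k in range(len(code)):
        bumped = list(code)
        bumped[k] += eps
        shifted = decode_depth(zero_code_depth, jacobian, bumped)
        columns.append([(s - b) / eps for s, b in zip(shifted, base)])
    # Transpose so rows index pixels and columns index code entries.
    return [list(row) for row in zip(*columns)]


# Toy values (hypothetical): 3 pixels, 2 code entries.
# In the real system both D0 and J would be produced by the network from the image.
D0 = [2.0, 2.5, 3.0]
J = [[0.5, -0.1], [0.2, 0.3], [0.0, 1.0]]

jac_at_zero = finite_difference_jacobian(D0, J, [0.0, 0.0])
jac_elsewhere = finite_difference_jacobian(D0, J, [1.5, -2.0])
```

Both numerical Jacobians agree with `J` up to rounding, and decoding the zero code returns `D0` unchanged. With a nonlinear decoder the Jacobian would depend on the current code and would have to be recomputed at every iteration.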

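Points 6 and 9 mention a Laplacian loss and a predicted uncertainty that highlights depth discontinuities. A minimal sketch of how such a loss can behave follows. It assumes the loss is the negative log-likelihood of a Laplace distribution (up to a constant) with a predicted per-pixel scale `b`; the summary does not spell out the exact form, so the formula and the function name `laplace_nll` are assumptions for illustration.

```python
import math


def laplace_nll(pred_depth, pred_scale, true_depth):
    """Mean Laplace negative log-likelihood, dropping the constant log(2).

    Per pixel: |d - mu| / b + log(b), where mu is the predicted depth
    and b > 0 is the predicted uncertainty (scale).
    """
    total = 0.0
    for mu, b, d in zip(pred_depth, pred_scale, true_depth):
        total += abs(d - mu) / b + math.log(b)
    return total / len(pred_depth)


# Hypothetical single-pixel cases.
# Large depth error (e.g. at a discontinuity): a larger scale gives a lower loss.
loss_hard_confident = laplace_nll([1.0], [0.5], [3.0])
loss_hard_uncertain = laplace_nll([1.0], [2.0], [3.0])

# Accurate prediction: a smaller scale gives a lower loss.
loss_easy_confident = laplace_nll([3.0], [0.5], [3.0])
loss_easy_uncertain = laplace_nll([3.0], [2.0], [3.0])
```

Under this assumed form, the loss rewards large predicted uncertainty only where the depth is hard to predict, which is consistent with the uncertainty map lighting up at depth discontinuities.
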
Knowledge Vault built by David Vivancos 2024