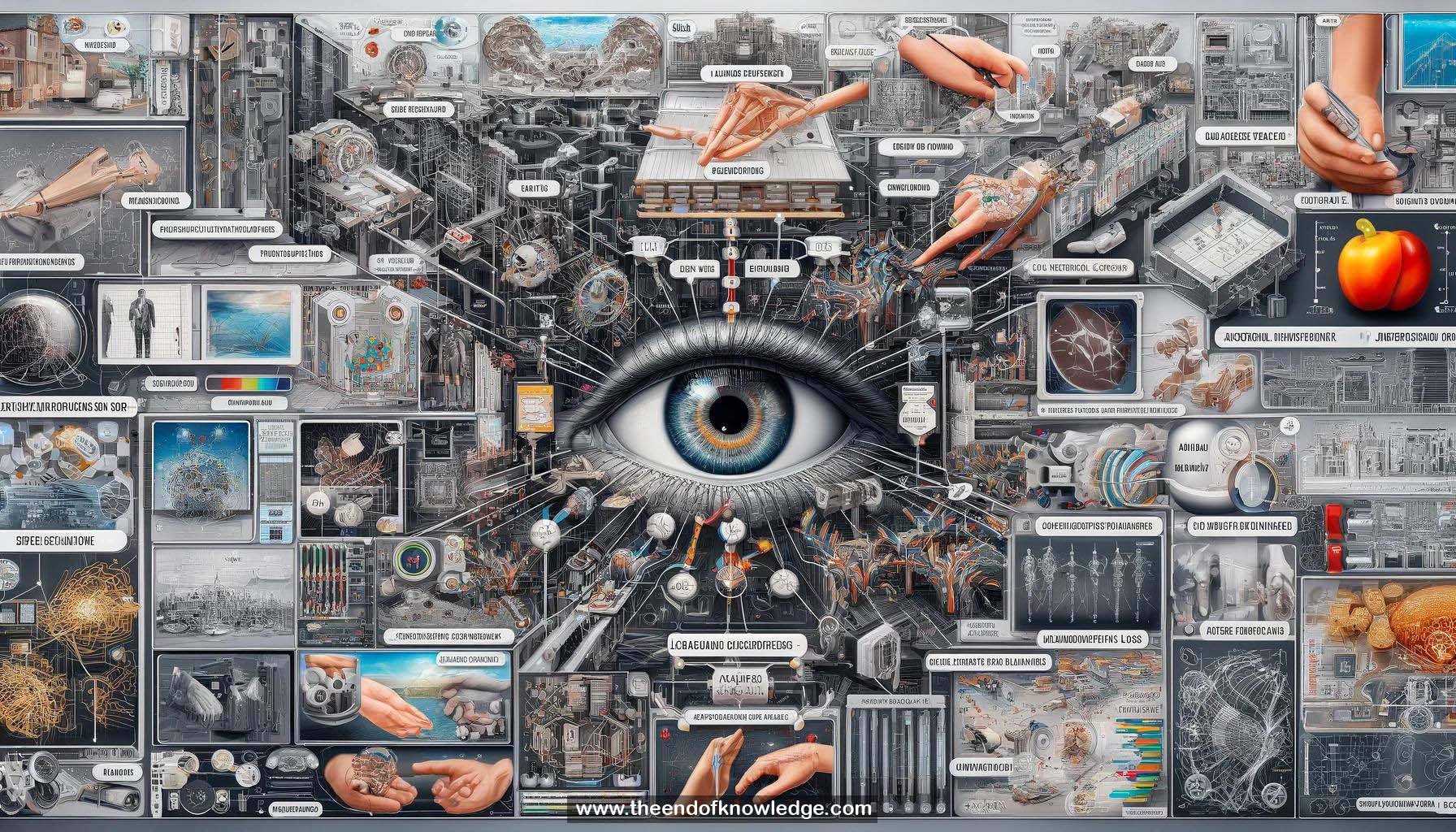

Concept Graph & Summary using Claude 3 Opus | Chat GPT4o | Llama 3:

Summary:

1.- SimGAN: A data-driven approach to bridge the distribution gap between synthetic and real images.

2.- Refiner network: A fully convolutional neural network that takes a synthetic image and outputs a refined version of it that looks more realistic.

3.- Discriminator network: A two-class classification network that distinguishes between real and refined images.

4.- Alternating training: The refiner and discriminator networks are updated in turn, each for a number of steps while the other is held fixed, so that the refiner learns to produce realistic images.

5.- Self-regularization loss: Minimizes the distance between each synthetic image and its refined version, so that the refined image keeps the annotation information (e.g., gaze direction) of the original.

6.- Eye gaze estimation: A task where the input is an eye image and the output is the gaze direction.

7.- Hand pose estimation: A task where the input is a depth image of a hand and the output is the locations of the hand joints.

8.- Visual Turing test: Comparing the difficulty of distinguishing between synthetic vs. real and refined vs. real images.

9.- Discriminator loss: A two-class cross-entropy loss for classifying real and refined images.

10.- Refiner loss: Tries to fool the discriminator by generating refined images that appear real.

11.- Unstable training: Alternating training can be unstable because each network is optimizing against a moving target set by the other.

12.- Local adversarial loss: A fully convolutional discriminator classifies local image patches instead of the whole image, which keeps the refiner's changes local and reduces artifacts.

13.- Buffer of refined images: The discriminator is updated with a history of previously refined images, not only those from the current refiner, which improves training stability.

14.- Quantitative experiments: Evaluating the performance of ML models trained on synthetic, refined, and real images.

15.- Performance improvement: Refined images lead to better performance compared to synthetic images in gaze estimation.

16.- Outperforming limited real data: Models trained on refined images can outperform models trained on a limited amount of real data.

17.- Preserving annotations: SimGAN preserves annotations from synthetic images in the refined images.

18.- No correspondence required: SimGAN does not require correspondence between synthetic and real images.

19.- Reducing artifacts: Local adversarial loss and using a history of refined images help reduce artifacts.

20.- Fully convolutional networks: Both refiner and discriminator networks are fully convolutional.

21.- Synthetic data generation: Simulators can generate a virtually unlimited amount of annotated synthetic data.

22.- Refining synthetic data: Synthetic data is refined by feeding it through the refiner network.

23.- Comparing performance: Performance is compared between models trained on synthetic, refined, and real data.

24.- Improving simulated data utility: SimGAN improves the utility of simulated data for training ML models.

25.- Blog post: Additional information about SimGAN is available on the Apple Machine Learning blog.
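The alternating schedule in item 4 can be sketched as a loop that gives each network its own block of updates while the other is frozen. This is a minimal illustration, not the authors' code: `refiner_step` and `discriminator_step` are hypothetical callbacks standing in for one optimizer update of each network, and the counts `k_g` and `k_d` (and their default values) are illustrative assumptions.

```python
from typing import Callable


def alternate_training(refiner_step: Callable[[], None],
                       discriminator_step: Callable[[], None],
                       iterations: int, k_g: int = 2, k_d: int = 1) -> None:
    """Hypothetical schedule: k_g refiner updates (discriminator frozen),
    then k_d discriminator updates (refiner frozen), repeated `iterations` times."""
    for _ in range(iterations):
        for _ in range(k_g):
            refiner_step()
        for _ in range(k_d):
            discriminator_step()


# Usage: record the order of updates instead of training real networks.
log = []
alternate_training(lambda: log.append("R"), lambda: log.append("D"), iterations=2)
print(log)  # ['R', 'R', 'D', 'R', 'R', 'D']
```

Keeping the two step counts separate makes it easy to give one network more updates than the other, which is one knob for the instability described in item 11.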
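The loss terms in items 5, 9, 10 and 12 can be sketched in plain Python as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: each image is a 2-D list of pixel values, the discriminator is assumed to have already produced a 2-D map of probabilities per image (one value per local patch, as in item 12), each value is read as the probability that the patch comes from a refined image, and the self-regularization term uses an L1 distance. The function names and the weight `lam` are hypothetical.

```python
import math

EPS = 1e-12  # keeps log() away from 0


def _bce_sum(prob_map, target):
    """Sum of binary cross-entropy over a 2-D map of patch probabilities.
    Assumed convention: target=1 means 'refined', target=0 means 'real'."""
    total = 0.0
    for row in prob_map:
        for p in row:
            p = min(max(p, EPS), 1.0 - EPS)
            total += -math.log(p) if target == 1 else -math.log(1.0 - p)
    return total


def discriminator_loss(d_refined_maps, d_real_maps):
    """Local two-class loss: every patch of a refined image should be classified
    as 'refined' (1) and every patch of a real image as 'real' (0)."""
    loss = sum(_bce_sum(m, 1) for m in d_refined_maps)
    loss += sum(_bce_sum(m, 0) for m in d_real_maps)
    return loss


def self_regularization_l1(refined, synthetic):
    """Assumed L1 distance between a refined image and its synthetic source."""
    return sum(abs(r - s)
               for r_row, s_row in zip(refined, synthetic)
               for r, s in zip(r_row, s_row))


def refiner_loss(d_refined_maps, refined_images, synthetic_images, lam):
    """Adversarial term rewards refined patches that the discriminator calls
    'real' (0); the lam-weighted term keeps each output close to its input."""
    adv = sum(_bce_sum(m, 0) for m in d_refined_maps)
    reg = sum(self_regularization_l1(r, s)
              for r, s in zip(refined_images, synthetic_images))
    return adv + lam * reg
```

The discriminator and refiner use the same per-patch cross-entropy with opposite targets for refined images, which is what makes the two objectives adversarial; the self-regularization weight trades realism against preserving the synthetic annotations.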
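The history of refined images in item 13 can be sketched as a fixed-capacity pool. This is a minimal sketch based on our reading of the idea, not the authors' code: the class name `RefinedHistory`, the half-current/half-history batch split, and the random-overwrite policy are assumptions chosen for illustration, and images are represented by arbitrary Python objects.

```python
import random
from typing import Generic, List, Optional, Sequence, TypeVar

T = TypeVar("T")


class RefinedHistory(Generic[T]):
    """Hypothetical fixed-capacity pool of earlier refiner outputs."""

    def __init__(self, capacity: int, seed: Optional[int] = None) -> None:
        if capacity <= 0:
            raise ValueError("capacity must be positive")
        self.capacity = capacity
        self.pool: List[T] = []
        self.rng = random.Random(seed)

    def mix_batch(self, current: Sequence[T]) -> List[T]:
        """Discriminator batch: about half current refined images, half from
        the history. Until the pool is large enough, use the current batch as-is."""
        n_hist = len(current) // 2
        if len(self.pool) < n_hist:
            return list(current)
        return list(current[: len(current) - n_hist]) + self.rng.sample(self.pool, n_hist)

    def update(self, current: Sequence[T]) -> None:
        """Store half of the new refined images; once full, overwrite random slots."""
        for img in self.rng.sample(list(current), len(current) // 2):
            if len(self.pool) < self.capacity:
                self.pool.append(img)
            else:
                self.pool[self.rng.randrange(self.capacity)] = img


# Usage with string stand-ins for refined images.
history = RefinedHistory(capacity=4, seed=0)
batch = ["r1", "r2", "r3", "r4"]
d_batch = history.mix_batch(batch)  # pool is empty, so this is just `batch`
history.update(batch)               # pool now holds 2 of the 4 images
```

Showing the discriminator older refiner outputs keeps it from forgetting artifacts the refiner produced earlier, which is the stabilizing effect item 13 describes.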

Knowledge Vault built by David Vivancos 2024