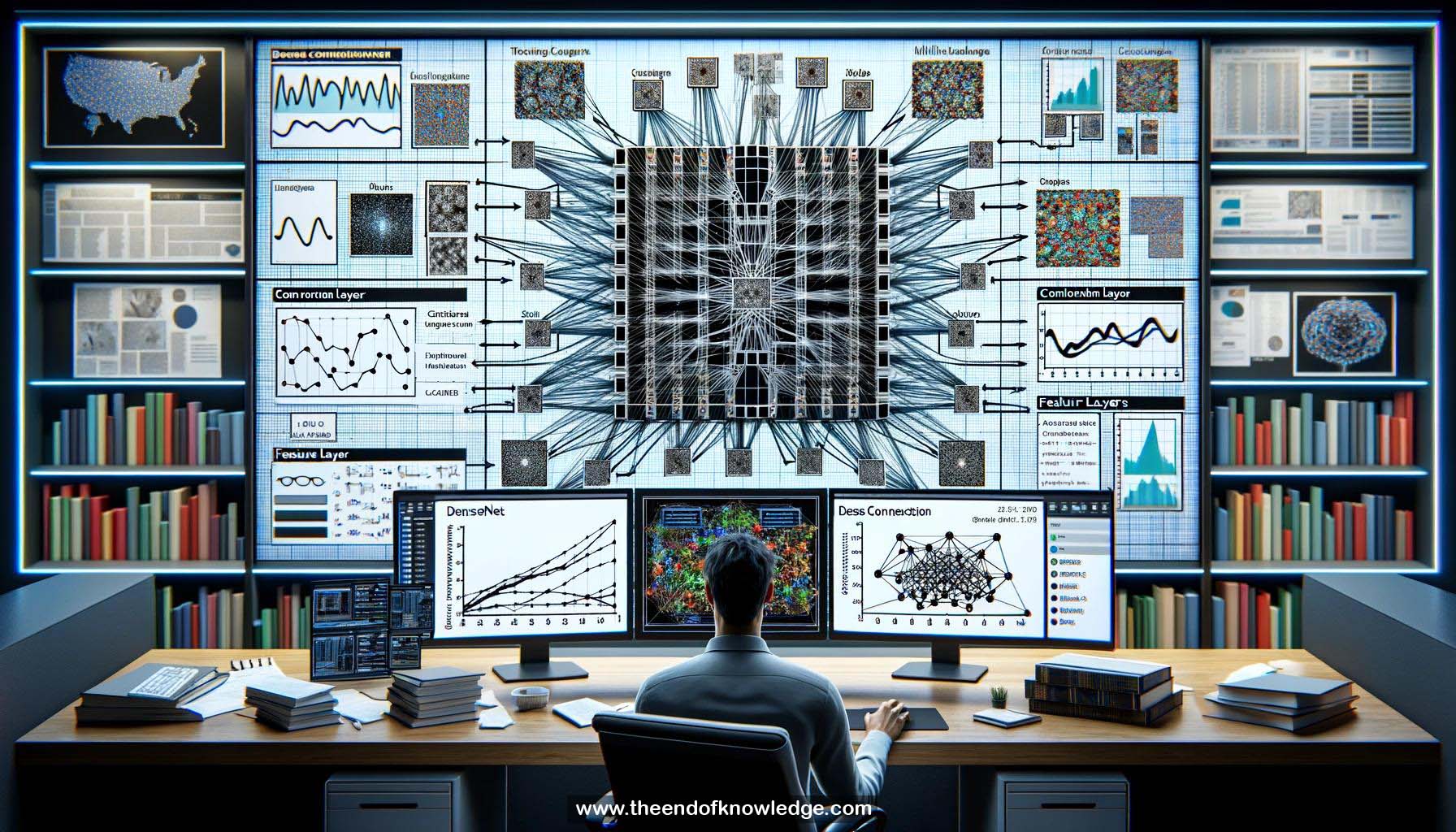

Concept Graph & Summary using Claude 3 Opus | ChatGPT-4o | Llama 3:

Summary:

1.- Dense connectivity: Within a dense block, each layer receives the feature maps of all preceding layers as input, allowing for feature reuse and improved information flow.

2.- Low information bottleneck: Direct connections between layers reduce information loss as data passes through the network.

3.- Compact models: Dense connectivity allows for thinner layers and more compact models compared to traditional architectures.

4.- Computational and parameter efficiency: Dense networks require fewer parameters and less computation per layer.

5.- Growth rate (k): The number of feature maps each layer in a dense block generates. Typically kept small (for example, k = 12 in the CIFAR models of the DenseNet paper).

6.- Feature concatenation: Features from preceding layers are concatenated together as input to subsequent layers in a dense block.

7.- 1x1 convolutions: Used to reduce the number of input feature maps and improve parameter efficiency in deeper layers.

8.- Dense blocks: Dense networks are split into multiple dense blocks, with pooling or convolution layers in between.

9.- Implicit deep supervision: Earlier layers receive more direct supervision from the loss function due to dense connectivity.

10.- Diversified features: Feature maps in dense nets tend to be more diverse as they aggregate information from all preceding layers.

11.- Low complexity features: Dense nets maintain features of varying complexity, allowing classifiers to use both simple and complex features.

12.- Smooth decision boundaries: Using features of varying complexity tends to result in smoother decision boundaries and improved generalization.

13.- Performance on CIFAR-10: Dense nets achieve lower error rates with fewer parameters compared to ResNets.

14.- Performance on CIFAR-100: Similar trends as CIFAR-10, with dense nets outperforming ResNets.

15.- Performance on ImageNet: Dense nets achieve similar accuracy to ResNets with roughly half the parameters and computation.

16.- Reduced overfitting: Dense nets are less prone to overfitting, especially when training data is limited.

17.- State-of-the-art results: At the time of publication, dense nets achieved state-of-the-art performance on CIFAR datasets.

18.- Multi-scale DenseNet: An extension of dense nets that learns features at multiple scales for faster inference.

19.- Dense connections at each scale: Multi-scale dense nets introduce dense connectivity within each scale of features.

20.- Multiple classifiers: Multi-scale dense nets attach classifiers to intermediate layers to enable early exiting.

21.- Confidence thresholding: During inference, easier examples can exit early based on the confidence of intermediate classifiers.

22.- Faster inference: Multi-scale dense nets achieve 2.6x faster inference than ResNets and 1.3x faster than regular dense nets.

23.- Open-source code and models: The authors released their code and pre-trained models on GitHub.

24.- Third-party implementations: Many independent implementations of dense nets became available after publication.

25.- Memory-efficient implementation: The authors published a technical report detailing how to implement dense nets in a more memory-efficient manner.
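
Items 5 to 7 and 20 to 21 above can be made concrete with a small sketch. The code below is illustrative only, not the authors' implementation: the function names, the initial channel count `k0`, and the bottleneck width of `4 * k` (the 1x1 convolution producing four times the growth rate) are assumptions chosen for the example, and taking the maximum class probability as the confidence score is likewise an assumed rule.

```python
from typing import Callable, Iterable, List, Tuple


def dense_block_input_channels(k0: int, k: int, num_layers: int) -> List[int]:
    """Number of input feature maps seen by each layer of one dense block.

    Layer l (0-indexed) receives the k0 block inputs concatenated with the
    k feature maps produced by each of the l preceding layers.
    """
    return [k0 + k * l for l in range(num_layers)]


def conv_weights(c_in: int, c_out: int, kernel: int) -> int:
    """Weight count of a kernel x kernel convolution (biases ignored)."""
    return c_in * c_out * kernel * kernel


def dense_block_weights(k0: int, k: int, num_layers: int,
                        bottleneck: bool = False, width: int = 4) -> int:
    """Total convolution weights in one dense block.

    Without a bottleneck, each layer is a single 3x3 convolution.
    With a bottleneck, a 1x1 convolution first reduces the concatenated
    input to width * k feature maps (width = 4 is an assumption here).
    """
    total = 0
    for c_in in dense_block_input_channels(k0, k, num_layers):
        if bottleneck:
            mid = width * k
            total += conv_weights(c_in, mid, 1) + conv_weights(mid, k, 3)
        else:
            total += conv_weights(c_in, k, 3)
    return total


def early_exit(classifiers: Iterable[Callable[[], List[float]]],
               threshold: float) -> Tuple[int, int]:
    """Run classifiers from shallowest to deepest and stop at the first
    one whose top class probability reaches the threshold.

    Each classifier is a zero-argument callable so that deeper ones are
    only evaluated when needed. If none is confident enough, the deepest
    classifier's prediction is used. Returns (exit_index, predicted_class).
    """
    exit_index, probs = -1, []
    for exit_index, classify in enumerate(classifiers):
        probs = classify()
        if max(probs) >= threshold:
            break
    if exit_index < 0:
        raise ValueError("at least one classifier is required")
    return exit_index, probs.index(max(probs))


if __name__ == "__main__":
    # Hypothetical block: 16 input maps, growth rate 12.
    print(dense_block_input_channels(16, 12, 6))
    print(dense_block_weights(16, 12, 32))
    print(dense_block_weights(16, 12, 32, bottleneck=True))
    # Hypothetical softmax outputs of three intermediate classifiers.
    exits = [lambda: [0.6, 0.4], lambda: [0.1, 0.9], lambda: [0.5, 0.5]]
    print(early_exit(exits, threshold=0.8))
```

Under these assumed settings, a bottleneck layer costs `4 * k * c_in + 36 * k * k` weights against `9 * k * c_in` for a plain 3x3 layer, so it only saves parameters once a layer sees more than about `7.2 * k` input maps. This is consistent with the note in item 7 that the 1x1 convolutions help in deeper layers, where the concatenated input has grown large.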

Knowledge Vault built by David Vivancos 2024