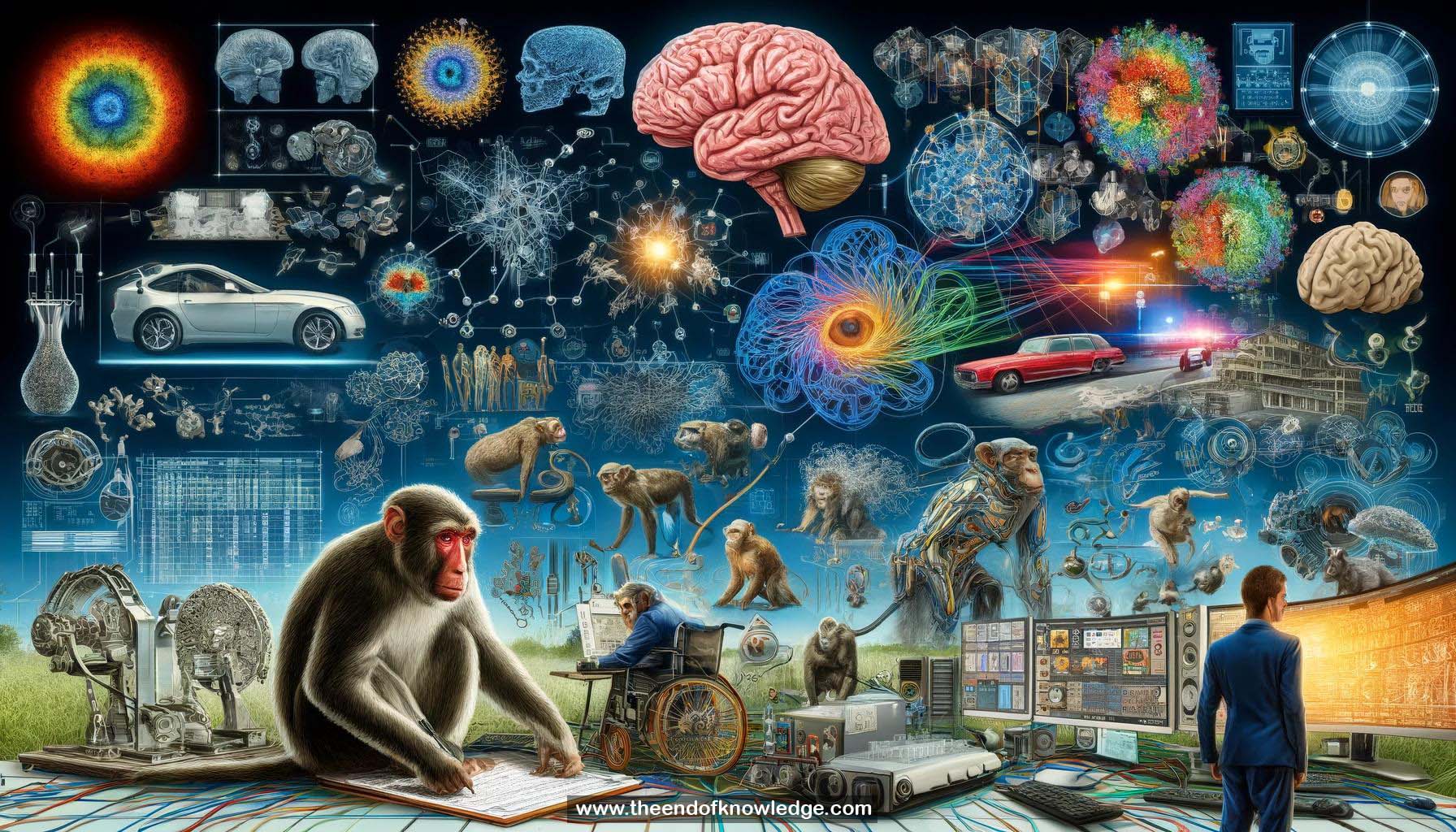

Concept Graph & Summary using Claude 3 Opus | Chat GPT4o | Llama 3:

Summary:

1.- Humans excel at object perception, identifying objects in scenes despite variations in appearance.

2.- Neuroscientists study the brain to understand the neural mechanisms underlying object perception.

3.- Reverse engineering approach: Build models based on brain constraints and compare with neural data.

4.- The ventral visual stream, including areas V1, V2, V4, and IT, is critical for object recognition.

5.- IT cortex neurons respond to complex object features, but their selectivity is not easily interpreted.

6.- Linear classifiers applied to IT neural population data can predict human-level object recognition performance.

7.- Approximately 500 IT features are sufficient to achieve human-level performance on core object recognition tasks.

8.- Deep convolutional neural networks (CNNs) have shown significant progress in fitting neural data from the ventral stream.

9.- Task-optimized CNNs tend to better predict neural responses in higher visual areas compared to shallower models.

10.- Recent very deep CNNs optimized for ImageNet performance do not necessarily better explain IT neural data.

11.- A goal is to build end-to-end models that accurately predict neural responses and match behavior.

12.- Large-scale chronic array recordings enable collecting extensive neural data from awake behaving animals.

13.- Advances in deep learning have led to a convergence between artificial and biological vision models.

14.- Comparing CNN and primate behavioral patterns on a large image set reveals a performance gap.

15.- Images that CNNs fail on ("unsolved") take primates around 30 ms longer to decode accurately than images that CNNs solve.

16.- The performance gap may stem from recurrent and feedback connections, which are present in the brain but absent from feedforward CNNs.

17.- Disrupting neural activity could test the causal role of feedback in processing challenging images.

18.- Open questions remain on how the ventral stream develops and supports task learning.

19.- Neural data can constrain models, but the appropriate level of granularity (e.g., spike timing) is unclear.

20.- Analyzing neural responses to natural images (e.g., MS COCO dataset) can inform more robust vision models.

21.- Deep learning models have also shown promise in predicting responses in the auditory and somatosensory systems.

22.- The brain likely achieves a form of convolution without explicit weight sharing, possibly through learning and development.

23.- Continued collaboration between neuroscience and computer vision could lead to improved models of brain function and engineering applications.

24.- Detailed anatomical measurements (e.g., connectomics) could further inform biologically-constrained neural network architectures.

25.- Integrating deep learning and neuroscience can potentially advance brain-machine interfaces and neurological treatments.
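
Points 6 and 7 describe reading out object identity from a neural population with a linear classifier. The sketch below illustrates that idea on synthetic data only: `simulate_population`, `fit_linear_readout`, and `readout_accuracy` are hypothetical helper names, the "units" are simulated Gaussian responses rather than IT recordings, and the mean-difference rule is just one simple linear classifier, not necessarily the one used in the work summarized here.

```python
import random

def simulate_population(n_trials, n_units, seed=0):
    """Synthetic stand-in for neural data (NOT real IT recordings).

    Each unit gets a small class-dependent mean shift plus unit-variance
    Gaussian noise; labels alternate between +1 and -1.
    """
    rng = random.Random(seed)
    tuning = [rng.gauss(0.0, 0.3) for _ in range(n_units)]
    responses, labels = [], []
    for trial in range(n_trials):
        label = 1 if trial % 2 == 0 else -1
        responses.append([label * t + rng.gauss(0.0, 1.0) for t in tuning])
        labels.append(label)
    return responses, labels

def fit_linear_readout(responses, labels):
    """Fit a mean-difference linear classifier: w = mean(+) - mean(-)."""
    n_units = len(responses[0])
    pos = [r for r, lab in zip(responses, labels) if lab == 1]
    neg = [r for r, lab in zip(responses, labels) if lab == -1]
    mean_pos = [sum(r[i] for r in pos) / len(pos) for i in range(n_units)]
    mean_neg = [sum(r[i] for r in neg) / len(neg) for i in range(n_units)]
    weights = [p - n for p, n in zip(mean_pos, mean_neg)]
    # Place the decision boundary halfway between the two class means.
    bias = -sum(w * (p + n) / 2 for w, p, n in zip(weights, mean_pos, mean_neg))
    return weights, bias

def readout_accuracy(n_units, n_train=200, n_test=200, seed=0):
    """Train on the first n_train trials, report accuracy on the rest."""
    responses, labels = simulate_population(n_train + n_test, n_units, seed)
    weights, bias = fit_linear_readout(responses[:n_train], labels[:n_train])
    correct = 0
    for r, label in zip(responses[n_train:], labels[n_train:]):
        score = sum(w * x for w, x in zip(weights, r)) + bias
        correct += (1 if score > 0 else -1) == label
    return correct / n_test

acc_few = readout_accuracy(n_units=2)
acc_many = readout_accuracy(n_units=200)
print(f"2 units: {acc_few:.2f}   200 units: {acc_many:.2f}")
```

In this toy setting each unit is only weakly informative, yet pooling many of them linearly gives a reliable readout. That is the intuition behind asking how many features a linear readout needs; the unit counts here are chosen for speed and do not reproduce the figure of roughly 500 quoted above.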

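Point 22 contrasts convolution, which reuses one kernel everywhere, with a system that has the same local wiring but no explicit weight sharing. A minimal sketch of that distinction in one dimension follows; `conv1d` and `locally_connected1d` are hypothetical names, and the example only shows that untied local weights can reproduce a convolution if they end up identical. It makes no claim about how the brain actually arrives at such weights.

```python
def conv1d(signal, kernel):
    """Valid 1-D cross-correlation: ONE kernel reused at every position."""
    k = len(kernel)
    return [sum(kernel[j] * signal[i + j] for j in range(k))
            for i in range(len(signal) - k + 1)]

def locally_connected1d(signal, kernels):
    """Same local wiring, but each output position has its OWN kernel."""
    k = len(kernels[0])
    assert len(kernels) == len(signal) - k + 1, "need one kernel per output position"
    return [sum(kernels[i][j] * signal[i + j] for j in range(k))
            for i in range(len(kernels))]

signal = [0.0, 1.0, 3.0, 2.0, 5.0, 4.0]
edge_kernel = [-1.0, 0.0, 1.0]

# Shared weights: 3 free parameters in total.
shared = conv1d(signal, edge_kernel)

# Untied weights: 4 positions x 3 taps = 12 free parameters, which here
# happen to be identical copies of the same kernel.
untied = [list(edge_kernel) for _ in range(len(signal) - len(edge_kernel) + 1)]
local = locally_connected1d(signal, untied)

print(shared)  # [3.0, 1.0, 2.0, 2.0]
print(local)   # [3.0, 1.0, 2.0, 2.0]
```

The untied version has more parameters and nothing forces them to agree, so any convolution-like behaviour would have to emerge from learning or development, as the point suggests.
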
Knowledge Vault built by David Vivancos 2024