
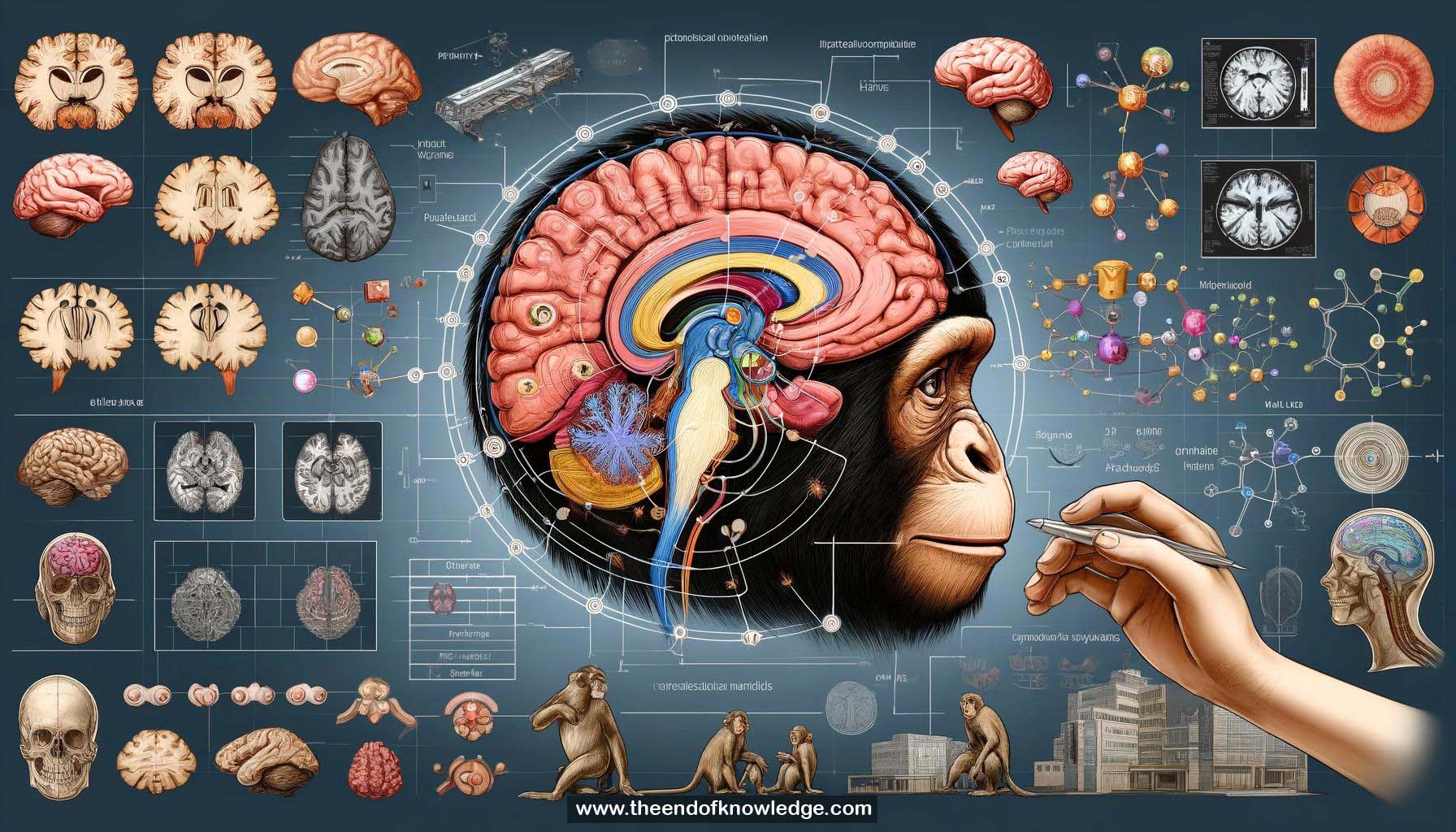

Concept Graph & Summary using Claude 3 Opus | GPT-4o | Llama 3:

Summary:

1.- Human vision is organized hierarchically, with 30-40 distinct visual areas arranged in an interconnected network.

2.- Each visual area represents certain information about the visual world, with neurons acting as basis functions in a high-dimensional space.

3.- Attentional influences occur throughout the visual system via feedforward and feedback connections between layers.

4.- Functional MRI (fMRI) measures slow hemodynamic responses in 3D voxels across the brain, allowing mapping of functional activity.

5.- The early and intermediate stages of the human visual system were delineated using fMRI over 20 years, identifying various functional visual areas.

6.- fMRI data shows rich, complex patterns of activity corresponding to different stimuli, posing a high-dimensional regression problem.

7.- Encoding models using ridge regression can predict fMRI responses to novel stimuli based on previously learned feature spaces.

8.- Decoding models, derived from encoding models, can reconstruct stimuli from brain activity patterns, e.g., decoding movies from visual cortex activity.

9.- Semantic representations in the brain are organized in rich gradients distributed across multiple areas, not just individual punctate regions.

10.- Semantic tuning across the brain dynamically shifts based on task demands, allocating representational resources to task-relevant information.

11.- Deep convolutional neural networks (CNNs) have advanced computer vision, mimicking aspects of biological vision.

12.- CNN layers can be used as regressors to predict brain activity in response to stimuli, outperforming conventional feature-based models.

13.- Early visual areas are best predicted by early CNN layers, while higher-level areas are predicted by later layers.

14.- Probing CNNs can reveal features represented in each visual area, e.g., curvature selectivity in V4, face selectivity in fusiform face area.

15.- Some discrepancies exist between CNNs and human vision, such as idiosyncratic categorization artifacts and unclear emergence of figure-ground organization.

16.- Attentional control in human vision influences processing throughout the hierarchy, while CNNs lack short-term attentional mechanisms.

17.- Weight sharing across retinotopic positions, akin to divisive normalization in biological vision, is a key feature of CNNs.

18.- Understanding of reasoning and complex cognition in mammals lags behind vision research because top-down state variables are difficult to vary experimentally.

19.- Big data approaches are beginning to be applied to study complicated cognitive tasks in humans and animals.

20.- Color information is difficult to recover from V1 voxels in fMRI experiments with natural images due to luminance dominance.

21.- Studying vision using video stimuli is important, as natural vision is essentially video-based.

22.- CNNs trained on static images can still predict brain responses to movie stimuli, possibly due to slow fMRI hemodynamic responses.

23.- Better human brain data measuring neural activity in 3D is needed to fully leverage CNNs trained on movies.

24.- Two communities in computer vision: one favoring abstract, theoretical approaches, and another using biology for inspiration (e.g., Jitendra Malik).

25.- Workshops have been organized to bring together the biologically interested computer vision community and the biological vision community.
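Items 4 and 22 rest on the fact that fMRI measures a slow hemodynamic response rather than neural activity directly. A common way to model this is to convolve a stimulus time course with a hemodynamic response function (HRF). The sketch below assumes a double-gamma HRF with SPM-style shape parameters (peak near 5 s, undershoot near 15 s, ratio 1/6) and a repetition time (TR) of 1 s; the function names are hypothetical and this is not the method used in the talk.

```python
import numpy as np
from math import gamma


def canonical_hrf(tr: float, duration: float = 32.0) -> np.ndarray:
    """Double-gamma HRF sampled every `tr` seconds (assumed SPM-style parameters)."""
    t = np.arange(0.0, duration, tr)
    peak = t ** 5 * np.exp(-t) / gamma(6)          # gamma pdf, shape 6: mode at 5 s
    undershoot = t ** 15 * np.exp(-t) / gamma(16)  # gamma pdf, shape 16: mode at 15 s
    hrf = peak - undershoot / 6.0
    return hrf / hrf.sum()                         # normalize to unit sum


def predicted_bold(stimulus: np.ndarray, tr: float) -> np.ndarray:
    """Causal convolution of a stimulus time course with the HRF, truncated to input length."""
    return np.convolve(stimulus, canonical_hrf(tr))[: len(stimulus)]


# A 10 s stimulus block starting at t = 10 s, sampled at TR = 1 s.
stim = np.zeros(100)
stim[10:20] = 1.0
bold = predicted_bold(stim, tr=1.0)
```

The predicted response peaks several seconds after the block begins, which is why a model of neural activity must be passed through something like this filter before it is compared with voxel time courses.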
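Item 7 describes voxelwise encoding models fit with ridge regression and evaluated on novel stimuli. A minimal sketch follows, using the closed-form ridge solution on synthetic data; the feature matrix, noise level, and fixed `alpha` are illustrative assumptions, not values from the talk.

```python
import numpy as np


def fit_ridge(X: np.ndarray, Y: np.ndarray, alpha: float) -> np.ndarray:
    """Closed-form ridge W = (X^T X + alpha*I)^-1 X^T Y.

    X: (n_samples, n_features) stimulus features; Y: (n_samples, n_voxels).
    Returns W: (n_features, n_voxels), one weight column per voxel.
    """
    n_features = X.shape[1]
    return np.linalg.solve(X.T @ X + alpha * np.eye(n_features), X.T @ Y)


def column_corr(a: np.ndarray, b: np.ndarray) -> np.ndarray:
    """Pearson correlation between matching columns (voxels) of a and b."""
    a = a - a.mean(axis=0)
    b = b - b.mean(axis=0)
    return (a * b).sum(axis=0) / np.sqrt((a ** 2).sum(axis=0) * (b ** 2).sum(axis=0))


# Synthetic features and voxel responses (not real fMRI data).
rng = np.random.default_rng(0)
n_train, n_test, n_features, n_voxels = 400, 100, 50, 20
W_true = rng.standard_normal((n_features, n_voxels))
X_train = rng.standard_normal((n_train, n_features))
X_test = rng.standard_normal((n_test, n_features))  # novel stimuli, unused during fitting
Y_train = X_train @ W_true + 5.0 * rng.standard_normal((n_train, n_voxels))
Y_test = X_test @ W_true + 5.0 * rng.standard_normal((n_test, n_voxels))

W_hat = fit_ridge(X_train, Y_train, alpha=10.0)
r_test = column_corr(Y_test, X_test @ W_hat)  # held-out prediction accuracy per voxel
```

In practice the regularization strength is usually chosen by cross-validation, and the features come from an explicit stimulus representation rather than random numbers.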
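Item 8 notes that decoding models can be derived from encoding models. One simple scheme, assumed here for illustration, is identification: apply the encoding model to every candidate stimulus, then pick the candidate whose predicted voxel pattern correlates best with the measured pattern. The weights `W` below are simulated stand-ins for a fitted encoding model, and `identify` is a hypothetical helper.

```python
import numpy as np


def identify(measured: np.ndarray, candidate_features: np.ndarray, W: np.ndarray) -> int:
    """Index of the candidate whose predicted pattern best matches `measured`.

    measured: (n_voxels,); candidate_features: (n_candidates, n_features);
    W: (n_features, n_voxels) encoding weights.
    """
    predicted = candidate_features @ W  # encoding model applied to every candidate
    p = predicted - predicted.mean(axis=1, keepdims=True)
    m = measured - measured.mean()
    r = (p @ m) / (np.linalg.norm(p, axis=1) * np.linalg.norm(m))  # Pearson r per candidate
    return int(np.argmax(r))


# Synthetic example: one candidate actually evoked the (noisy) measured pattern.
rng = np.random.default_rng(2)
n_features, n_voxels, n_candidates = 50, 200, 100
W = rng.standard_normal((n_features, n_voxels))
candidates = rng.standard_normal((n_candidates, n_features))
true_index = 42
measured = candidates[true_index] @ W + 5.0 * rng.standard_normal(n_voxels)

decoded = identify(measured, candidates, W)
```

Reconstruction methods can extend this idea, for example by combining the best-matching candidates drawn from a large library of natural stimuli instead of returning a single index.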
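Items 12 and 13 describe using CNN layer activations as regressors and finding that early visual areas are best predicted by early layers. The procedure can be sketched as: fit one encoding model per layer, score held-out prediction accuracy for each voxel, and assign each voxel to its best-scoring layer. The three feature matrices are random, hypothetical stand-ins for real CNN activations, and the voxels are simulated so the expected assignment is known in advance.

```python
import numpy as np

rng = np.random.default_rng(1)
n_train, n_test, n_feat = 300, 100, 40
n = n_train + n_test

# Hypothetical stand-ins for activations of three CNN layers (stimuli x units).
layers = [rng.standard_normal((n, n_feat)) for _ in range(3)]

# Simulated voxels: 10 "early-area" voxels driven by layer 0, 10 "late-area" voxels by layer 2.
Y = np.hstack([
    layers[0] @ rng.standard_normal((n_feat, 10)),
    layers[2] @ rng.standard_normal((n_feat, 10)),
]) + 3.0 * rng.standard_normal((n, 20))


def ridge_predict(X_tr, Y_tr, X_te, alpha=10.0):
    """Fit closed-form ridge on the training split and predict the test split."""
    W = np.linalg.solve(X_tr.T @ X_tr + alpha * np.eye(X_tr.shape[1]), X_tr.T @ Y_tr)
    return X_te @ W


def pearson_cols(a, b):
    """Pearson correlation between matching columns of a and b."""
    a = a - a.mean(axis=0)
    b = b - b.mean(axis=0)
    return (a * b).sum(axis=0) / np.sqrt((a ** 2).sum(axis=0) * (b ** 2).sum(axis=0))


# Held-out accuracy for every (layer, voxel) pair: shape (n_layers, n_voxels).
scores = np.array([
    pearson_cols(Y[n_train:], ridge_predict(L[:n_train], Y[:n_train], L[n_train:]))
    for L in layers
])
best_layer = scores.argmax(axis=0)  # layer assigned to each voxel
```

With real data, averaging `best_layer` (or the score profile) within each visual area gives the kind of early-to-late correspondence described in item 13.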

Knowledge Vault built by David Vivancos 2024