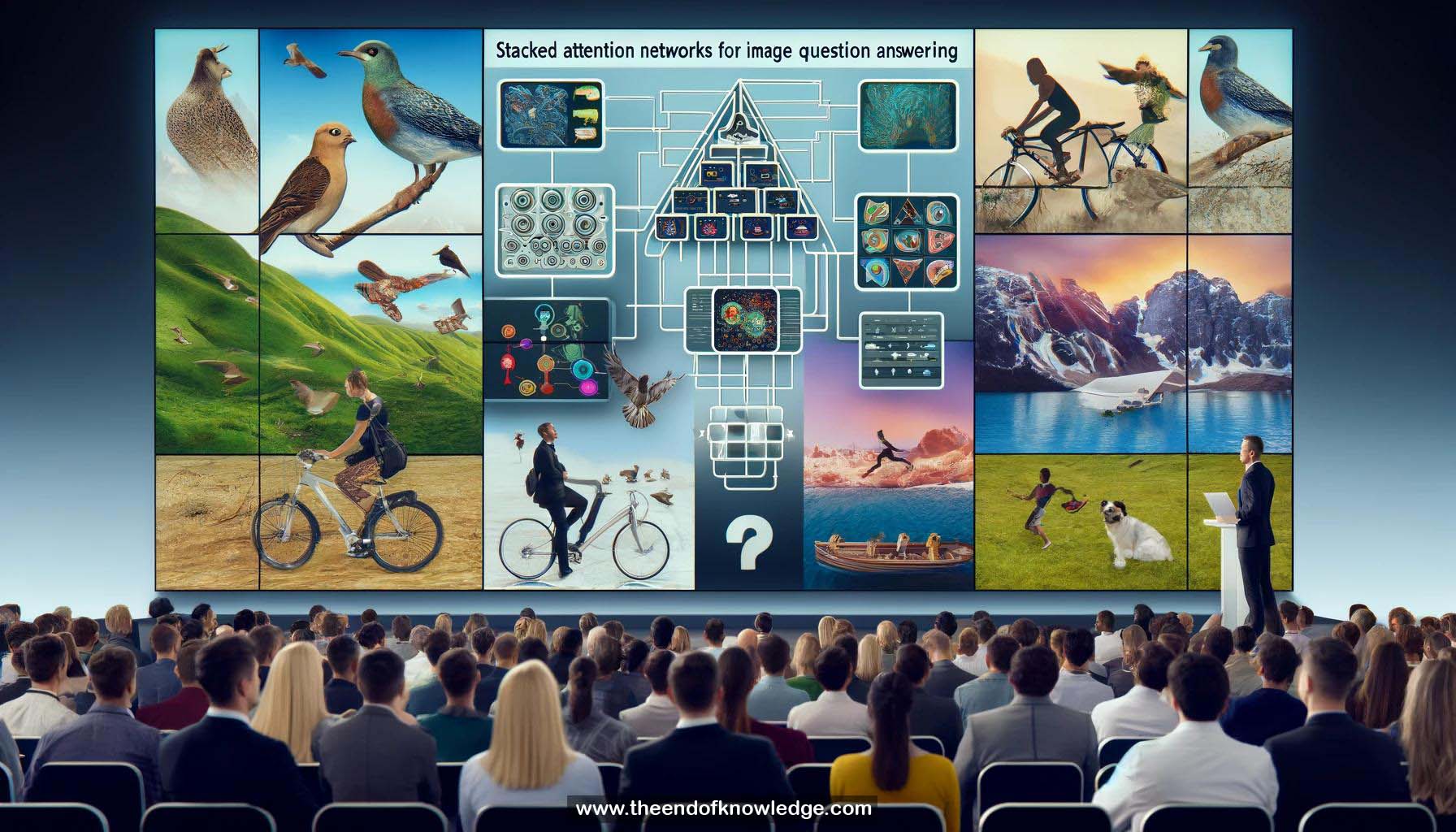

Concept Graph & Summary using Claude 3 Opus | Chat GPT4o | Llama 3:

Summary:

1.- Image Question Answering (IQA): Answering natural language questions based on an image's content.

2.- IQA applications: Helping visually impaired people understand their surroundings.

3.- IQA challenges: Requires understanding relationships between objects and focusing on relevant regions.

4.- Multi-step reasoning: Progressively narrowing focus to infer the answer.

5.- Stacked Attention Network (SAN) model: Four steps: encode the question, encode the image, apply multi-level attention, predict the answer.

6.- Image encoding: Uses the last convolutional layer of a VGG network to capture spatial features.

7.- Question encoding: Using LSTM or CNN to capture semantic and syntactic structure.

8.- First attention layer: Computes correlation between question entities and image regions.

9.- Aggregate weighted image features: Sums image features based on attention.

10.- Multimodal pooling: Combines pruned image and text features.

11.- Second attention layer: Further narrows focus to answer-relevant regions and suppresses noise.

12.- Answer prediction: Treats answering as a 400-way classification over the multimodal features.

13.- Benchmarks: Evaluated on the Visual Question Answering (VQA), COCO-QA, and DAQUAR datasets.

14.- VQA results: Major improvement over baselines, especially for "what is/color" type questions.

15.- Impact of attention layers: Using 2 layers of attention significantly outperforms 1 layer.

16.- LSTM vs CNN for question encoding: Perform similarly.

17.- Qualitative examples: Model learns to focus on relevant regions and ignore irrelevant ones.

18.- Error analysis: Among the examined errors, the model attends to the correct region in 78% of cases; in 42% it attends to the correct region but still predicts the wrong answer.

19.- Error types: Ambiguous answers, label errors.

20.- Example errors: Confusion between similar objects, ground truth label mistakes.

21.- Increased interest in IQA: Many related papers at the conference.

22.- Comparison to captioning: IQA requires understanding subtle details and focused reasoning.

23.- SAN motivation: Provide capacity for progressive, multi-level reasoning.

24.- Visual grounding: SAN enables clearer grounding of reasoning in the image.

25.- Shared code: Available on GitHub.
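
Items 8 to 12 above describe the attention pipeline in words. The following is a minimal, dependency-free Python sketch of that flow, not the authors' shared code. It assumes that each attention layer scores a region with a tanh of projected image and query features, that the pooled image vector is combined with the query by addition, and that biases can be omitted. All function names, dimensions, and random weights are hypothetical and chosen only for illustration.

```python
import math
import random


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def matvec(matrix, vector):
    """Multiply a (rows x cols) matrix by a length-cols vector."""
    return [sum(w * x for w, x in zip(row, vector)) for row in matrix]


def attention_layer(regions, query, w_img, w_qry, w_score):
    """One attention step: score every region against the query, then pool.

    regions: list of m region feature vectors, each of length d
    query:   length-d vector (question vector or previous refined query)
    Returns (attention weights over the m regions, refined query vector).
    """
    q_proj = matvec(w_qry, query)
    scores = []
    for region in regions:
        r_proj = matvec(w_img, region)
        hidden = [math.tanh(r + q) for r, q in zip(r_proj, q_proj)]
        scores.append(sum(w * h for w, h in zip(w_score, hidden)))
    weights = softmax(scores)
    # Attention-weighted sum of the region features (item 9).
    pooled = [sum(p * region[j] for p, region in zip(weights, regions))
              for j in range(len(query))]
    # Assumed multimodal combination: add pooled image vector to the query.
    refined = [v + q for v, q in zip(pooled, query)]
    return weights, refined


def san_forward(regions, question, layers, w_answer):
    """Run the stacked attention layers, then classify over the answer set."""
    query = question
    attention_maps = []
    for w_img, w_qry, w_score in layers:
        weights, query = attention_layer(regions, query, w_img, w_qry, w_score)
        attention_maps.append(weights)
    answer_probs = softmax(matvec(w_answer, query))
    return attention_maps, answer_probs


def random_matrix(rng, rows, cols):
    """Hypothetical stand-in for learned weights."""
    return [[rng.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]


if __name__ == "__main__":
    rng = random.Random(0)
    d, k, m, n_answers = 8, 6, 4, 400  # toy sizes; 400 mirrors item 12
    regions = [[rng.uniform(-1, 1) for _ in range(d)] for _ in range(m)]
    question = [rng.uniform(-1, 1) for _ in range(d)]
    layers = [(random_matrix(rng, k, d), random_matrix(rng, k, d),
               [rng.uniform(-0.5, 0.5) for _ in range(k)]) for _ in range(2)]
    w_answer = random_matrix(rng, n_answers, d)
    maps, probs = san_forward(regions, question, layers, w_answer)
    for i, weights in enumerate(maps, start=1):
        print(f"attention layer {i}:", [round(p, 3) for p in weights])
    print("predicted answer index:", probs.index(max(probs)))
```

With untrained random weights the attention maps carry no meaning; the sketch only shows how the second layer reuses the refined query from the first, which is the mechanism the summary credits for narrowing the focus.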

Knowledge Vault built by David Vivancos 2024