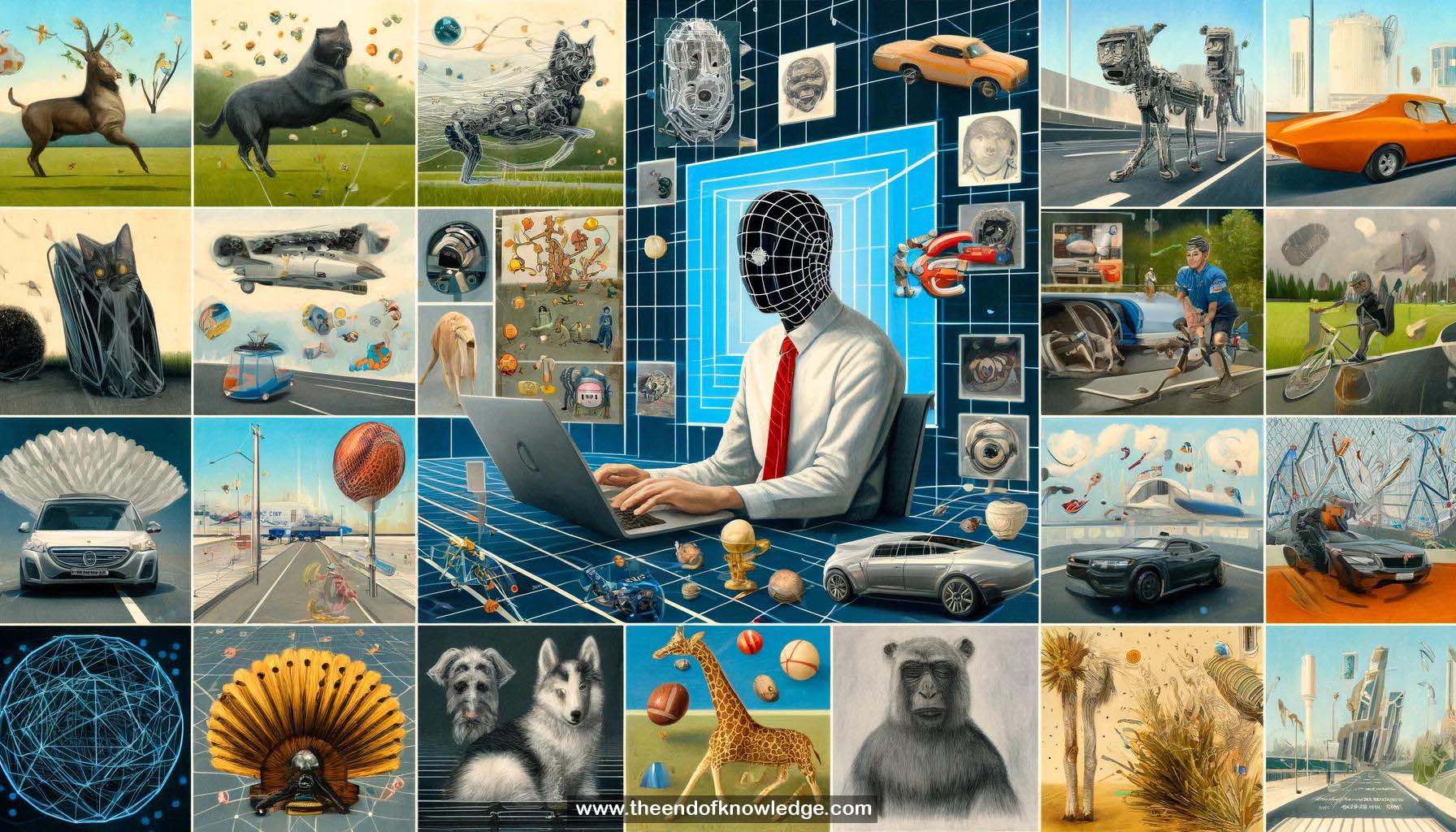

Concept Graph & Summary using Claude 3 Opus | Chat GPT4o | Llama 3:

Summary:

1.- YOLO (You Only Look Once) is a real-time, unified object detection system.

2.- Object detection involves drawing boxes around objects in an image and identifying them.

3.- Previous object detection methods like DPM and R-CNN were accurate but very slow (14-20 seconds per image).

4.- Recent work focused on speeding up R-CNN, with Fast R-CNN (2s/image) and Faster R-CNN (140ms/image, 7 FPS).

5.- YOLO processes images much faster, at 45 FPS (22ms/image), with a small tradeoff in accuracy.

6.- It uses a single neural network to predict detections from full images in one evaluation instead of thousands.

7.- The image is divided into an SxS grid, with each cell predicting B bounding boxes, confidence for those boxes, and C class probabilities.

8.- Bounding box confidence reflects both whether the box contains an object and how well the predicted box fits that object.

9.- The class probability map resembles a coarse segmentation map, giving the probability of each class for the object in each cell.

10.- Multiplying class probabilities and bounding box confidence gives class-specific confidence scores for each box. Low-scoring boxes are thresholded out.

11.- Non-max suppression removes duplicate detections, leaving the final detections for the image.

12.- The fixed-size output tensor allows the full detection pipeline to be expressed and optimized as a single network.

13.- The network predicts all detections simultaneously, incorporating global context about co-occurrence, relative size, and position of objects.

14.- The network is trained end-to-end to predict the full detection tensor from images.

15.- During training, each ground-truth box is assigned to the grid cell that contains its center, and that cell is responsible for predicting the box.

16.- Of the cell's bounding box predictions, the one that best overlaps the ground truth is adjusted toward it, and its confidence is increased.

17.- Confidence is decreased for bounding boxes that don't overlap with any objects.

18.- Class probabilities and box coordinates are not adjusted for cells without associated ground-truth objects.

19.- The network was pretrained on ImageNet and then trained on detection data with SGD and data augmentation.

20.- YOLO performs well on natural images with some mistakes. It generalizes well to artwork.

21.- YOLO outperforms DPM and R-CNN when trained on natural images and tested on artwork.

22.- YOLO was also trained on the larger Microsoft COCO dataset with 80 classes.

23.- The video demonstrates real-time detection on a laptop webcam, identifying objects like dogs, bicycles, plants, ties, etc.

24.- Detection breaks down when the laptop camera is pointed at its own screen, because of the resulting recursion.

25.- YOLO's training, testing, and demo code is open source and available online.

26.- Future work includes combining YOLO with XNOR networks to develop a faster, more efficient version.

27.- The goal is to enable real-time object detection on less powerful hardware, such as CPU-only machines and embedded systems.

28.- YOLO frames object detection as a regression problem, using features from the entire image to predict each bounding box.

29.- This is unlike sliding window and region proposal-based techniques that perform detection by applying a classifier multiple times.

30.- Predicting all bounding boxes simultaneously using features from across the image allows YOLO to learn contextual cues and still be fast.
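Points 7 and 10 can be made concrete with a small sketch. It assumes each box carries four coordinates plus one confidence value, so the output tensor has shape S x S x (B*5 + C). The values S=7, B=2, C=20 are the commonly cited PASCAL VOC configuration and are not stated in this summary. The function names and the 0.2 threshold are hypothetical.

```python
# Assumed configuration (not given in the summary): 7x7 grid, 2 boxes per cell, 20 classes.
S, B, C = 7, 2, 20


def output_tensor_shape(S, B, C):
    """Each cell predicts B boxes (x, y, w, h, confidence) plus C class probabilities."""
    return (S, S, B * 5 + C)


def class_specific_scores(box_confidences, class_probs, threshold=0.2):
    """Multiply every box confidence by every class probability for one cell.

    Returns (box_index, class_index, score) tuples whose score reaches the
    threshold; everything below it is discarded. The threshold is illustrative.
    """
    kept = []
    for b, conf in enumerate(box_confidences):
        for c, prob in enumerate(class_probs):
            score = conf * prob
            if score >= threshold:
                kept.append((b, c, score))
    return kept


# One hypothetical cell with two boxes and, for brevity, only three classes.
cell_scores = class_specific_scores([0.9, 0.1], [0.7, 0.2, 0.1])
# Only box 0 paired with class 0 survives the threshold.
```

Because the score is a product, a box survives only when the network is confident both that an object is present and that it belongs to a particular class.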
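The duplicate removal in point 11 is not spelled out in this summary. A common way to do it is greedy non-max suppression driven by intersection over union (IoU), sketched below for a single class. The corner-coordinate box format, the function names, and the 0.5 overlap threshold are assumptions made for illustration.

```python
def iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def non_max_suppression(detections, iou_threshold=0.5):
    """Greedy NMS over (box, score) pairs belonging to one class.

    Visit boxes from highest to lowest score and keep a box only if it does
    not overlap an already kept box by more than iou_threshold.
    """
    ordered = sorted(detections, key=lambda d: d[1], reverse=True)
    kept = []
    for box, score in ordered:
        if all(iou(box, kept_box) <= iou_threshold for kept_box, _ in kept):
            kept.append((box, score))
    return kept


# Hypothetical detections: the second box is a near-duplicate of the first.
detections = [
    ((10, 10, 50, 50), 0.9),
    ((12, 12, 52, 52), 0.8),
    ((100, 100, 140, 140), 0.7),
]
final = non_max_suppression(detections)
# The near-duplicate is suppressed; the first and third boxes remain.
```

In a multi-class setting this routine would typically be run once per class, so that overlapping objects of different classes do not suppress each other.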
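The training-time assignment in point 15 amounts to finding which grid cell contains a ground-truth box's center. The sketch below assumes pixel corner coordinates and a 7x7 grid. The function name `responsible_cell` and the 448x448 image size in the example are hypothetical choices, not details from this summary.

```python
def responsible_cell(box, image_w, image_h, S=7):
    """Return (row, col) of the grid cell that contains the box center.

    box is (x1, y1, x2, y2) in pixels. Indices are clamped so a center lying
    exactly on the right or bottom image edge still maps to a valid cell.
    """
    cx = (box[0] + box[2]) / 2.0
    cy = (box[1] + box[3]) / 2.0
    col = min(int(cx / image_w * S), S - 1)
    row = min(int(cy / image_h * S), S - 1)
    return row, col


# Hypothetical ground-truth box in a 448x448 image; its center is (150, 250).
cell = responsible_cell((100, 200, 200, 300), 448, 448)
```

Only this one cell is trained to predict the box, which is why cells without an assigned object receive no updates to their class probabilities or box coordinates (point 18).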

Knowledge Vault built by David Vivancos 2024