Concept Graph & Summary using Claude 3 Opus | Chat GPT4o | Llama 3:

Summary:

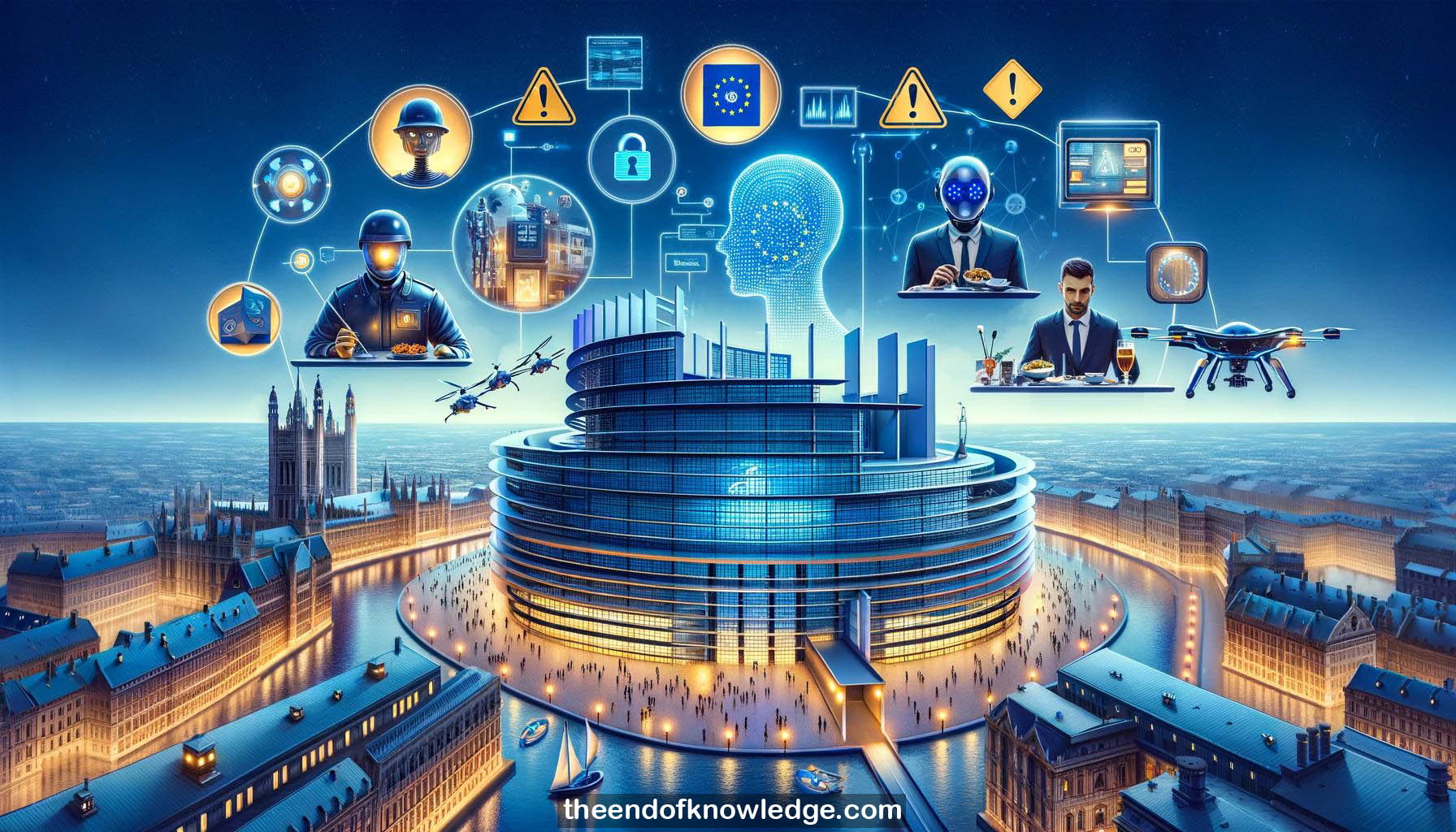

1.- The EU has proposed the Artificial Intelligence Act to regulate AI. It is the first attempt at horizontal AI regulation.

2.- The regulation defines an AI system broadly and lists AI techniques in an annex, regulating uses rather than the technology itself.

3.- Most AI systems are expected to fall under the minimal/no risk category and will not be regulated.

4.- Some AI systems have special transparency obligations, such as notifying humans when interacting with a bot or using emotion recognition.

5.- High-risk AI systems include those embedded in regulated products like toys and machinery, and standalone systems in 8 defined areas.

6.- High-risk systems must undergo a conformity assessment by the provider to verify requirements are met before being put on the EU market.

7.- Four types of AI practices are prohibited as an unacceptable risk: manipulation, exploitation of vulnerabilities, social scoring, and real-time biometric identification.

8.- Private companies could compile social scoring systems without falling under the prohibition; only the use of social scoring by public authorities is banned.

9.- Real-time biometric identification in publicly accessible spaces is prohibited with some exceptions. Post-event identification is high risk but allowed.

10.- The regulation applies whenever the AI system affects people in the EU, even if the provider and user are outside the EU.
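
The risk tiers in the points above can be pictured as a simple lookup from tier to consequence. The following is a minimal illustrative sketch only, not a legal tool: the names `RiskTier`, `OBLIGATIONS`, `AISystem`, and `obligation_for` are hypothetical, and the obligation strings merely paraphrase the summary points.

```python
from dataclasses import dataclass
from enum import Enum


class RiskTier(Enum):
    # Hypothetical labels for the four tiers described in the summary.
    MINIMAL = "minimal or no risk"
    TRANSPARENCY = "special transparency obligations"
    HIGH = "high risk"
    UNACCEPTABLE = "unacceptable risk"


# Consequences per tier, paraphrased from the summary points (an assumption,
# not wording taken from the regulation itself).
OBLIGATIONS = {
    RiskTier.MINIMAL: "not regulated",
    RiskTier.TRANSPARENCY: "notify people, e.g. about bots or emotion recognition",
    RiskTier.HIGH: "provider conformity assessment before entering the EU market",
    RiskTier.UNACCEPTABLE: "prohibited",
}


@dataclass
class AISystem:
    name: str
    tier: RiskTier
    affects_people_in_eu: bool


def obligation_for(system: AISystem) -> str:
    """Return the paraphrased consequence for a system.

    Scope depends on whether people in the EU are affected, not on where
    the provider or user is located (point 10).
    """
    if not system.affects_people_in_eu:
        return "out of scope"
    return OBLIGATIONS[system.tier]
```

For example, `obligation_for(AISystem("support chatbot", RiskTier.TRANSPARENCY, True))` returns the transparency entry, while the same system with `affects_people_in_eu=False` is reported as out of scope. Assigning a real system to a tier requires legal analysis that this sketch does not attempt.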

Knowledge Vault built by David Vivancos 2024