Concept Graph & Summary using Claude 3 Opus | ChatGPT-4 | Llama 3:

Summary:

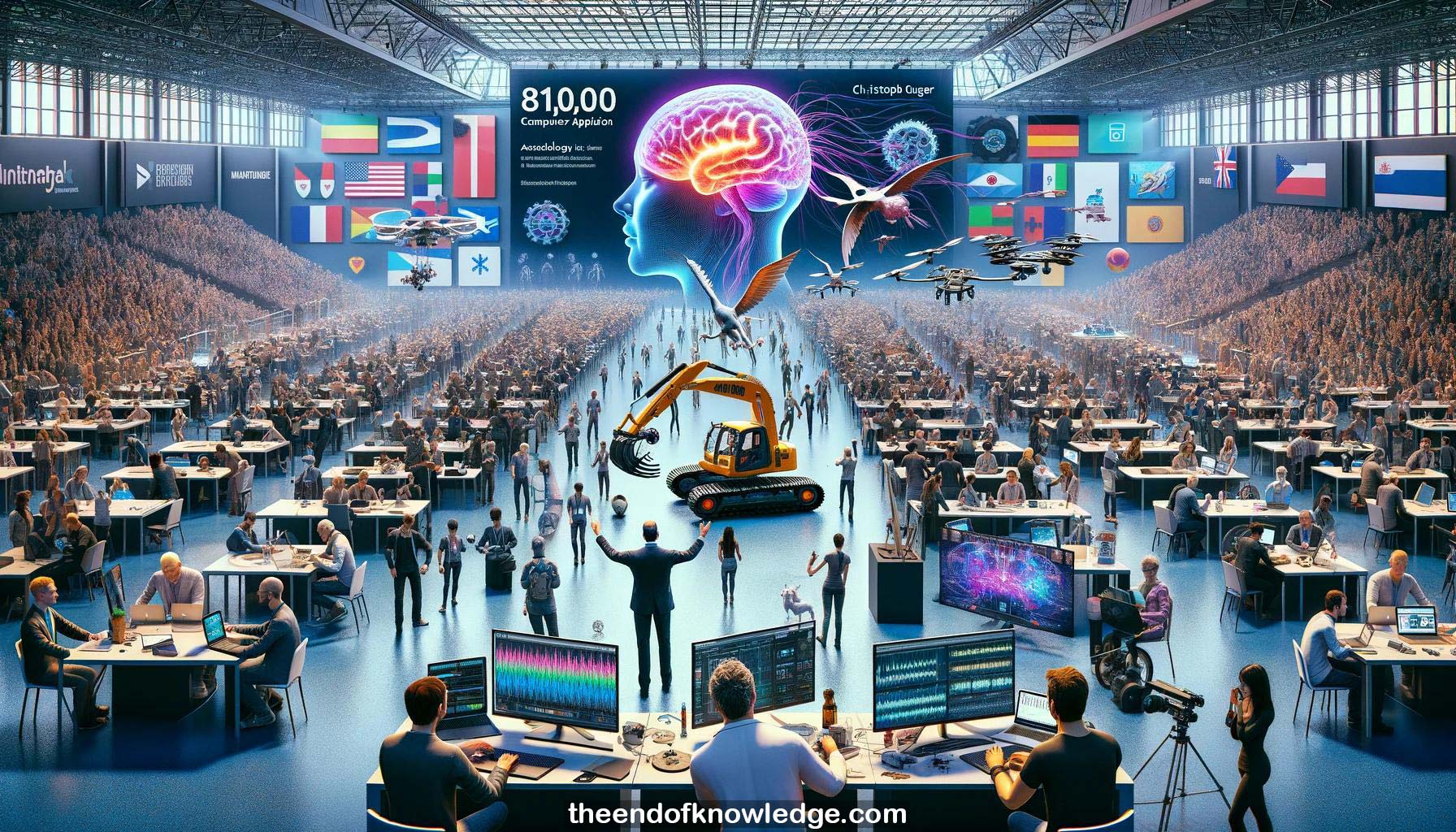

1.- BCI & Neurotechnology Spring School 2024 has 81,000 attendees from 118 countries with 140 hours of education and 85 speakers.

2.- Today is hackathon day with 24 hours to create BCI applications. It is a worldwide event with an expert jury.

3.- Hosting institutes span many countries including Austria, Netherlands, Denmark, UAE, Canada, USA, China, Italy, Colombia, Sweden, Poland, and Australia.

4.- Jury members come from University of Amsterdam, University of Timisoara, Bootsen Italy, Queen's University Canada, and University of Tennessee USA.

5.- Prizes include $1000 for 1st place and certificates for 2nd and 3rd. Categories cover gaming, programming, smart home control, art, etc.

6.- Past projects involved sewing machine headsets, prosthetic hands, drones, dream painting, VR/AR rehab, Sphero robots, digger control, and robotic painting.

7.- Christoph Guger welcomes attendees and summarizes the event statistics. He shows a project that controls a digger with the Unicorn Speller.

8.- Martin Spuler from g.tec gives a presentation on the hardware and software interfaces of the Unicorn brain-computer interface system.

9.- The Unicorn amplifier has 8 EEG channels with 24-bit resolution and hybrid dry/gel electrodes. It works out of the box.

10.- The Unicorn is used by many research institutions worldwide. Key features are 24-bit resolution, hybrid electrodes, and smart electrode placement.

11.- The Unicorn software ranges from APIs to finished applications for research, art, robotics, education and more. It supports many platforms.

12.- EEG pre-processing uses a bandpass filter to keep the 0.5-50 Hz frequencies of interest and a notch filter to remove power-line noise.

13.- EEG rhythms like delta, theta, alpha, beta and gamma reflect different brain states from deep sleep to high alertness.

14.- Visually evoked potentials like P300, SSVEP and c-VEP use visual stimuli to elicit detectable brain responses for BCI control.

15.- A live demo shows applying the unicorn headset, checking impedances, and visualizing clean EEG data with eyes open/closed.

16.- The Unicorn Speller uses P300 to select characters by flashing rows and columns. It can be used to control other applications.

17.- The Blondie Checker ranks images based on detecting P300 responses when a blonde face appears, showing the brain detects target stimuli.

18.- The Unity game engine interface allows creating BCI games that combine keyboard control with SSVEP control of the environment.

19.- A live demo shows SSVEP control in a Unity game, followed by a discussion of the development tools and configuration options in the Unity framework.

20.- Tips for BCI game design include not having too many simultaneous stimuli, adjusting classifier parameters, and combining input modalities.

21.- The Unity package for Unicorn is installed via the Unity package manager. Prefabs make it easy to integrate into projects.

22.- Signal quality, BCI manager, and Flash controllers handle the core BCI pipeline. Developers can customize the UI and objects.

23.- Simulator mode allows testing games without connecting the BCI hardware. The framework supports sending data to other apps via LSL.

24.- Python, C#, C and other APIs allow flexible integration of Unicorn into custom applications following a basic data acquisition template.

25.- Stroke rehab datasets (motor imagery), P300 datasets, SSVEP datasets, and datasets from unresponsive patients (vibrotactile P300) are available.

26.- ECoG datasets of hand pose classification and video watching with categorization of visual stimuli provide an invasive BCI perspective.

27.- The stroke rehab paradigm provides closed-loop feedback via FES, with datasets showing changes pre/post therapy. A pipeline is described.

28.- The P300 speller uses row/column flashing to detect the target letter. Datasets from 5 subjects recorded with Unicorn are provided.

29.- SSVEP datasets with 4 frequencies (9-15 Hz) from 2 subjects viewing flickering stimuli are detailed, including an LDA classifier.

30.- Unresponsive patient data uses a 3-class tactile P300 paradigm (target wrist, non-target wrist, distractor ankle) to assess command following.

31.- Accuracy differences between sessions for unresponsive patients warrant further analysis for potential explanatory biomarkers.

32.- The invasive hand pose dataset, recorded from a high-density ECoG grid during a rock-paper-scissors task, spans sensorimotor cortex.

33.- High frequency activity (>50 Hz) provides a robust signal for hand poses, with data sampled at 1.2 kHz. Reference pipelines are shared.

34.- Another ECoG dataset involves a patient watching a video of a garden walk with various visual categories (faces, objects, digits, etc).

35.- Analyzing the garden walk ECoG data could reveal electrodes discriminative of visual categories, e.g. in temporal lobe.

36.- Next, attendees will move to Discord and other forums to network and begin the hackathon. Datasets and links are being distributed.

37.- Presentations resume at 4 pm Vienna time with NeuroTechX, followed by team presentations and awards tomorrow afternoon.

38.- Many teams are participating, which is exciting. Christoph looks forward to the project results presented tomorrow.
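
The pre-processing step in item 12 (bandpass plus notch filtering) could be sketched roughly as follows with NumPy and SciPy. This is only an illustration, not the pipeline shown in the talk: the 250 Hz sampling rate, the 50 Hz mains frequency, the filter order and the function names `preprocess_eeg` and `amplitude_at` are all assumptions that do not come from the summary.

```python
import numpy as np
from scipy.signal import butter, filtfilt, iirnotch, sosfiltfilt

FS = 250.0       # assumed sampling rate in Hz (not stated in the summary)
MAINS_HZ = 50.0  # assumed power-line frequency; use 60.0 where applicable


def preprocess_eeg(data, fs=FS, band=(0.5, 50.0), mains_hz=MAINS_HZ):
    """Zero-phase bandpass plus notch filter for EEG shaped (samples, channels)."""
    data = np.asarray(data, dtype=float)
    # Butterworth bandpass as second-order sections for numerical stability
    sos = butter(4, list(band), btype="bandpass", fs=fs, output="sos")
    filtered = sosfiltfilt(sos, data, axis=0)
    # Narrow notch centred on the mains frequency (bandwidth = mains_hz / Q)
    b, a = iirnotch(mains_hz, Q=30.0, fs=fs)
    return filtfilt(b, a, filtered, axis=0)


def amplitude_at(signal, freq, fs=FS):
    """Single-sided spectral amplitude of a 1-D signal at the bin nearest freq."""
    spectrum = np.abs(np.fft.rfft(signal)) * 2.0 / len(signal)
    freqs = np.fft.rfftfreq(len(signal), d=1.0 / fs)
    return spectrum[np.argmin(np.abs(freqs - freq))]


if __name__ == "__main__":
    # Synthetic 8-channel test signal: 10 Hz rhythm + mains hum + DC offset
    t = np.arange(0, 10.0, 1.0 / FS)
    channel = (10.0 * np.sin(2 * np.pi * 10.0 * t)
               + 20.0 * np.sin(2 * np.pi * MAINS_HZ * t)
               + 100.0)
    raw = np.column_stack([channel] * 8)
    clean = preprocess_eeg(raw)
    print("10 Hz amplitude before/after:",
          amplitude_at(raw[:, 0], 10.0), amplitude_at(clean[:, 0], 10.0))
    print("mains amplitude before/after:",
          amplitude_at(raw[:, 0], MAINS_HZ), amplitude_at(clean[:, 0], MAINS_HZ))
```

Forward-backward filtering (`sosfiltfilt`, `filtfilt`) avoids phase distortion but only works offline on recorded data; a real-time BCI would need causal filters instead.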

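The row/column selection logic behind the P300 speller (items 16 and 28) can be illustrated with a small sketch: the selected character is the one at the intersection of the row and the column whose flashes produced the strongest P300-like response. The 6x6 matrix layout, the per-flash scores and the name `decode_character` are hypothetical; the summary does not describe the actual speller layout or classifier.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical 6x6 layout; the summary does not give the actual speller matrix.
MATRIX = [
    "ABCDEF",
    "GHIJKL",
    "MNOPQR",
    "STUVWX",
    "YZ1234",
    "56789_",
]


def decode_character(flashes, matrix=MATRIX):
    """Return the character at the row/column with the highest mean score.

    flashes: iterable of (kind, index, score) where kind is "row" or "col"
    and score is an assumed per-flash classifier output (higher means more
    P300-like).
    """
    scores = {"row": defaultdict(list), "col": defaultdict(list)}
    for kind, index, score in flashes:
        scores[kind][index].append(score)
    if not scores["row"] or not scores["col"]:
        raise ValueError("need at least one row flash and one column flash")
    # Averaging over repeated flashes reduces the effect of single-trial noise
    best_row = max(scores["row"], key=lambda i: mean(scores["row"][i]))
    best_col = max(scores["col"], key=lambda j: mean(scores["col"][j]))
    return matrix[best_row][best_col]


if __name__ == "__main__":
    # Simulated scores: the user attends to the cell in row 2, column 3
    flashes = []
    for repetition in range(3):
        for i in range(6):
            flashes.append(("row", i, 0.9 if i == 2 else 0.1 + 0.05 * repetition))
            flashes.append(("col", i, 0.8 if i == 3 else 0.2))
    print(decode_character(flashes))
```

Averaging scores across several repetitions trades typing speed for accuracy, which is why the number of flash repetitions is a typical tuning parameter in spellers of this kind.
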
Knowledge Vault built by David Vivancos 2024