Concept Graph & Summary using Claude 3 Opus | Chat GPT4 | Llama 3:

Summary:

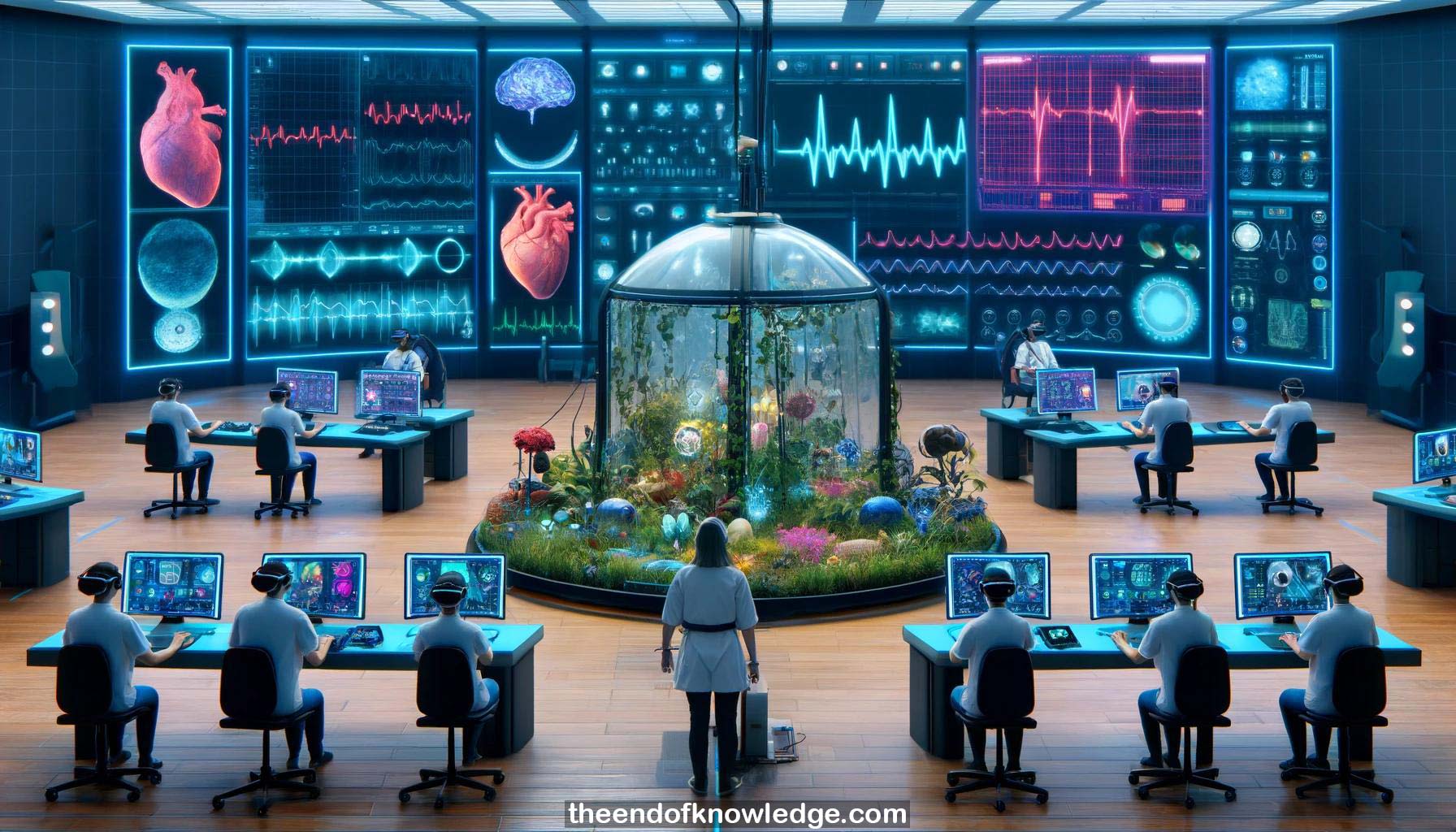

1.- Tiago Falk's research involves building tools to extract features from biosignals in real-time to update virtual environments or provide biofeedback.

2.- In 2018, they could overlay mental state, blink rate, and heart rate in real time and regulate the VR environment based on stress levels.

3.- They detected eye movements from EEG headset signals to estimate gaze direction with 80% accuracy and 10 degrees of error.

4.- In 2020, they redesigned the hardware to be embedded and sanitizable, allowing fully remote at-home studies during pandemic lockdowns.

5.- They ran an at-home study with the VR game Half-Life: Alyx, measuring EEG and sending surveys, and found correlates of game experience.

6.- Perception of time loss correlated with a combination of head movements, EEG features, and heart rate variability, indicating high engagement.

7.- They studied the impact of stimulating multiple senses (heat, vibration, wind, smell) on presence, immersion, realism, engagement, and overall experience.

8.- In VR gaming, stimulating more senses increased all those factors without affecting cybersickness. EEG showed more openness and pleasant feelings.

9.- In VR nature experiences, stimulating more senses, especially smell and heat, increased relaxation. The effects appeared in both self-reports and physiological signals.

10.- They tested letting hospital nurses de-stress in VR nature environments during breaks, finding improved relaxation with multisensory stimulation.

11.- In a study with 30 PTSD patients, 12 VR nature sessions improved heart rate variability, processing speed, attention and memory.

12.- EEG signatures after 5 sessions could predict if a patient would benefit from the VR PTSD intervention or not.

13.- They tested if multisensory VR could prime and improve subsequent motor imagery BCI performance in naïve users.

14.- Physically grasping and smelling oranges in VR right before imagined grasping boosted motor imagery BCI accuracy more than visual-only.

15.- Spatial filter weights increased more when multisensory stimulation happened right before the motor imagery, indicating priming of relevant brain activity.

16.- Future work will examine neural synchrony between multiple users socializing in VR/AR, but this raises privacy and security concerns.

17.- Effective passive BCIs require expertise in signal processing, experimental design, psychology, and machine learning, not just applying ML to raw data.

18.- Low-cost EEG-VR development is very feasible. Many potential healthcare, entertainment, education, and industrial applications are still to be explored.

19.- Companies can create virtually any requested smell. Their multisensory VR pod has 300 smell options made from essential oils.

20.- For the PTSD study, they measured ECG and are analyzing EEG, but did not use other sensors.

21.- Adding EEG measurement could enhance VR therapy compared to current commercial VR therapy solutions by providing medical-grade data.

22.- Exposure therapy for PTSD that recreates trauma-related triggers in VR is common, but they instead provided positive relaxing VR memories.

23.- No special permissions were needed to use the VR game Half-Life: Alyx in their research.

24.- They focus on altering mental state through stimuli rather than direct brain stimulation.

25.- Mismatches between visuals and smells in VR can break immersion. Smell labels such as "orange" were changed to "citrus" to avoid this.

26.- The company providing smells for their research no longer uses specific labels to avoid potential mismatches with visuals.

27.- Having congruency between smells and visuals is important for VR experiences to feel realistic and immersive.

28.- Incongruent smells, like a New York beach smell with Caribbean beach visuals, caused people to prematurely end the VR experience.

29.- One person's "orange" smell might be perceived as "lemon" by someone else, so avoiding specific labels helps prevent jarring mismatches.

30.- There is a lot of future research potential at the intersection of VR/AR, passive BCIs, multisensory stimulation, and hyperscanning.
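
Points 6 and 11 above refer to heart rate variability (HRV). As a rough illustration only, and not the pipeline used in the research summarized here, the sketch below computes two common time-domain HRV metrics from a list of inter-beat (RR) intervals in milliseconds. The function names and the sample values are hypothetical; real recordings would first need beat detection and artifact removal, which are not shown.

```python
import math
import statistics


def rmssd(rr_ms):
    """Root mean square of successive differences between RR intervals (ms)."""
    if len(rr_ms) < 2:
        raise ValueError("need at least two RR intervals")
    # Differences between each interval and the one before it.
    diffs = [b - a for a, b in zip(rr_ms, rr_ms[1:])]
    return math.sqrt(sum(d * d for d in diffs) / len(diffs))


def sdnn(rr_ms):
    """Sample standard deviation of RR intervals (ms)."""
    if len(rr_ms) < 2:
        raise ValueError("need at least two RR intervals")
    return statistics.stdev(rr_ms)


if __name__ == "__main__":
    # Hypothetical RR intervals in milliseconds, for illustration only.
    rr = [800, 810, 790, 805]
    print(f"RMSSD: {rmssd(rr):.2f} ms")
    print(f"SDNN:  {sdnn(rr):.2f} ms")
```

RMSSD reflects short-term, beat-to-beat variation, while SDNN reflects overall variability across the recording, so the two are usually reported together.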

Knowledge Vault built by David Vivancos 2024