
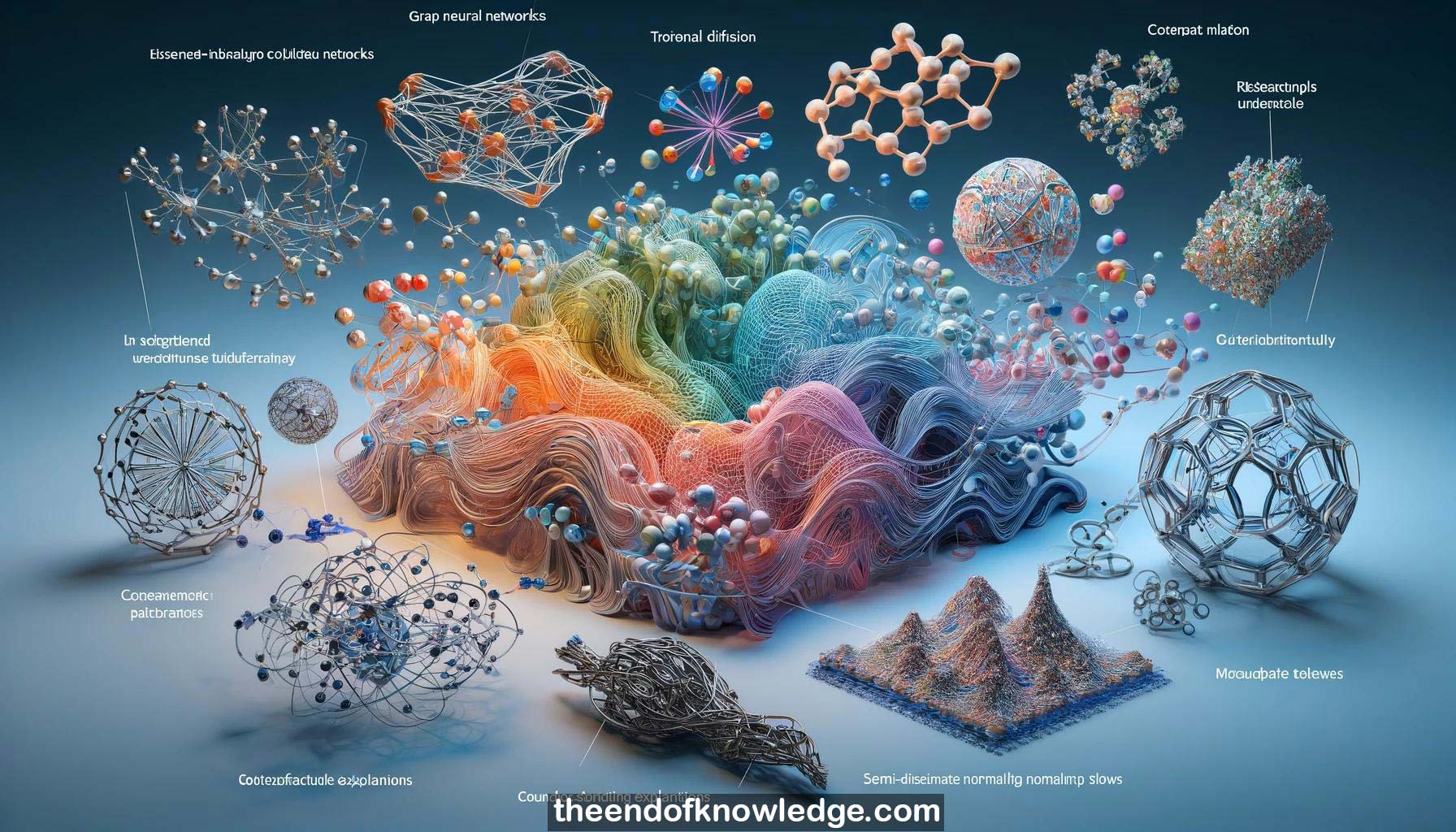

Concept Graph & Summary using Claude 3 Opus | ChatGPT-4 | Gemini Advanced | Llama 3:

Summary:

1.-Max Welling discussed deep learning for molecules and PDEs using graph neural networks with equivariance properties.

2.-Equivariant graph neural networks combine graph neural networks with equivariance to model 3D molecular structures.

3.-Graph neural networks for PDEs enable solving many types of PDEs with learned stencils and frequency marching.

4.-Conditional generation remains a challenge: generated molecules must be chemically stable, non-toxic, and synthesizable.

5.-Ellen Zhong presented cryoDRGN, a deep generative model for reconstructing 3D protein structures from 2D cryo-EM images.

6.-cryoDRGN uses coordinate-based neural networks, a VAE architecture, and exact pose inference to model heterogeneous protein structures.

7.-cryoDRGN was used to discover new protein structures and to visualize continuous protein dynamics from cryo-EM data.

8.-Future work includes ab initio reconstruction, exploratory data analysis, benchmarking, and incorporating protein sequence/structure information.

9.-Tristan Deleu introduced DAG-GFlowNet for Bayesian structure learning of Bayesian networks.

10.-DAG-GFlowNet approximates the posterior distribution over DAGs using generative flow networks (GFlowNets).

11.-A new detailed balance condition was introduced for GFlowNets in which every state is a terminating state.

12.-DAG-GFlowNet outperformed other Bayesian structure learning methods on both synthetic and real data.

13.-Bowen Jing and Gabriel Corso presented torsional diffusion, a diffusion model for molecular conformation generation.

14.-Torsional diffusion restricts diffusion to torsion angles, greatly reducing dimensionality compared to diffusing atomic coordinates.

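15.-Torsional diffusion defines the diffusion process on the hypertorus of torsion angles, using a wrapped normal perturbation kernel, and scores torsions with a model that respects the molecule's rotational and translational symmetry.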

16.-Torsional diffusion significantly outperformed existing rule-based and machine learning methods for conformation generation.

17.-Gimhani Eriyagama introduced MMACE, a model-agnostic counterfactual explanation method for explaining the predictions of arbitrary black-box models.

18.-MMACE generates a local chemical space around an input molecule and labels candidate counterfactuals using the black-box model.

19.-Counterfactual explanations provide intuitive, actionable insights into model predictions for drug-like molecules.

20.-The open-source exmol package provides an easy-to-use implementation of the MMACE algorithm.

21.-Pratyush Tiwary presented denoising diffusion probabilistic models (DDPMs) for generating sensible molecular conformations and trajectories.

22.-DDPMs learn distributions over molecules by diffusing to noise and then learning to denoise samples.

23.-DDPMs capture the Boltzmann distribution and generate samples from new regions of the energy landscape.

24.-For modeling non-Markovian dynamics from time series data, path-sampling LSTMs provide state-of-the-art results.

25.-Adding physics-based constraints to path-sampling LSTMs improves generation quality by reducing data noise.

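26.-Bahijja Tolulope Raimi presented DDRM (Denoising Diffusion Restoration Models), an unsupervised method for solving inverse problems using pre-trained diffusion models.

27.-DDRM operates in the spectral space given by the SVD of the degradation matrix, which lets a single method handle noisy linear inverse problems with general degradation matrices, including denoising and inpainting.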

28.-DDRM outperforms previous unsupervised inverse problem solvers in both PSNR and perceptual quality.

29.-Ricky T.Q. Chen introduced semi-discrete normalizing flows via differentiable Voronoi tessellation for modeling bounded supports.

30.-Voronoi tessellation enables flexible partitioning of a continuous space for dequantization and disjoint mixture modeling.
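Items 1-2 describe graph neural networks that respect 3D symmetries. Below is a minimal sketch of one well-known design, the EGNN layer of Satorras, Hoogeboom and Welling (2021). The talk's exact architecture is not given here. `phi_e` and `phi_x` are hypothetical fixed toy functions that stand in for learned MLPs. The usage lines rotate the input and confirm two things: the output coordinates come out rotated, and the node features are unchanged.

```python
import math

def phi_e(h_i, h_j, d2):
    # Hypothetical toy edge function standing in for a learned MLP.
    # It sees only invariant inputs: node features and squared distance.
    return math.tanh(h_i + h_j - 0.1 * d2)

def phi_x(m):
    # Hypothetical toy scalar weight for the coordinate update.
    return 0.5 * m

def egnn_layer(h, x):
    """One EGNN-style layer on a fully connected graph.
    h: list of scalar node features; x: list of 3D coordinates (tuples)."""
    n = len(h)
    new_h, new_x = [], []
    for i in range(n):
        m_i = 0.0
        shift = [0.0, 0.0, 0.0]
        for j in range(n):
            if i == j:
                continue
            diff = [x[i][k] - x[j][k] for k in range(3)]
            m_ij = phi_e(h[i], h[j], sum(c * c for c in diff))
            m_i += m_ij
            # Relative vectors scaled by invariant scalars keep equivariance.
            w = phi_x(m_ij) / (n - 1)
            for k in range(3):
                shift[k] += diff[k] * w
        new_x.append(tuple(x[i][k] + shift[k] for k in range(3)))
        new_h.append(h[i] + m_i)  # toy stand-in for the learned phi_h update
    return new_h, new_x

def rotate(p, a=0.7, b=-1.3):
    """Rotate a 3D point about the z axis by b, then about the x axis by a."""
    c, s = math.cos(b), math.sin(b)
    x1, y1, z1 = c * p[0] - s * p[1], s * p[0] + c * p[1], p[2]
    c, s = math.cos(a), math.sin(a)
    return (x1, c * y1 - s * z1, s * y1 + c * z1)

h = [0.1, -0.3, 0.7]
x = [(0.0, 0.0, 0.0), (1.0, 0.2, -0.5), (-0.4, 1.1, 0.3)]
h_out, x_out = egnn_layer(h, x)
h_rot, x_rot = egnn_layer(h, [rotate(p) for p in x])
```

Because coordinates enter only through squared distances and relative vectors, any rotation or translation of the input passes straight through to the output coordinates.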
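Reconstructing a 3D structure from 2D cryo-EM images (items 5-8) generally rests on the Fourier slice theorem. The theorem says that the Fourier transform of a projection equals the central slice of the object's Fourier transform perpendicular to the projection direction. The sketch below checks the 2D-to-1D version on a small hypothetical array, using a naive DFT. Real cryo-EM pipelines work with 3D volumes, unknown poses, and FFTs, and none of that is shown here.

```python
import cmath

def dft(v):
    """Naive 1D discrete Fourier transform (O(n^2), fine for a demo)."""
    n = len(v)
    return [sum(v[t] * cmath.exp(-2j * cmath.pi * k * t / n) for t in range(n))
            for k in range(n)]

def dft2(img):
    """2D DFT of img[y][x]; returns F[ky][kx]."""
    height, width = len(img), len(img[0])
    rows = [dft(r) for r in img]  # transform along x
    cols = [dft([rows[y][kx] for y in range(height)]) for kx in range(width)]
    return [[cols[kx][ky] for kx in range(width)] for ky in range(height)]

# Hypothetical 4x5 "density map".
img = [[0, 1, 2, 1, 0],
       [1, 3, 5, 3, 1],
       [0, 2, 4, 2, 0],
       [0, 0, 1, 0, 0]]

# Project along y (sum each column), like an image formed along one axis.
projection = [sum(img[y][x] for y in range(len(img))) for x in range(len(img[0]))]
central_slice = dft2(img)[0]  # the ky = 0 line through the origin
```

Comparing `dft(projection)` with `central_slice` entry by entry shows they match up to rounding.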
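Item 11 mentions a detailed balance condition for GFlowNets whose states are all terminating. Below is a minimal sketch of a squared-log-residual loss for that condition. It assumes the condition takes the form used in the DAG-GFlowNet paper (Deleu et al., 2022): R(s') P_B(s | s') P_F(s_f | s) = R(s) P_F(s' | s) P_F(s_f | s'). In DAG-GFlowNet the probabilities come from a neural network over graphs and R is an unnormalized posterior. Here they are hypothetical numbers for a single transition.

```python
import math

def modified_db_loss(log_r_s, log_r_next, log_pf_next, log_pb_prev,
                     log_pf_stop_s, log_pf_stop_next):
    """Squared log-residual of the detailed balance condition for a GFlowNet
    in which every state is terminating:
        R(s') P_B(s | s') P_F(s_f | s) = R(s) P_F(s' | s) P_F(s_f | s')
    s -> s' is one transition; s_f denotes stopping (terminating)."""
    lhs = log_r_next + log_pb_prev + log_pf_stop_s
    rhs = log_r_s + log_pf_next + log_pf_stop_next
    return (lhs - rhs) ** 2

# Hypothetical values for one transition G -> G' (e.g., adding one edge).
consistent = modified_db_loss(math.log(1.0), math.log(2.0), math.log(0.5),
                              math.log(0.25), math.log(0.25), math.log(0.25))
inconsistent = modified_db_loss(math.log(1.0), math.log(2.0), math.log(0.5),
                                math.log(0.5), math.log(0.25), math.log(0.25))
```

Working in log space turns the product condition into a sum. A parameterization that drives this loss to zero on every transition satisfies the condition everywhere.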
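Items 14-15 describe diffusion restricted to torsion angles, which live on a hypertorus. Torsional diffusion uses a wrapped normal perturbation kernel for this. Its score has no closed form, but it can be computed from a truncated periodic sum. The sketch below shows only the forward noising and the score target at one noise level. The score network, the noise schedule, and the reverse-time sampler are omitted. `n_terms` is an arbitrary truncation choice, and the torsion values are hypothetical.

```python
import math
import random

def wrap(angle):
    """Map an angle to [-pi, pi)."""
    return (angle + math.pi) % (2 * math.pi) - math.pi

def perturb(torsions, sigma, rng):
    """Forward noising on the hypertorus: add Gaussian noise, then wrap."""
    return [wrap(t + sigma * rng.gauss(0.0, 1.0)) for t in torsions]

def wrapped_normal_log_density(d, sigma, n_terms=10):
    """Unnormalized log density of a wrapped normal (truncated periodic sum)."""
    return math.log(sum(math.exp(-(d + 2 * math.pi * k) ** 2 / (2 * sigma ** 2))
                        for k in range(-n_terms, n_terms + 1)))

def wrapped_normal_score(d, sigma, n_terms=10):
    """Derivative of the log density above with respect to the offset d.
    For very small sigma this naive sum can underflow; log-sum-exp is safer."""
    num = den = 0.0
    for k in range(-n_terms, n_terms + 1):
        shifted = d + 2 * math.pi * k
        w = math.exp(-shifted ** 2 / (2 * sigma ** 2))
        num += -shifted / sigma ** 2 * w
        den += w
    return num / den

rng = random.Random(0)
clean = [0.3, -2.9, 3.0]  # hypothetical torsion angles (radians)
noisy = perturb(clean, sigma=1.0, rng=rng)
# Denoising score-matching target per torsion, from the wrapped offset.
targets = [wrapped_normal_score(wrap(n - c), 1.0) for n, c in zip(noisy, clean)]
```

For small sigma, the score reduces to the familiar Gaussian value -d / sigma^2. For large sigma, it flattens out because the kernel approaches uniform on the circle.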
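Items 17-18 describe labeling a local chemical space with the black-box model and then reporting counterfactuals. Below is a minimal sketch of the final selection step only. It assumes candidates are ranked by Tanimoto similarity of molecular fingerprints. The fingerprints here are hypothetical sets of on-bits, and `black_box` is a hypothetical stand-in classifier. A real implementation would compute fingerprints from molecular structures and would generate the candidates by mutating the input molecule. That generation step is not shown.

```python
def tanimoto(a, b):
    """Tanimoto similarity between two fingerprints given as sets of on-bits."""
    if not a and not b:
        return 1.0
    return len(a & b) / len(a | b)

def best_counterfactual(base_fp, candidates, black_box):
    """Most similar candidate whose black-box label differs from the input's."""
    base_label = black_box(base_fp)
    best, best_sim = None, -1.0
    for name, fp in candidates.items():
        if black_box(fp) != base_label:
            sim = tanimoto(base_fp, fp)
            if sim > best_sim:
                best, best_sim = name, sim
    return best, best_sim

# Hypothetical black box: predicts "active" whenever bit 7 is set.
black_box = lambda fp: 7 in fp
base = {1, 3, 7, 12}
candidates = {            # hypothetical local chemical space
    "mut_a": {1, 3, 7, 12, 15},
    "mut_b": {1, 3, 12},
    "mut_c": {1, 12, 20},
    "mut_d": {3, 7, 9},
}
best, best_sim = best_counterfactual(base, candidates, black_box)
```

Here `mut_b` is chosen. It flips the prediction while sharing three of the four on-bits of the input, which is what makes it a small, readable change to point at.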
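Items 22-23 summarize how DDPMs learn a distribution by diffusing data to noise and learning to denoise it. Below is a minimal sketch of the closed-form forward process from Ho et al. (2020), x_t = sqrt(alpha_bar_t) x_0 + sqrt(1 - alpha_bar_t) eps, using their linear beta schedule. The input is a hypothetical flattened list of atomic coordinates. The denoising network, which would be trained to predict eps, is replaced by the true noise, so the round trip only checks the algebra.

```python
import math
import random

def linear_alpha_bars(T=1000, beta_1=1e-4, beta_T=0.02):
    """Cumulative products alpha_bar_t = prod_{s<=t} (1 - beta_s)."""
    alpha_bars, prod = [], 1.0
    for t in range(T):
        beta = beta_1 + (beta_T - beta_1) * t / (T - 1)
        prod *= 1.0 - beta
        alpha_bars.append(prod)
    return alpha_bars

def q_sample(x0, t, alpha_bars, eps):
    """Sample x_t from q(x_t | x_0) in one step."""
    ab = alpha_bars[t]
    return [math.sqrt(ab) * a + math.sqrt(1 - ab) * e for a, e in zip(x0, eps)]

def predict_x0(xt, t, alpha_bars, eps_hat):
    """Invert the forward step given a (predicted) noise eps_hat."""
    ab = alpha_bars[t]
    return [(a - math.sqrt(1 - ab) * e) / math.sqrt(ab) for a, e in zip(xt, eps_hat)]

rng = random.Random(0)
alpha_bars = linear_alpha_bars()
x0 = [0.0, 0.0, 0.0, 1.1, 0.0, 0.0, -0.4, 1.0, 0.0]  # hypothetical coordinates
eps = [rng.gauss(0.0, 1.0) for _ in x0]
xt = q_sample(x0, 500, alpha_bars, eps)
recovered = predict_x0(xt, 500, alpha_bars, eps)
```

In training, a network would see `xt` and `t` and be fit to `eps` with a squared error. Sampling then runs the learned denoiser backward from pure noise.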
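Item 30 describes partitioning a continuous space into Voronoi cells for dequantization. Below is a minimal sketch of that idea. It assumes fixed hypothetical anchor points in 2D. A discrete label k is mapped to a continuous point inside anchor k's cell, and mapping back is a nearest-anchor lookup. Rejection sampling from a bounding box is swapped in purely for illustration. The method in the talk relies on a differentiable tessellation, which rejection sampling does not provide.

```python
import math
import random

def nearest_anchor(point, anchors):
    """Index of the Voronoi cell containing point (its nearest anchor)."""
    return min(range(len(anchors)), key=lambda k: math.dist(point, anchors[k]))

def dequantize(k, anchors, box, rng, max_tries=10_000):
    """Sample a continuous point inside cell k by rejection from a box."""
    (xmin, xmax), (ymin, ymax) = box
    for _ in range(max_tries):
        p = (rng.uniform(xmin, xmax), rng.uniform(ymin, ymax))
        if nearest_anchor(p, anchors) == k:
            return p
    raise RuntimeError(f"no sample landed in cell {k}")

anchors = [(0.0, 0.0), (2.0, 0.0), (0.0, 2.0), (2.0, 2.0)]  # hypothetical
box = ((-1.0, 3.0), (-1.0, 3.0))
rng = random.Random(0)
labels = [2, 0, 3, 3, 1]  # hypothetical discrete data
points = [dequantize(k, anchors, box, rng) for k in labels]
```

Because the cells are disjoint and cover the space, every continuous point maps back to exactly one label. This is what makes the partition usable for both dequantization and disjoint mixtures.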

Knowledge Vault built by David Vivancos 2024