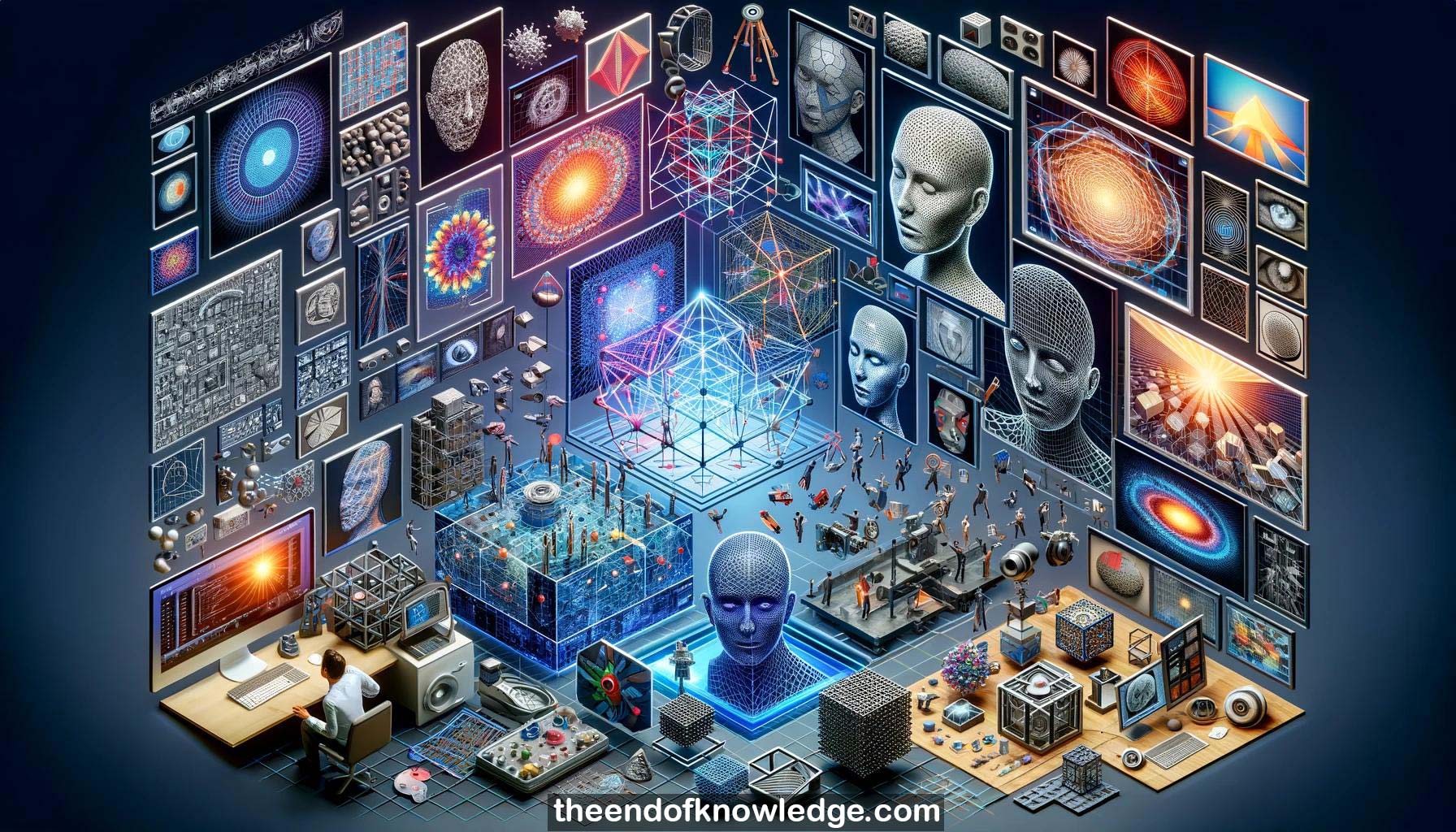

Concept Graph & Summary using Claude 3 Opus | Chat GPT4 | Gemini Adv | Llama 3:

Summary:

1.-Lourdes Agapito discusses how to learn 3D representations of the world from just images or videos, without 3D annotations.

2.-Structure from motion and multi-view stereo are classic examples of learning 3D from 2D observations, using geometric optimization methods.

3.-Neural networks can now be used to infer 3D representations, trained with 2D losses like photometric consistency between synthesized and actual views.

4.-The 3D representations can be discrete voxels, point clouds, meshes, or implicit functions like signed distance fields represented by neural networks.

5.-Agapito's research focuses on learning deformable 3D models that capture how object shapes vary over time and across object categories.

6.-Low-rank embeddings can be learned from 2D observations to efficiently represent 3D deformations of objects like faces, without 3D scan data.

7.-Photometric losses comparing re-rendered images to input video frames enable learning detailed deformable 3D face models for applications like multilingual video synthesis.

8.-Object-aware 3D scene representations combine 3D reconstruction with 2D object detection to attach semantic labels to 3D geometry.

9.-Implicit neural representations like DeepSDF can represent full 3D shapes from partial observations by leveraging pre-trained shape priors.

10.-Neural radiance fields (NeRF) use fully-connected networks to represent 3D scenes and enable novel view synthesis from a set of input images.

11.-Challenges remain in learning 3D representations that are useful for embodied agents safely interacting with humans in the real world.

12.-Robots need to anticipate human actions and incorporate physical priors, not just recognize 3D geometry, to assist humans without explicit commands.

13.-Generative 3D models should disentangle factors like shape, texture, lighting and deformation to enable controlled editing and synthesis of novel objects.

14.-Techniques like ConvNeRF enable category-level 3D reconstruction from a single image by learning shape and texture priors from image collections.

15.-3D reconstruction of dynamic scenes and deformable objects like the human body remains an open challenge compared to static scenes.

16.-Realistic editing of facial expression, emotion and body language in synthesized talking head videos is an unsolved problem.

17.-Scaling facial animation to work from a small number of photos rather than several minutes of training video is an active research area.

18.-Synthesizing complete dynamic scenes with people interacting with objects is extremely challenging and an important open problem.

19.-3D-aware neural scene representations should be extended to predict object affordances, semantics and physical properties, not just geometry and appearance.

20.-Self-supervised learning of 3D representations should explore integrating multiple modalities like vision, language, audio and interaction to reduce annotation requirements.

21.-The computer vision, graphics, robotics and machine learning communities should collaborate to develop broadly useful 3D scene representations for perception and interaction.
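
Points 3, 7 and 10 rest on the same idea: render an image from the 3D representation and compare it with the observed pixels. The following is a minimal sketch of that loop for a single ray, using the standard NeRF-style quadrature for volume rendering and a mean squared photometric error. The function names `composite_ray` and `photometric_loss` are illustrative, not taken from the talk, and the densities and colors would normally come from a trained network rather than being passed in by hand.

```python
import math


def composite_ray(densities, colors, deltas):
    """Alpha-composite samples along one ray (NeRF-style quadrature).

    densities: non-negative volume density at each sample
    colors:    RGB triple at each sample
    deltas:    distance between consecutive samples
    Returns the rendered RGB and the per-sample weights.
    """
    transmittance = 1.0  # fraction of light that has not been absorbed yet
    rgb = [0.0, 0.0, 0.0]
    weights = []
    for sigma, color, delta in zip(densities, colors, deltas):
        alpha = 1.0 - math.exp(-sigma * delta)  # opacity of this segment
        weight = transmittance * alpha
        weights.append(weight)
        for k in range(3):
            rgb[k] += weight * color[k]
        transmittance *= 1.0 - alpha
    return rgb, weights


def photometric_loss(rendered, observed):
    """Mean squared error between a rendered and an observed pixel."""
    return sum((r - o) ** 2 for r, o in zip(rendered, observed)) / len(rendered)


# Hypothetical ray: empty space followed by an opaque red surface.
pixel, _ = composite_ray(
    densities=[0.0, 1e9],
    colors=[(0.0, 1.0, 0.0), (1.0, 0.0, 0.0)],
    deltas=[0.5, 0.5],
)
loss = photometric_loss(pixel, [1.0, 0.0, 0.0])
```

In training, a loss of this kind would be averaged over many rays and minimized with respect to the network parameters, which is what allows 3D structure to be learned from 2D images alone.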
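
Points 4 and 9 mention signed distance fields as an implicit shape representation. As a rough illustration, the sketch below uses an analytic sphere in place of a learned network such as DeepSDF: the function returns a negative value inside the shape, a positive value outside, and zero on the surface. The surface along a ray is then found by sphere tracing. The names `sphere_sdf` and `sphere_trace` and all default parameters are assumptions made for this example.

```python
import math


def sphere_sdf(point, center=(0.0, 0.0, 0.0), radius=1.0):
    """Signed distance to a sphere: < 0 inside, 0 on the surface, > 0 outside."""
    return math.dist(point, center) - radius


def sphere_trace(origin, direction, sdf, max_steps=64, eps=1e-6, max_dist=100.0):
    """March along a unit-length ray until the SDF reaches (almost) zero.

    Returns the distance to the surface, or None if the ray misses.
    """
    t = 0.0
    for _ in range(max_steps):
        point = tuple(o + t * d for o, d in zip(origin, direction))
        distance = sdf(point)
        if distance < eps:
            return t  # close enough to the zero level set
        t += distance  # the SDF value is always a safe step size
        if t > max_dist:
            return None
    return None


# A ray starting 3 units in front of a unit sphere hits it after 2 units.
hit = sphere_trace((0.0, 0.0, -3.0), (0.0, 0.0, 1.0), sphere_sdf)
# A ray offset far to the side never reaches the surface.
miss = sphere_trace((0.0, 3.0, -3.0), (0.0, 0.0, 1.0), sphere_sdf)
```

A learned implicit model would replace `sphere_sdf` with a network conditioned on a latent shape code, so that the same query interface covers a whole family of shapes.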
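
Point 6 refers to low-rank embeddings for deformable shapes. One common way to read this, offered here only as a sketch, is a linear shape basis: every deformed shape is a mean shape plus a weighted sum of a small number of deformation modes, so each frame is described by a handful of coefficients instead of the full set of 3D points. The function `deform` and the toy data are hypothetical.

```python
def deform(mean_shape, basis, coefficients):
    """Return mean_shape + sum_k coefficients[k] * basis[k].

    mean_shape:   list of 3D points
    basis:        list of deformation modes, each a list of 3D offsets
    coefficients: one weight per mode (the low-dimensional embedding)
    """
    shape = [list(point) for point in mean_shape]
    for coeff, mode in zip(coefficients, basis):
        for i, offset in enumerate(mode):
            for axis in range(3):
                shape[i][axis] += coeff * offset[axis]
    return shape


# Toy example: two points and a single mode that moves the second point upward.
mean_shape = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0)]
basis = [[(0.0, 0.0, 0.0), (0.0, 1.0, 0.0)]]
half_open = deform(mean_shape, basis, [0.5])
```

When only 2D observations are available, the mean shape, the modes and the per-frame coefficients would all be estimated jointly by minimizing a reprojection or photometric error.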

Knowledge Vault built by David Vivancos 2024