Concept Graph & Resume using Claude 3 Opus | Chat GPT4 | Gemini Adv | Llama 3:

Resume:

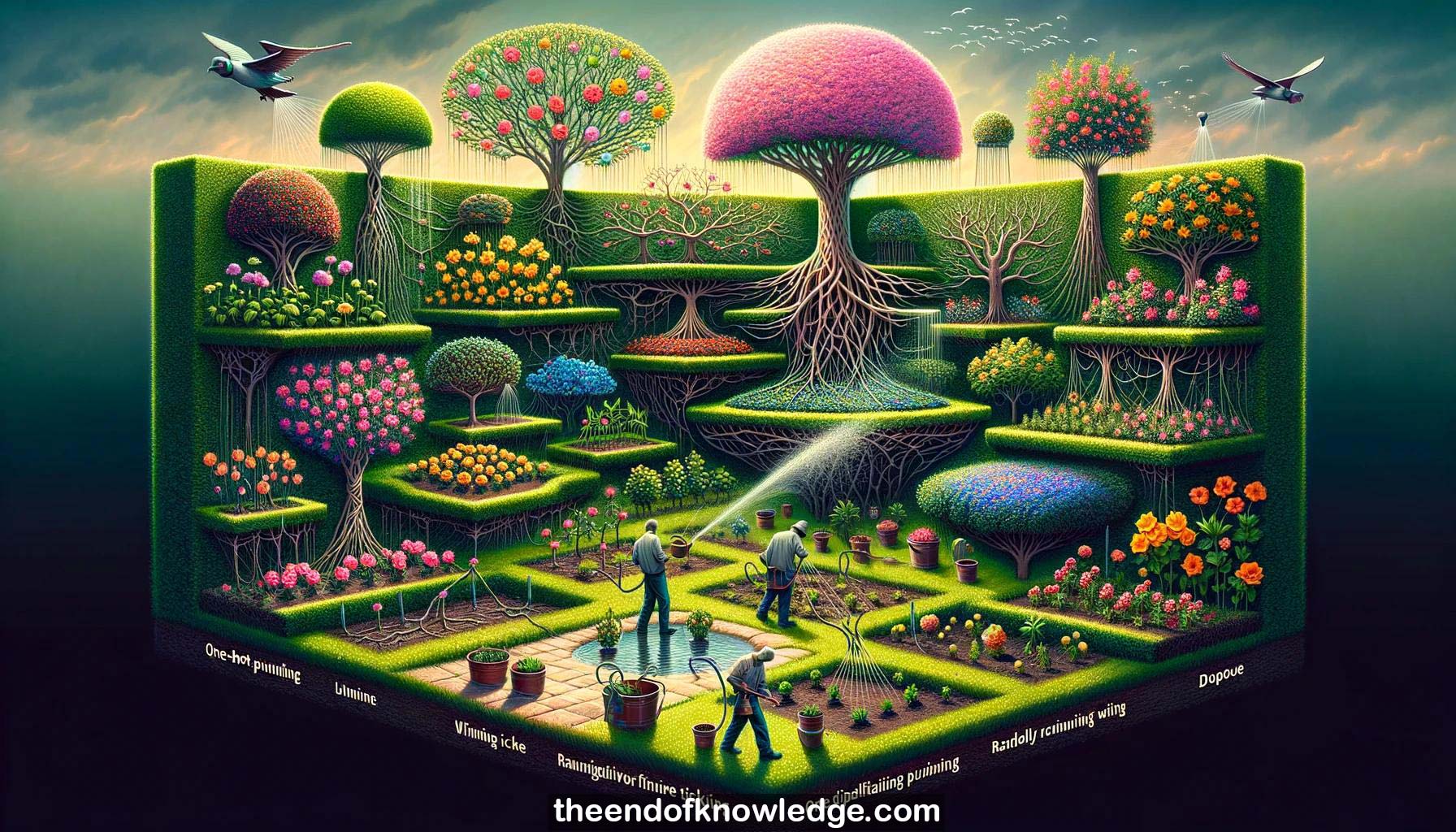

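1.-The lottery ticket hypothesis proposes that dense, randomly initialized neural networks contain sparse subnetworks that, when trained in isolation from their original initialization, reach test accuracy comparable to the original network in a similar number of iterations.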

2.-Iterative pruning repeats a cycle of training the network, pruning a fraction of its lowest-magnitude weights, and resetting the surviving weights to their original initial values, gradually sparsifying the network while attempting to maintain accuracy.

3.-Winning tickets are sparse subnetworks that, when reset to their original initialization and trained in isolation, learn faster and reach higher test accuracy than the original network.

4.-Randomly reinitializing winning tickets degrades their performance, showing the importance of a fortuitous initialization for enabling effective training of sparse networks.

5.-One-shot pruning, where a network is pruned just once after training, can find winning tickets, but iterative pruning finds smaller ones.

6.-In convolutional networks, iterative pruning finds winning tickets at 10-20% of the original size that train markedly faster and reach higher accuracy than the full network.

7.-Training the pruned networks with dropout leads to even greater accuracy improvements, suggesting pruning and dropout have complementary regularizing effects.

8.-On deeper networks like VGG-19 and ResNet-18, iterative pruning requires lower learning rates or learning rate warmup to find winning tickets.

9.-Winning tickets reach higher test accuracy at smaller sizes and learn faster than the original networks across fully-connected and convolutional architectures.

10.-Winning tickets found through iterative pruning match the accuracy of the original network at 10-20% of the size on the architectures tested.

11.-Winning tickets that are randomly reinitialized perform significantly worse, indicating the importance of the original initialization rather than just the architecture.

12.-The gap between training and test accuracy is smaller for winning tickets, suggesting they generalize better than the original overparameterized networks.

13.-Different iterative pruning strategies were evaluated; resetting the remaining weights to their original initialization after each round performed better than continuing training from the trained weights without resetting.

14.-The early-stopping iteration (the iteration of minimum validation loss) is used as a proxy metric for the speed of learning.

15.-Adam, SGD, and SGD with momentum optimizers were tested at various learning rates, all yielding winning tickets with iterative pruning.

16.-Slower pruning rates (e.g., removing 20% of the remaining weights per iteration rather than 60%) find smaller winning tickets that maintain performance.

17.-Different layer-wise pruning rates were compared for convolutional networks, with fully-connected layers pruned faster than convolutional layers for best results.

18.-Gaussian initializations with different standard deviations were tested; winning tickets were found in all cases with iterative pruning.

19.-Larger LeNet networks yielded winning tickets that reached higher accuracy, but relative performance was similar across different-sized LeNets.

20.-Winning tickets were found when training with and without dropout, though the presence of dropout affected learning speed in the unpruned networks.

21.-Pruning just convolutional or fully-connected layers alone was less effective than pruning both for reaching small winning ticket sizes.

22.-Winning ticket initializations form bimodal distributions shifted away from zero as networks are pruned, unlike the original Gaussian initializations.

23.-Units in winning tickets have similar levels of incoming connectivity after pruning, while some units retain far more outgoing connectivity.

24.-Adding Gaussian noise to winning ticket initializations only gradually degrades accuracy, showing robustness to perturbations in their initial weight values.

25.-Winning ticket weights consistently move further from their initializations during training than weights pruned early, suggesting that pruning finds fortuitous initialization trajectories.

26.-Globally pruning across all layers performs better than layer-wise pruning for finding small winning tickets in very deep networks (VGG-19, ResNet-18).

27.-Learning rate warmup enables finding winning tickets at larger learning rates in deep networks when standard iterative pruning struggles.

28.-Warmup durations of 5k-20k iterations all improved results, with 20k working best for ResNet-18 and 10k for VGG-19.

29.-The lottery ticket hypothesis may provide insight into the role of overparameterization and the optimization of neural networks.

30.-Future work aims to leverage winning tickets to improve training performance, design better networks, and advance theoretical understanding of neural networks.
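
Items 2, 3, 4 and 13 describe iterative pruning with resetting, along with the random-reinitialization control. The following is a minimal sketch in plain Python, assuming magnitude-based pruning over a flat list of weights. `train` is a hypothetical callable standing in for a real training run (it must keep masked weights at zero), and all function names are illustrative, not taken from the paper's code.

```python
import random
from typing import Callable, List, Tuple

Weights = List[float]
Mask = List[int]  # 1 = weight kept, 0 = weight pruned


def prune_smallest(trained: Weights, mask: Mask, rate: float) -> Mask:
    """Remove `rate` of the still-alive weights with the smallest trained magnitude."""
    alive = [i for i, m in enumerate(mask) if m]
    n_prune = int(round(rate * len(alive)))
    # Surviving indices ordered from smallest to largest |w| after training
    by_magnitude = sorted(alive, key=lambda i: abs(trained[i]))
    new_mask = list(mask)
    for i in by_magnitude[:n_prune]:
        new_mask[i] = 0
    return new_mask


def find_winning_ticket(
    init: Weights,
    train: Callable[[Weights, Mask], Weights],
    rate: float,
    rounds: int,
) -> Tuple[Mask, Weights]:
    """Iterative pruning with reset: train, prune, rewind survivors to `init`."""
    mask = [1] * len(init)
    for _ in range(rounds):
        # Each round restarts from the ORIGINAL initialization, masked
        start = [w * m for w, m in zip(init, mask)]
        trained = train(start, mask)
        mask = prune_smallest(trained, mask, rate)
    ticket = [w * m for w, m in zip(init, mask)]
    return mask, ticket


def random_reinit(mask: Mask, std: float, seed: int = 0) -> Weights:
    """Control experiment: keep the sparse mask but draw fresh Gaussian weights."""
    rng = random.Random(seed)
    return [rng.gauss(0.0, std) if m else 0.0 for m in mask]


def fake_train(weights: Weights, mask: Mask) -> Weights:
    # Hypothetical stand-in for SGD: doubles every weight (pruned zeros stay zero)
    return [2.0 * w for w in weights]


mask, ticket = find_winning_ticket([0.5, -0.1, 0.3, -0.8, 0.05], fake_train, rate=0.2, rounds=2)
print(mask)  # [1, 0, 1, 1, 0]
control = random_reinit(mask, std=0.1)
```

Because `fake_train` only scales the weights, the pruning order here matches the initial magnitudes. With real training, the trained magnitudes decide which weights survive, while the ticket still carries the original initial values, which is exactly what the reinitialized `control` discards.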
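
Item 14 uses the early-stopping iteration as a proxy for learning speed. Here is a minimal sketch, assuming validation loss was logged at a few checkpoints and that "early stopping" means the iteration with the lowest validation loss. The logged numbers below are made up for illustration.

```python
from typing import Dict


def early_stop_iteration(val_loss: Dict[int, float]) -> int:
    """Iteration with the lowest validation loss; ties go to the earliest one."""
    return min(sorted(val_loss), key=lambda it: val_loss[it])


# Hypothetical logs: iteration -> validation loss
dense = {1000: 0.52, 2000: 0.41, 3000: 0.37, 4000: 0.35, 5000: 0.36}
sparse = {1000: 0.45, 2000: 0.34, 3000: 0.36, 4000: 0.38, 5000: 0.40}

# A smaller early-stopping iteration is read as "learns faster"
print(early_stop_iteration(dense), early_stop_iteration(sparse))  # 4000 2000
```

Breaking ties toward the earliest iteration keeps the metric conservative about how fast a network learns.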
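
Item 16 compares pruning rates per round. Each round removes a fixed fraction p of the weights that are still alive, so the fraction remaining after n rounds is (1 - p)^n. The short sketch below turns that into a count of rounds, each of which costs a full training run. The function names are illustrative.

```python
def remaining_fraction(rate: float, rounds: int) -> float:
    """Fraction of the original weights left after `rounds` rounds, each pruning `rate` of the survivors."""
    return (1.0 - rate) ** rounds


def rounds_to_reach(rate: float, target: float) -> int:
    """Fewest rounds after which at most `target` of the original weights remain."""
    if not 0.0 < rate < 1.0:
        raise ValueError("rate must be in (0, 1)")
    if not 0.0 < target <= 1.0:
        raise ValueError("target must be in (0, 1]")
    rounds, left = 0, 1.0
    while left > target:
        left *= 1.0 - rate
        rounds += 1
    return rounds


for p in (0.2, 0.6):
    print(f"p={p}: {rounds_to_reach(p, 0.10)} rounds to reach 10%")
```

With p = 0.2, reaching 10% of the original weights takes 11 rounds (0.8^10 ≈ 0.107 is still above 0.1). With p = 0.6, it takes 3 rounds. The slower schedule therefore costs nearly four times as many training runs, and item 16 reports that it also finds smaller winning tickets.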
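
Items 17 and 26 contrast layer-wise pruning (each layer ranked separately, at its own rate) with global pruning (one magnitude ranking over all layers). Below is a minimal sketch, assuming weights are stored as flat per-layer lists. The layer names and values are hypothetical toy data.

```python
from typing import Dict, List

Layers = Dict[str, List[float]]
Masks = Dict[str, List[int]]


def layerwise_masks(layers: Layers, rates: Dict[str, float]) -> Masks:
    """Prune each layer by its own rate, ranking weights only within that layer."""
    masks: Masks = {}
    for name, w in layers.items():
        k = int(round(rates[name] * len(w)))
        smallest = set(sorted(range(len(w)), key=lambda i: abs(w[i]))[:k])
        masks[name] = [0 if i in smallest else 1 for i in range(len(w))]
    return masks


def global_masks(layers: Layers, rate: float) -> Masks:
    """Prune `rate` of ALL weights using a single magnitude ranking across layers."""
    ranked = sorted(
        (abs(w), name, i) for name, ws in layers.items() for i, w in enumerate(ws)
    )
    k = int(round(rate * len(ranked)))
    pruned = {(name, i) for _, name, i in ranked[:k]}
    return {
        name: [0 if (name, i) in pruned else 1 for i in range(len(ws))]
        for name, ws in layers.items()
    }


layers = {"conv": [0.9, -0.05, 0.7, 0.6], "fc": [0.1, -0.2, 0.3, 0.04]}
per_layer = layerwise_masks(layers, {"conv": 0.5, "fc": 0.5})
overall = global_masks(layers, 0.5)
```

Both calls remove four of the eight weights. The global ranking takes three of them from `fc` and only one from `conv`, so the per-layer rates emerge from the weight magnitudes rather than being chosen by hand as in item 17.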
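
Items 27 and 28 rely on learning rate warmup. The sketch below assumes a linear ramp from 0 to the target rate over `warmup_iters` iterations. The base rate of 0.1 is an illustrative value, and any decay applied later in training is omitted.

```python
def warmup_lr(iteration: int, base_lr: float, warmup_iters: int) -> float:
    """Linearly ramp the learning rate from 0 to `base_lr` over `warmup_iters`,
    then hold it at `base_lr` (later decay is not modeled here)."""
    if warmup_iters <= 0 or iteration >= warmup_iters:
        return base_lr
    return base_lr * iteration / warmup_iters


for it in (0, 2500, 5000, 10_000, 30_000):
    print(it, warmup_lr(it, base_lr=0.1, warmup_iters=10_000))
```

A `warmup_iters` of 10,000 or 20,000 corresponds to the durations that item 28 reports as working best for VGG-19 and ResNet-18, respectively.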

Knowledge Vault built by David Vivancos 2024