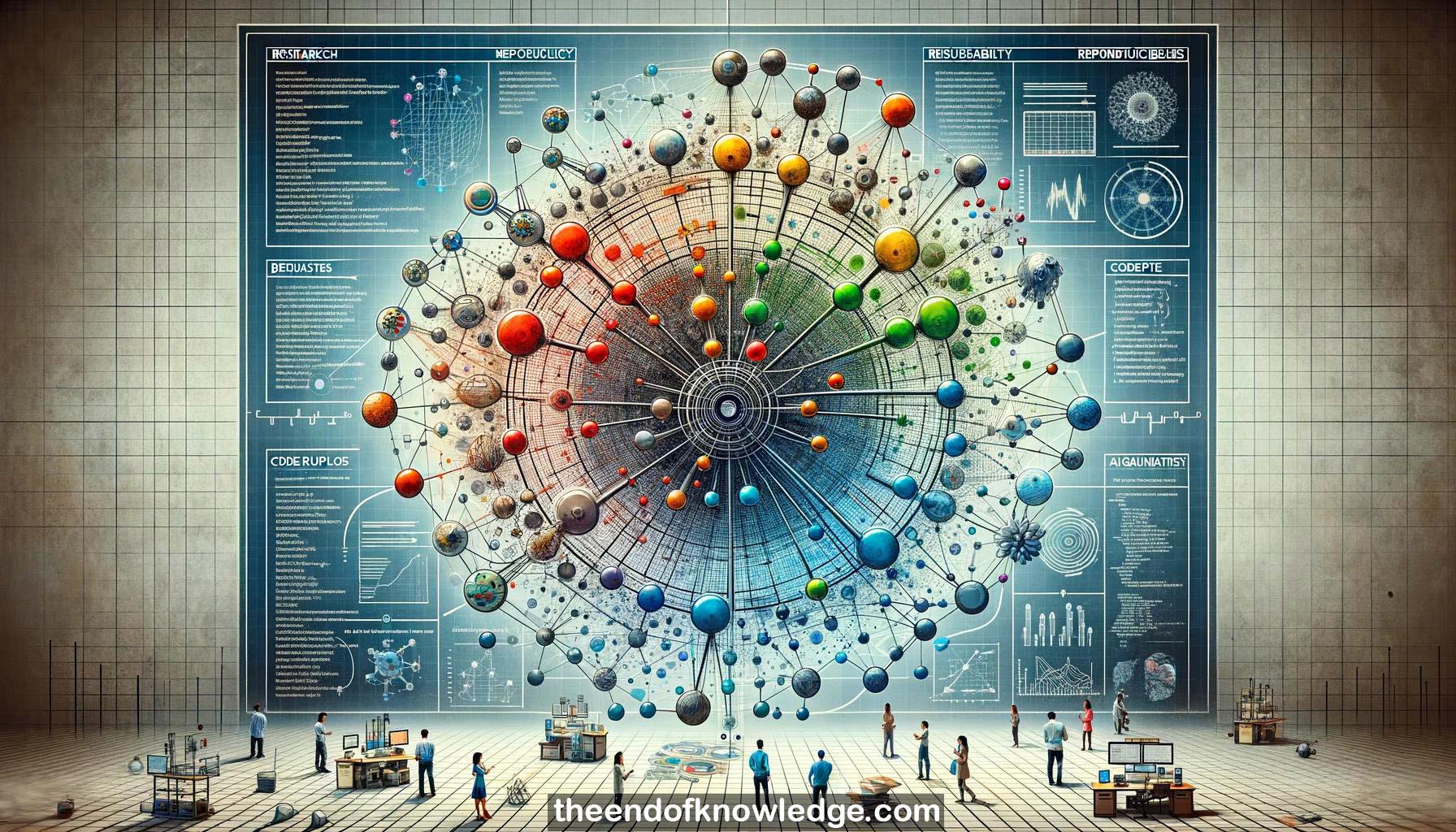

Concept Graph & Summary using Claude 3 Opus | ChatGPT-4 | Gemini Advanced | Llama 3:

Summary:

1.-Dr. Joelle Pineau gives a keynote on reproducibility, reusability and robustness in reinforcement learning (RL) at the ICLR conference.

2.-Reproducibility means being able to duplicate prior study results using the same materials the original investigator used.

3.-A Nature survey found 50-80% of scientists in various fields have failed to reproduce others' experiments, indicating a reproducibility crisis.

4.-True scholarship involves sharing the complete software, data, and instructions to generate results, not just communicating findings.

5.-RL research has exploded, growing from a community of 35 people in 2000 to 13,000 papers in 2016, which makes individual contributions hard to evaluate.

6.-AlphaGo's impressive Go results were hard for other teams to replicate because the games, code and compute resources were not accessible.

7.-RL is poised to tackle important real-world problems but needs better practices around characterizing and sharing results.

8.-Enabling reproducibility requires sharing reusable software, datasets and experimental platforms developed from the start of projects.

9.-Math conjectures like Fermat's Last Theorem took centuries to prove, consuming generations of effort that could have gone to other problems.

10.-RL baseline algorithms like TRPO, PPO and DDPG show very different performance across similar simulators, complicating comparisons.

11.-Multiple open-source implementations of the same RL algorithms yield drastically different results, even on the same tasks.

12.-Hyperparameters like network structure, reward scaling and normalization have huge and interacting effects on RL algorithm performance.

13.-The number of samples used to measure expected reward of learned RL policies is often very small in published work.

14.-Using too few samples to measure RL policy performance introduces positive bias and underestimates variance.

15.-Even when using the same code and hyperparameters, RL results vary significantly just from changing the random seed.

16.-RL can potentially tackle crucial real-world problems but needs to improve reproducibility to realize its potential impact.

17.-Facebook AI Research released ELF OpenGo, an open-source codebase and models for a strong Go playing bot to enable reproducibility.

18.-The ICLR 2018 Reproducibility Challenge involved 124 teams from 10 universities aiming to reproduce results from 95 ICLR papers.

19.-70% of Reproducibility Challenge participants believed there was a reproducibility crisis in ML before the challenge.

20.-About 55% of challenge participants reproduced some results and 33% reproduced most, but participants found the task very difficult.

21.-Many challenge participants used donated cloud compute credits to facilitate reproducing results requiring significant resources.

22.-43% of challenge participants communicated with authors, and most were moderately to highly confident in their conclusions.

23.-Over 60% of authors planned to update their ICLR submissions based on feedback from the reproducibility challenge.

24.-79% of authors said they would volunteer for future reproducibility challenges for the valuable feedback it provides.

25.-Competing interests between scientific reproducibility and commercial IP can be navigated by choosing receptive partners.

26.-Prominently labeling reproducible papers and setting up incentives could encourage authors to make work more reproducible.

27.-Reproducibility is often partial and hard to summarize as a binary outcome, so readers still need to go through the details reported by each reproduction attempt.

28.-Questioning one's own positive and negative results and carefully documenting all steps improves reproducibility.

29.-Re-running code right before paper submission catches issues from code changes made after generating results.

30.-Pineau argues reproducibility requires rigor and diligence, with no magic solutions, and should be a key focus across ML.
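Items 13 to 15 describe how small evaluation samples and single random seeds distort reported RL results. The following Python sketch is not from the talk. It uses a hypothetical `simulated_run` function, which is a toy stand-in for "train an agent with this seed, then evaluate it" with made-up return distributions. The sketch shows one common reporting practice: evaluate each seed over many episodes, then report the mean, the standard deviation and a percentile bootstrap confidence interval across seeds instead of a single or best run.

```python
import random
import statistics
from typing import Iterable, Sequence


def simulated_run(seed: int, n_eval_episodes: int) -> float:
    """Hypothetical stand-in for training and evaluating one agent.

    Returns the mean return over n_eval_episodes evaluation episodes.
    Each seed yields a policy of different quality (seed-to-seed variance),
    and each episode adds its own noise. All numbers are illustrative.
    """
    rng = random.Random(seed)
    policy_quality = rng.gauss(100.0, 15.0)  # assumed true expected return
    returns = [rng.gauss(policy_quality, 30.0) for _ in range(n_eval_episodes)]
    return statistics.fmean(returns)


def bootstrap_ci(values: Sequence[float], n_resamples: int = 2000,
                 alpha: float = 0.05, seed: int = 0) -> tuple:
    """Percentile bootstrap confidence interval for the mean of `values`."""
    rng = random.Random(seed)
    n = len(values)
    # Resample with replacement and record the mean of each resample.
    means = sorted(
        statistics.fmean(rng.choices(values, k=n)) for _ in range(n_resamples)
    )
    lo = means[int((alpha / 2) * n_resamples)]
    hi = means[int((1 - alpha / 2) * n_resamples) - 1]
    return lo, hi


def report(seeds: Iterable[int], n_eval_episodes: int) -> dict:
    """Summarize per-seed scores as mean, std dev and a 95% bootstrap CI."""
    scores = [simulated_run(s, n_eval_episodes) for s in seeds]
    return {
        "scores": scores,
        "mean": statistics.fmean(scores),
        "std": statistics.stdev(scores),
        "ci": bootstrap_ci(scores),
    }


if __name__ == "__main__":
    summary = report(seeds=range(10), n_eval_episodes=100)
    print(f"mean={summary['mean']:.1f} std={summary['std']:.1f} "
          f"95% CI=({summary['ci'][0]:.1f}, {summary['ci'][1]:.1f})")
    # Reporting only the best seed overstates typical performance.
    print(f"best single seed={max(summary['scores']):.1f}")
```

Comparing the best single seed with the mean shows how cherry-picking a run inflates a result. The seed count, episode count and distribution parameters here are illustrative assumptions, not values from the reproducibility studies discussed in the talk.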

Knowledge Vault built by David Vivancos 2024