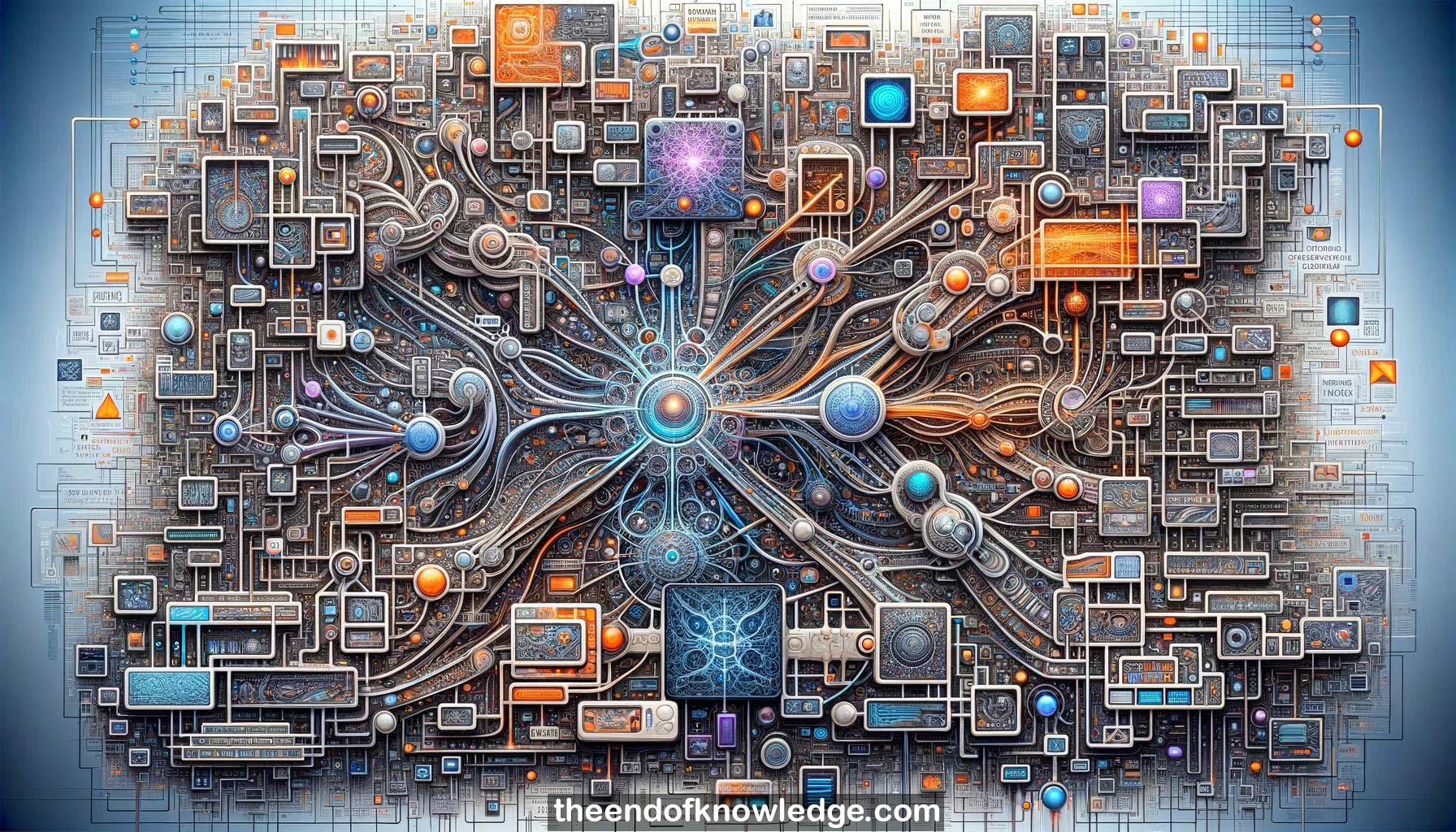

Concept Graph & Summary using Claude 3 Opus | ChatGPT-4 | Gemini Adv | Llama 3:

Summary:

1.-RNNs with multiplicative units like LSTM and GRU work well and are widely used for tasks involving sequential data.

2.-RNNs are increasingly trained end-to-end, with raw inputs fed in and raw outputs produced, reducing the need for feature engineering.

3.-RNN memory can be fragile, with new information overwriting what's stored. Computational cost also grows with memory size.

4.-External memory gives the network less fragile, more flexible storage whose capacity is decoupled from computational cost.

5.-Neural Turing Machines, Memory Networks, and attention-based Neural Machine Translation were early examples of neural networks with external memory.

6.-Differentiable Neural Computers (DNCs) are a newer example, with more sophisticated memory access mechanisms like content-based addressing and temporal linking.

7.-DNCs outperformed previous models on tasks like traversing London Underground connections, despite never seeing that structure during training.

8.-DNCs use multiple access mechanisms in combination, like content-based lookup to fill in missing information from a query.

9.-DNCs passed 18/20 bAbI tasks, but failed on basic induction for unknown reasons, highlighting areas for further research.

10.-Scaling up external memory systems has been challenging due to computational cost, but sparse access methods help efficiency.

11.-Back-propagation through time (BPTT) has drawbacks for RNNs: memory cost grows with sequence length, and weights are updated infrequently.

12.-Truncated BPTT is commonly used but misses long-range interactions. Approximations to Real-Time Recurrent Learning (RTRL) are promising but not yet practical.

13.-Synthetic gradients predict error gradients using local information, allowing decoupled training of network components without full BPTT.

14.-Synthetic gradients make truncated BPTT more efficient, enabling training on much longer sequences that were previously impractical.

15.-Synthetic gradients enable asynchronous updates and communication between modules ticking at different timescales in a hierarchical RNN.

16.-For typical RNNs, computation steps are tied to input sequence length, which is limiting for complex reasoning tasks.

17.-Adaptive Computation Time (ACT) allows the network to learn how long to "ponder" each input before producing an output.

18.-ACT separates computation time from data time, analogous to how memory networks separate computation from memory.

19.-ACT reveals informative patterns in data, like spikes in computation at uncertain points rather than just where loss is high.

20.-ACT shows that networks spend little compute on incompressible information, and more on difficult inputs or salient image regions.

21.-It's unclear if differentiable systems are fundamentally different from manually written programs, or if they can fully replicate programming abstractions.

22.-Neural programs learned so far seem much simpler than human-written programs, which rely on concepts like subroutines and recursion.

23.-Bridging implicit neural representations with symbolic programming abstractions is an open challenge; optimization alone may be insufficient.

24.-The speaker believes computers will eventually learn to program themselves, but the path to get there is uncertain.

25.-Automatic curriculum learning, or learning what to learn next, is an important challenge as we move beyond large supervised datasets.

26.-Reinforcement learning especially needs sophisticated mechanisms to guide data collection, as data is scarcer and trials are costly.

27.-Computers have memory hierarchies (registers, caches, RAM, disks) that match usage patterns, which could benefit neural memory systems.

28.-LSTM controller memory acts like registers or cache, while external read/write memory is more like RAM. Read-only memory could be added.

29.-Rewriting fast, frequently accessed neural memory may need to be penalized differently than slow, infrequently rewritten memory.

30.-Recreating the evolved memory hierarchy of modern computers may be useful in developing neural architectures with memory.
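
Item 1 mentions "multiplicative units". As a minimal sketch of what that means, here is one step of a scalar GRU in plain Python. The parameter names (`wz`, `uz`, `bz`, and so on) are hypothetical, the scalar state is a simplification of the usual vector form, and the blend `(1 - z) * h + z * h_candidate` is one common convention (some libraries swap the roles of `z` and `1 - z`).

```python
import math

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def gru_step(x, h, p):
    """One step of a scalar GRU; p maps hypothetical weight names to floats."""
    z = sigmoid(p["wz"] * x + p["uz"] * h + p["bz"])  # update gate in (0, 1)
    r = sigmoid(p["wr"] * x + p["ur"] * h + p["br"])  # reset gate in (0, 1)
    # Multiplicative interaction 1: the reset gate scales the old state
    # before it enters the candidate computation.
    h_candidate = math.tanh(p["wh"] * x + p["uh"] * (r * h) + p["bh"])
    # Multiplicative interaction 2: the update gate blends old and new state.
    return (1.0 - z) * h + z * h_candidate
```

When the update gate is near 0 the old state passes through almost unchanged, which is how gating lets the cell hold information across many steps; when it is near 1 the state is replaced by the candidate.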
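
Items 8 and 10 describe content-based lookup and sparse access. The sketch below illustrates the general idea under stated assumptions: each memory row is scored by cosine similarity to a query key, sharpened by a strength `beta`, and normalized with a softmax. The optional `top_k` argument is a simplified stand-in for sparse access (it reads only the best-matching rows) and is not the specific method used in any published system. All function and parameter names are illustrative.

```python
import math

def cosine(u, v):
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / (norm + 1e-8)  # small constant avoids division by zero

def content_read(memory, key, beta=10.0, top_k=None):
    """Return (weights, read_vector) for a content-based read over memory rows."""
    scores = [beta * cosine(row, key) for row in memory]
    slots = list(range(len(memory)))
    if top_k is not None:
        # Sparse variant: keep only the top_k best-matching rows.
        slots = sorted(slots, key=lambda i: scores[i], reverse=True)[:top_k]
    peak = max(scores[i] for i in slots)  # subtract max for numerical stability
    exp = {i: math.exp(scores[i] - peak) for i in slots}
    total = sum(exp.values())
    weights = [exp.get(i, 0.0) / total for i in range(len(memory))]
    width = len(memory[0])
    read = [sum(weights[i] * memory[i][j] for i in slots) for j in range(width)]
    return weights, read

# Each row stores a (source, destination) pair as two one-hot halves.
memory = [
    [1, 0, 0, 0, 1, 0],
    [0, 1, 0, 0, 0, 1],
    [0, 0, 1, 1, 0, 0],
]
# Partial query: the source half is known, the destination half is blank.
weights, read = content_read(memory, [0, 1, 0, 0, 0, 0])
```

Here the query matches the second row most strongly, so the read vector's second half recovers the missing destination. This is the "fill in missing information from a query" behaviour in miniature.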
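
Item 17 describes how ACT lets a network decide how long to "ponder". A minimal sketch of the halting rule, assuming the usual formulation: the network emits a halting value at each internal step, stops once the running sum reaches `1 - eps`, assigns the leftover probability (the remainder) to the final step, and outputs the weighted mean of the intermediate states. `step_fn` and `halt_fn` are hypothetical stand-ins; in a real model both are learned parts of the network.

```python
def act_ponder(step_fn, halt_fn, state, eps=0.01, max_steps=20):
    """Run internal steps on one input until the halting budget is spent.

    Returns (mean_state, steps_taken, ponder_cost).
    """
    total_halt = 0.0
    mean_state = [0.0] * len(state)
    for n in range(1, max_steps + 1):
        state = step_fn(state)
        h = halt_fn(state)  # halting value in (0, 1)
        last = (total_halt + h >= 1.0 - eps) or n == max_steps
        # The final step receives the remainder so the weights sum to 1.
        p = 1.0 - total_halt if last else h
        mean_state = [m + p * s for m, s in zip(mean_state, state)]
        if last:
            return mean_state, n, n + p  # ponder cost = steps + remainder
        total_halt += h

# Toy dynamics: each internal step adds 1 to the state.
step = lambda s: [v + 1.0 for v in s]
hard_mean, hard_steps, hard_cost = act_ponder(step, lambda s: 0.3, [0.0])
easy_mean, easy_steps, easy_cost = act_ponder(step, lambda s: 0.995, [0.0])
```

A low halting value (the "hard" case) yields more internal steps than a high one (the "easy" case). The ponder cost is what a training loss would penalize to keep computation from growing without bound.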

Knowledge Vault built by David Vivancos 2024