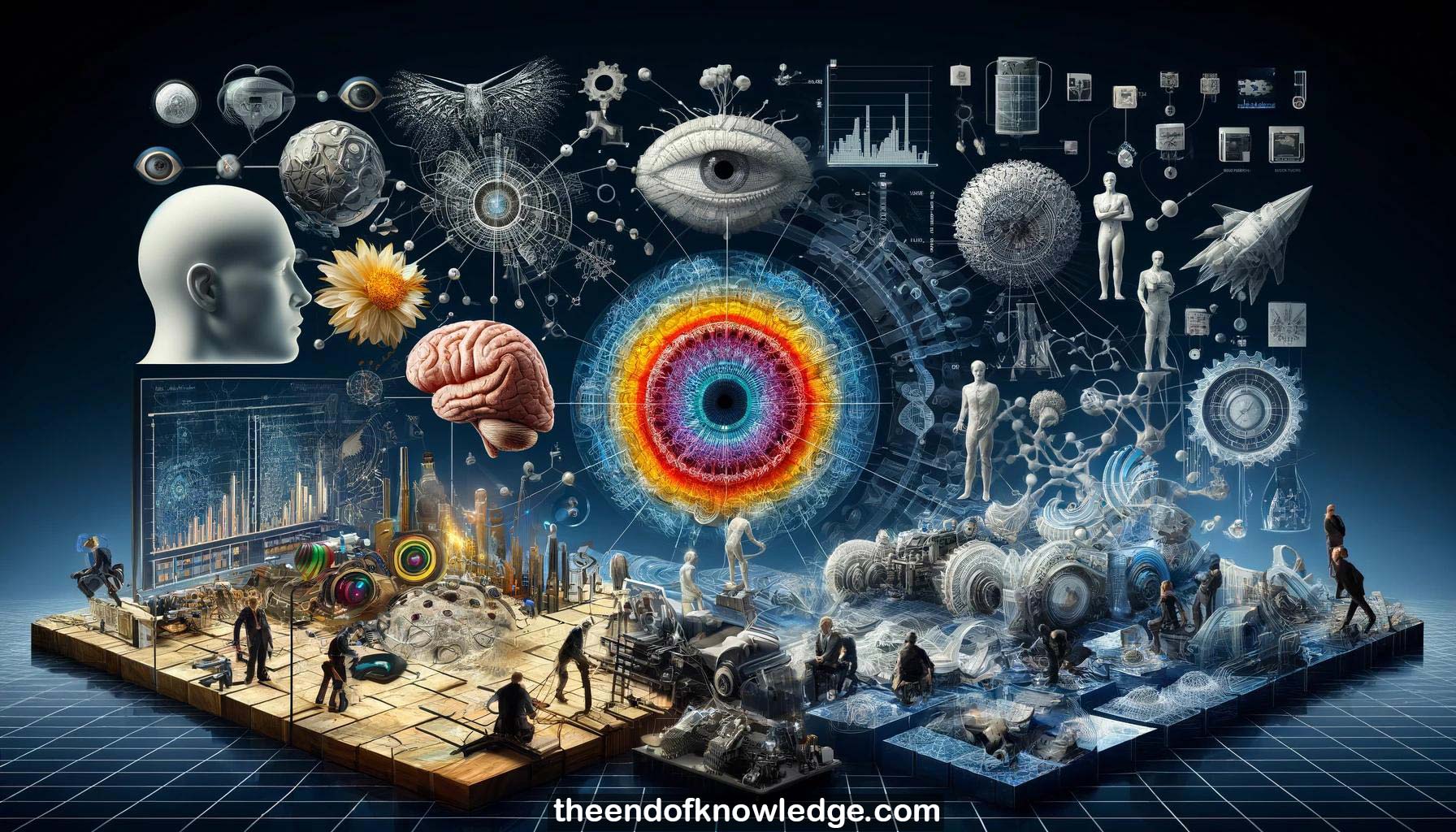

Concept Graph & Resume using Claude 3 Opus | Chat GPT4 | Gemini Adv | Llama 3:

Resume:

1.-The speaker, Eero Simoncelli, is an investigator at HHMI and professor of neuroscience, mathematics, and psychology at NYU.

2.-Simoncelli's work focuses on understanding visual representations in the brain, how they enable and limit perception, and how they can inform engineered vision systems.

3.-When we look at an image, light lands on the retina, where cells convert it into neural responses that are sent to the brain.

4.-Throughout this process, visual information is transformed, summarized and combined with other internal information like memories and intentions to perceive the image.

5.-Visual information is sent to the primary visual cortex (V1) at the back of the brain, one of the largest brain areas.

6.-In the 1950s-60s, Hubel & Wiesel discovered that neurons in V1 are selective for orientation, which became the defining characteristic of V1.

7.-V1 analyzes small local patches of the visual scene and reports the dominant orientation of each patch to the rest of the brain.
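The following rough illustration of item 7 estimates the dominant orientation of a small image patch. It uses the gradient structure tensor, a standard image-processing technique, as a stand-in for V1's oriented filters. It is not a model of V1, and the function name `dominant_orientation` is hypothetical.

```python
import math


def dominant_orientation(patch):
    """Dominant orientation (degrees in [0, 180)) of the stripes/edges in a 2D patch.

    Assumption: the gradient structure tensor stands in for a bank of V1-like
    oriented filters. Angles are measured from the column (x) axis, so 90
    means vertical stripes and 0 means horizontal stripes.
    """
    rows, cols = len(patch), len(patch[0])
    jxx = jyy = jxy = 0.0
    for y in range(1, rows - 1):
        for x in range(1, cols - 1):
            # Central-difference gradients at interior pixels
            gx = (patch[y][x + 1] - patch[y][x - 1]) / 2.0
            gy = (patch[y + 1][x] - patch[y - 1][x]) / 2.0
            jxx += gx * gx
            jyy += gy * gy
            jxy += gx * gy
    # Doubled-angle formula gives the dominant gradient direction
    grad_angle = 0.5 * math.atan2(2.0 * jxy, jxx - jyy)
    # Stripes run perpendicular to the gradient
    return math.degrees(grad_angle + math.pi / 2) % 180.0


vertical = [[math.sin(x) for x in range(9)] for y in range(9)]
horizontal = [[math.sin(y) for x in range(9)] for y in range(9)]
print(round(dominant_orientation(vertical)))    # vertical stripes
print(round(dominant_orientation(horizontal)))  # horizontal stripes
```

The tensor entries are products of two gradient components, so the estimate ignores contrast polarity. Dark-on-light and light-on-dark stripes therefore receive the same orientation.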

8.-Much of V1's output goes to the even larger area V2, but V2's functional properties have remained unclear for decades.

9.-People assumed V2 combines V1's local orientation information to find boundaries and contours that isolate objects, but few V2 cells respond selectively to such contours.

10.-Simoncelli thinks V2's purpose relates to representing visual texture: the brain devotes enormous resources to V2, so it is unlikely to simply replicate V1.

11.-The visual world is dominated by texture (small patches of roughly homogeneous structure such as grass, rock, or fur) rather than by clean boundaries.

12.-In the 1960s, Bela Julesz proposed modeling texture, both perceptually and computationally, with a limited set of image statistics that define its appearance.

13.-Julesz hypothesized that textures with matching statistics should look identical to humans; the goal was to identify the minimal set of statistics the brain represents.
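Julesz's conjecture (items 12-13) can be made concrete for binary textures. The sketch below, with hypothetical helper names, computes two statistics:

- a first-order statistic: the fraction of white pixels;
- a second-order "dipole" statistic: the probability that two pixels at a given offset are both white.

Vertical and horizontal stripes agree at first order but differ at second order. A first-order description therefore cannot tell them apart, while a second-order one can.

```python
def first_order(img):
    """Fraction of pixels equal to 1: the first-order statistic of a binary texture."""
    total = sum(len(row) for row in img)
    return sum(sum(row) for row in img) / total


def second_order(img, dx, dy):
    """Probability that two pixels offset by (dx, dy) are both 1 (a 'dipole' statistic).

    Assumption: the image wraps around at its borders, to keep the sketch short.
    """
    rows, cols = len(img), len(img[0])
    hits = sum(
        1
        for y in range(rows)
        for x in range(cols)
        if img[y][x] and img[(y + dy) % rows][(x + dx) % cols]
    )
    return hits / (rows * cols)


# Two 8x8 binary textures: vertical and horizontal stripes of period 2
vertical = [[x % 2 for x in range(8)] for _ in range(8)]
horizontal = [[y % 2 for _ in range(8)] for y in range(8)]

print(first_order(vertical), first_order(horizontal))                # → 0.5 0.5
print(second_order(vertical, 1, 0), second_order(horizontal, 1, 0))  # → 0.0 0.5
```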

14.-Working by hand in the 1960s, Julesz tested binary textures with statistics matched up to third order, found a counterexample, and abandoned the theory.

15.-In the late 1990s, Simoncelli revisited Julesz's ideas with postdoc Javier Portilla, using a multi-scale model based on primate V1 physiology.

16.-Their model computes correlations across scale, position, and orientation among the responses of V1-like filters, yielding roughly 700 texture statistics.
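Here is a toy version of the kind of measurement described in item 16, under heavy simplifying assumptions:

- Two 2x2 difference kernels at a single scale replace the model's multi-scale, multi-orientation filter bank.
- Only three kinds of statistic are computed: mean magnitude, correlation of magnitudes across orientation, and correlation across a one-pixel shift in position.

The kernels and names are hypothetical. This is not the published set of roughly 700 statistics.

```python
import math
import random

# Hypothetical 2x2 oriented difference kernels standing in for V1-like filters
KERNELS = {
    "vertical": [[-1.0, 1.0], [-1.0, 1.0]],    # responds to intensity changes along x
    "horizontal": [[-1.0, -1.0], [1.0, 1.0]],  # responds to intensity changes along y
}


def correlate_valid(img, kernel):
    """2D cross-correlation of img with kernel, keeping only fully overlapping positions."""
    kh, kw = len(kernel), len(kernel[0])
    return [
        [
            sum(kernel[i][j] * img[y + i][x + j] for i in range(kh) for j in range(kw))
            for x in range(len(img[0]) - kw + 1)
        ]
        for y in range(len(img) - kh + 1)
    ]


def pearson(a, b):
    """Pearson correlation between two equally sized 2D maps."""
    xs = [v for row in a for v in row]
    ys = [v for row in b for v in row]
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    vx = sum((x - mx) ** 2 for x in xs)
    vy = sum((y - my) ** 2 for y in ys)
    return cov / math.sqrt(vx * vy)


def texture_stats(img):
    """Toy statistics: mean response magnitude per orientation, correlation of
    magnitudes across orientation, and each channel's correlation with itself
    shifted by one pixel (correlation across position). No scale channels."""
    mags = {
        name: [[abs(v) for v in row] for row in correlate_valid(img, k)]
        for name, k in KERNELS.items()
    }
    stats = {}
    for name, m in mags.items():
        flat = [v for row in m for v in row]
        stats["mean_" + name] = sum(flat) / len(flat)
        stats["shift1_" + name] = pearson([row[:-1] for row in m], [row[1:] for row in m])
    stats["cross_orientation"] = pearson(mags["vertical"], mags["horizontal"])
    return stats


random.seed(0)
noise = [[random.random() for _ in range(16)] for _ in range(16)]
for key, value in texture_stats(noise).items():
    print(f"{key}: {value:.3f}")
```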

17.-Synthesizing textures that match the model's statistics produces images that appear nearly identical to the original, even though the statistics discard most of the image's information.
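Synthesis by statistics matching (item 17) usually starts from random noise and adjusts it until its statistics match those measured on the original. The sketch below shows only the simplest such adjustment: imposing the original's pixel histogram on noise (standard histogram matching). A full texture synthesis would iterate adjustments for every statistic in the model, which this sketch does not attempt. The data and function name are hypothetical.

```python
import random


def impose_histogram(noise, target):
    """Rearrange target's values so they follow noise's rank order.

    The result has exactly the same marginal histogram (first-order
    statistics) as target, but its spatial layout comes from noise.
    """
    order = sorted(range(len(noise)), key=lambda i: noise[i])
    values = sorted(target)
    out = [0.0] * len(noise)
    for rank, idx in enumerate(order):
        out[idx] = values[rank]
    return out


# Hypothetical "original" texture, flattened to 1D for brevity
original = [0.1, 0.9, 0.4, 0.4, 0.8, 0.2, 0.7, 0.3]
random.seed(1)
noise = [random.gauss(0.0, 1.0) for _ in original]
synthesized = impose_histogram(noise, original)
print(sorted(synthesized) == sorted(original))  # → True
```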

18.-This statistical matching works well for a wide variety of visual textures; explicit representation of features/objects is not needed.

19.-Analogous syntheses from deep nets produce unrecognizable "fooling" images, while this V1-based model maintains some original structure in the synthesized image.

20.-With postdoc Josh McDermott, an analogous auditory texture model captured realistic perception of sound textures using biologically-motivated filters and statistics.

21.-Physiologically-motivated choices like cochlear compression and logarithmic filter spacing noticeably improve perceptual realism of synthesized sounds vs simplified versions.
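To make the two physiological choices in item 21 concrete, the sketch below contrasts logarithmic with linear spacing of filter center frequencies. It also applies a power-law compression to an amplitude envelope. The frequency range, the number of filters, and the exponent 0.3 are illustrative assumptions, not parameters taken from the talk.

```python
def log_spaced_centers(f_low, f_high, n):
    """n filter center frequencies (Hz) spaced evenly on a logarithmic axis."""
    ratio = (f_high / f_low) ** (1.0 / (n - 1))
    return [f_low * ratio ** k for k in range(n)]


def linear_spaced_centers(f_low, f_high, n):
    """The 'simplified' alternative: n centers spaced evenly in Hz."""
    step = (f_high - f_low) / (n - 1)
    return [f_low + step * k for k in range(n)]


def compress(envelope, exponent=0.3):
    """Power-law amplitude compression, a stand-in for cochlear compression.

    Assumption: exponent 0.3 is an illustrative value, not taken from the talk.
    """
    return [v ** exponent for v in envelope]


log_c = log_spaced_centers(20.0, 10000.0, 30)
lin_c = linear_spaced_centers(20.0, 10000.0, 30)
# Log spacing devotes many more filters to low frequencies
print(sum(f < 1000 for f in log_c), sum(f < 1000 for f in lin_c))
# Compression shrinks a 1000:1 amplitude range to under 10:1
print(compress([1.0, 1000.0]))
```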

22.-Returning to vision, globally averaging statistics fails for inhomogeneous images, but averaging in smoothly overlapping regions handles arbitrary scenes.

23.-Overlapping pooling regions were chosen to match primate V2 receptive field sizes that grow linearly with distance from the gaze center.
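Items 22-23 describe smoothly overlapping pooling regions whose size grows linearly with eccentricity. One simple way to get both properties is to space cos² windows evenly on a log-eccentricity axis, sketched below in one dimension:

- Even spacing in log units makes each window's width proportional to its center eccentricity.
- Adjacent cos² windows sum to one.

The window shape, the spacing value, and the 1D simplification (no polar-angle direction) are assumptions.

```python
import math

SPACING = 0.4  # hypothetical window spacing in log-eccentricity units (not a fitted value)
CENTERS = [k * SPACING for k in range(10)]  # log-ecc centers: 1 deg up to about 37 deg


def pooling_weights(ecc_deg):
    """cos^2 window weights at a given eccentricity (degrees).

    Windows are evenly spaced in log-eccentricity, so each window's extent in
    degrees scales with its center eccentricity. Neighbouring windows overlap
    so that the weights sum to 1 between the first and last centers.
    """
    x = math.log(ecc_deg)
    weights = []
    for c in CENTERS:
        t = (x - c) / SPACING
        weights.append(math.cos(math.pi * t / 2) ** 2 if abs(t) < 1 else 0.0)
    return weights


def window_extent(c):
    """Eccentricity range (degrees) where the window centered at log-ecc c is nonzero."""
    return math.exp(c - SPACING), math.exp(c + SPACING)


for c in CENTERS[:3]:
    lo, hi = window_extent(c)
    # Width divided by center eccentricity is the same for every window
    print(round((hi - lo) / math.exp(c), 3))
print(round(sum(pooling_weights(5.0)), 6))  # → 1.0
```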

24.-Synthesizing images with locally matching texture statistics in these V2-like pooling regions produces images indistinguishable from the original in peripheral vision.

25.-Large distortions in the synthesized images are invisible when not directly viewed: they lie in the "perceptual null space".
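The "perceptual null space" of item 25 borrows a linear-algebra idea: changes to the input that leave a measurement unchanged lie in that measurement's null space. The sketch below uses plain regional averages as the measurement, an assumption made for illustration. The texture model's statistics are nonlinear, so its null space is a set of images with equal statistics rather than a linear subspace. Function names and data are hypothetical.

```python
def pooled_means(signal, region):
    """Average a 1D signal over non-overlapping regions: a crude linear 'measurement'."""
    return [sum(signal[i:i + region]) / region for i in range(0, len(signal), region)]


def null_perturbation(length, region, amplitude):
    """Alternating +/- pattern that sums to zero inside every region (region must be even)."""
    pattern = [amplitude if k % 2 == 0 else -amplitude for k in range(region)]
    return pattern * (length // region)


signal = [3, 1, 4, 1, 5, 9, 2, 6, 5, 3, 5, 8]
perturbed = [s + p for s, p in zip(signal, null_perturbation(len(signal), 4, 3))]
print(perturbed != signal)                                    # → True (the signal changed...)
print(pooled_means(perturbed, 4) == pooled_means(signal, 4))  # → True (...the measurement did not)
```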

26.-This relates to the discrete eye movements (saccades) humans make when reading text: each jump moves the eyes by about one pooling region's width.

27.-Understanding texture representation could allow optimizing typography so text information is more visible and requires fewer, larger eye movements to read.

28.-Repeated hierarchical "filtering and statistics" computations, as seen in deep nets, might be sufficient to explain much of biological vision.
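Item 28's "filtering and statistics" cascade can be sketched in one dimension. Each stage filters its input, rectifies the result, and averages it locally; the next stage repeats the process on those local statistics. Using the same kernel at every stage, and squaring as the rectifier, are simplifying assumptions. Deep networks typically learn different filters per stage and often use other nonlinearities such as ReLU.

```python
import math


def filter_rectify_pool(signal, kernel, pool):
    """One stage: valid-mode filtering, squaring (rectification), then block averaging."""
    n = len(kernel)
    filtered = [sum(kernel[j] * signal[i + j] for j in range(n))
                for i in range(len(signal) - n + 1)]
    energy = [v * v for v in filtered]
    return [sum(energy[i:i + pool]) / pool
            for i in range(0, len(energy) - pool + 1, pool)]


def cascade(signal, kernel, pool, stages):
    """Repeat the same filter-rectify-pool stage; each output summarizes the previous one."""
    for _ in range(stages):
        signal = filter_rectify_pool(signal, kernel, pool)
    return signal


wave = [math.sin(0.7 * k) + 0.5 * math.sin(2.3 * k) for k in range(64)]
print(len(cascade(wave, [1.0, -1.0], 2, 3)))  # → 7
```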

29.-Lettvin proposed in 1976 that texture representation, suitably redefined, could provide the primitive elements from which representations of visual form are constructed.

30.-Open challenges include learning these texture models unsupervised, invertible generative formulations, and augmenting them with local gain control for perceptual quality metrics.
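Local gain control (item 30) is commonly modeled as divisive normalization, where each response is divided by a pooled measure of its neighbours' activity. The sketch below assumes squared responses, uniform pooling weights, and a hypothetical constant `sigma`. It shows one standard form of the computation, not the specific formulation from the talk.

```python
def divisive_normalization(responses, sigma=1.0, weights=None):
    """Local gain control: r_i = x_i^2 / (sigma^2 + sum_j w_ij * x_j^2).

    Assumptions: uniform pooling weights by default and a hypothetical
    semi-saturation constant sigma.
    """
    n = len(responses)
    if weights is None:
        weights = [[1.0 / n] * n for _ in range(n)]
    energy = [x * x for x in responses]
    return [
        energy[i] / (sigma ** 2 + sum(weights[i][j] * energy[j] for j in range(n)))
        for i in range(n)
    ]


weak = divisive_normalization([1.0, 2.0, 3.0])
strong = divisive_normalization([100.0, 200.0, 300.0])
# Scaling the input by 100 multiplies energies by 10^4, but outputs saturate
print([round(v, 3) for v in weak])
print([round(v, 3) for v in strong])
```

With uniform weights every output shares the same denominator. Ratios between responses are therefore preserved (the third output stays 9 times the first), while the overall level saturates as input contrast grows.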

Knowledge Vault built by David Vivancos 2024