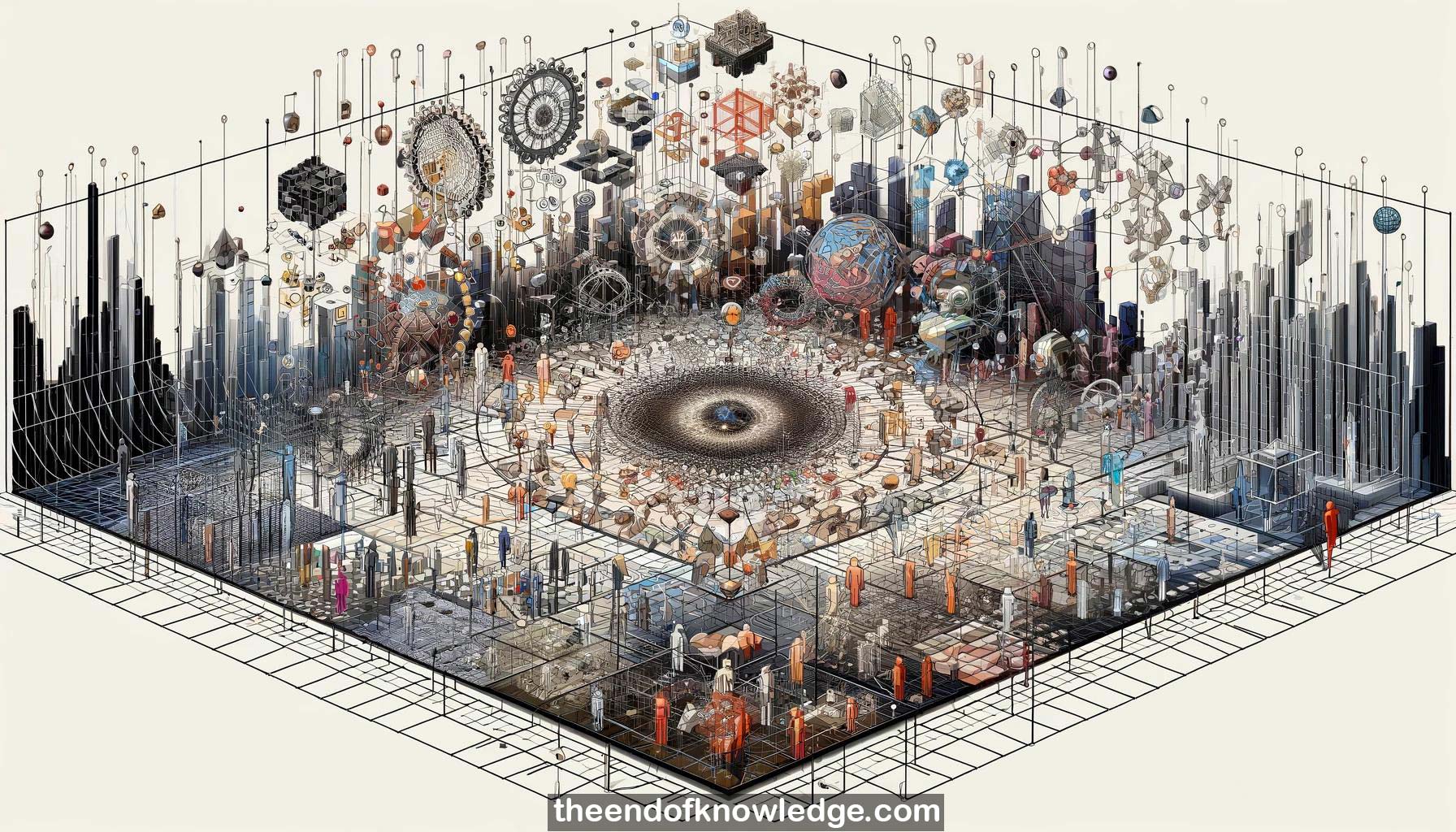

Concept Graph & Summary using Claude 3 Opus | Chat GPT4 | Gemini Adv | Llama 3:

Summary:

1.-The talk presents work in progress on using symmetry group theory as a foundation for machine learning, especially representation learning.

2.-In geometry, an object's symmetry is a transformation mapping the object to itself. Symmetries can be composed and satisfy group axioms.
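As a small illustration of the group axioms mentioned above, the following sketch builds the symmetries of a square from two generators and checks closure, identity, inverses, and associativity. The corner labelling and all function names are assumptions made for this example, not something taken from the talk.

```python
from itertools import product

# Corners of a square labelled 0..3 counter-clockwise. A symmetry is a tuple p
# in which corner i is sent to corner p[i]. (Labelling is an assumption.)
IDENTITY = (0, 1, 2, 3)
ROTATE_90 = (1, 2, 3, 0)  # quarter turn
REFLECT = (1, 0, 3, 2)    # mirror that swaps corners 0<->1 and 2<->3


def compose(p, q):
    """Return the symmetry 'apply q first, then p'."""
    return tuple(p[q[i]] for i in range(len(q)))


def inverse(p):
    """Return the symmetry that undoes p."""
    inv = [0] * len(p)
    for i, image in enumerate(p):
        inv[image] = i
    return tuple(inv)


def generate(generators):
    """Close a set of generators under composition, starting from the identity."""
    group = {IDENTITY}
    frontier = [IDENTITY]
    while frontier:
        g = frontier.pop()
        for h in generators:
            gh = compose(g, h)
            if gh not in group:
                group.add(gh)
                frontier.append(gh)
    return group


def satisfies_group_axioms(group):
    """Check closure, identity, inverses and associativity by brute force."""
    closed = all(compose(a, b) in group for a, b in product(group, repeat=2))
    has_identity = IDENTITY in group
    has_inverses = all(inverse(a) in group for a in group)
    associative = all(
        compose(compose(a, b), c) == compose(a, compose(b, c))
        for a, b, c in product(group, repeat=3)
    )
    return closed and has_identity and has_inverses and associative


GROUP = generate([ROTATE_90, REFLECT])
print(len(GROUP), satisfies_group_axioms(GROUP))
```

Two generators are enough to reach all eight symmetries of the square, which is the same idea as the "minimal generating set" that appears later in the summary.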

3.-Continuous symmetry groups are called Lie groups. An example is the group of symmetries of Euclidean space, which preserve distances between points.
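A minimal sketch of the distance-preserving property, assuming 2D points and a rigid motion made of a rotation followed by a translation. The continuous `angle` and `shift` parameters are what make this family a Lie group rather than a finite one; the function names are hypothetical.

```python
import math


def rigid_motion(angle, shift):
    """Return a map on 2D points: rotate by `angle` radians, then translate by `shift`."""
    c, s = math.cos(angle), math.sin(angle)
    dx, dy = shift

    def apply(point):
        x, y = point
        return (c * x - s * y + dx, s * x + c * y + dy)

    return apply


def distance(p, q):
    """Euclidean distance between two 2D points."""
    return math.hypot(p[0] - q[0], p[1] - q[1])


points = [(0.0, 0.0), (3.0, 4.0), (-1.5, 2.0)]
move = rigid_motion(angle=0.7, shift=(2.0, -1.0))
moved = [move(p) for p in points]

# Every pairwise distance should be unchanged (up to floating-point error).
distances_preserved = all(
    math.isclose(distance(points[i], points[j]), distance(moved[i], moved[j]),
                 rel_tol=1e-9, abs_tol=1e-12)
    for i in range(len(points))
    for j in range(i + 1, len(points))
)
print(distances_preserved)
```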

4.-A symmetry of a function is an input change that doesn't change the output. This is the key notion for learning representations.
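To make the definition concrete, here is a hedged sketch with a toy function (sum of squares, chosen only for illustration). It tests whether a candidate input change leaves the output unchanged on a handful of sample inputs; passing on samples is evidence of a symmetry, not a proof.

```python
def energy(values):
    """Toy target function: sum of squared entries."""
    return sum(v * v for v in values)


def flip_signs(values):
    """Candidate symmetry: negate every entry."""
    return [-v for v in values]


def reverse(values):
    """Candidate symmetry: reverse the order of the entries."""
    return list(reversed(values))


def double(values):
    """Candidate that is NOT a symmetry of `energy`: it rescales the input."""
    return [2 * v for v in values]


def is_symmetry(f, transform, samples):
    """True if `transform` leaves f unchanged on every sample input."""
    return all(f(transform(x)) == f(x) for x in samples)


samples = [[1, 2, 3], [0, -4, 5], [7, 7, -2]]
for candidate in (flip_signs, reverse, double):
    print(candidate.__name__, is_symmetry(energy, candidate, samples))
```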

5.-Symmetry is a powerful tool in mathematics, physics, search and optimization, model tracking in vision, and lifted probabilistic inference, but it has so far been underused in machine learning.

6.-A symmetry of a classifier is an input representation change preserving the class. Important variations are the targets; unimportant ones are their symmetries.

7.-Learning and composing a target function's symmetries to trivialize it is the goal. This enables learning good representations with less data.
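The sample-complexity argument can be sketched with a toy problem. Assume, purely for illustration, that the target class of a bit string is invariant under cyclic shifts and that this symmetry is already known rather than learned. Mapping every input to one representative of its orbit makes the target trivial: a lookup table with one entry per orbit suffices. `canonical` and `OrbitLookupClassifier` are hypothetical names.

```python
def canonical(bits):
    """Orbit representative under cyclic shifts: the lexicographically smallest rotation."""
    rotations = [bits[i:] + bits[:i] for i in range(len(bits))]
    return min(rotations)


class OrbitLookupClassifier:
    """Memorises one label per orbit instead of one label per input."""

    def __init__(self):
        self.labels = {}

    def fit(self, examples):
        for bits, label in examples:
            self.labels[canonical(bits)] = label
        return self

    def predict(self, bits):
        # Returns None for an orbit that was never seen during training.
        return self.labels.get(canonical(bits))


# Two labelled examples cover two whole orbits (six distinct strings).
train = [("1100", "adjacent"), ("1010", "alternating")]
clf = OrbitLookupClassifier().fit(train)

print(clf.predict("0110"))  # same orbit as "1100"
print(clf.predict("0101"))  # same orbit as "1010"
print(clf.predict("1111"))  # unseen orbit
```

Without the canonicalisation step the same learner would need a separate labelled example for every rotation of every string.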

8.-Benefits: reduces sample complexity, generalizes algorithms, leads to formal results, enables deep learning via composition, applies across learning paradigms.

9.-ConvNets are a limited case translating a feature map over an image. Replacing translation by arbitrary symmetry groups generalizes them significantly.
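The generalization from translations to other groups can be sketched in one dimension. The code below computes a template's response to every transformed copy of a signal and pools with a global max. With cyclic translations this resembles a convolutional feature map followed by pooling; swapping in a larger group (translations plus mirroring, chosen here only as an example) makes the pooled feature invariant to more changes. Global pooling and the specific signals are simplifying assumptions.

```python
def shift(signal, k):
    """Cyclically shift a list by k positions."""
    k %= len(signal)
    return signal[k:] + signal[:k]


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def group_feature_map(signal, template, transforms):
    """Response of one template to every transformed copy of the signal."""
    return [dot(t(signal), template) for t in transforms]


n = 6
# Translation group: what a (cyclic) convolution slides over.
translations = [lambda s, k=k: shift(s, k) for k in range(n)]
# A larger group: translations combined with mirroring.
dihedral = translations + [lambda s, k=k: shift(s[::-1], k) for k in range(n)]

template = [1, 2, 3, 0, 0, 0]
signal = [0, 0, 1, 2, 3, 0]   # a shifted copy of the template
mirrored = signal[::-1]       # the same pattern, mirrored

conv_signal = max(group_feature_map(signal, template, translations))
conv_mirrored = max(group_feature_map(mirrored, template, translations))
sym_signal = max(group_feature_map(signal, template, dihedral))
sym_mirrored = max(group_feature_map(mirrored, template, dihedral))

print(conv_signal, conv_mirrored)  # translation pooling reacts to mirroring
print(sym_signal, sym_mirrored)    # pooling over the larger group does not
```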

10.-The affine group (invertible linear maps combined with translations) is a natural next step after translation alone; it includes rotations, reflections, and scaling. This yields Deep Affine Networks.

11.-One Deep Affine Network layer applies every affine transform to the image, computes features on each, and pools over local affine neighborhoods.
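A rough sketch of the three steps in such a layer (transform, compute features, pool over local neighborhoods in the group), under several simplifying assumptions that are not from the talk: the "image" is a small 2D point set, only a sampled grid of rotation angles and uniform scales is used instead of every affine transform, translations are omitted, and the feature is a soft template match. All names are hypothetical.

```python
import math


def affine(angle, scale, shift=(0.0, 0.0)):
    """2D affine map: rotate by `angle`, scale uniformly, then translate."""
    c, s = scale * math.cos(angle), scale * math.sin(angle)
    return lambda p: (c * p[0] - s * p[1] + shift[0],
                      s * p[0] + c * p[1] + shift[1])


def feature(points, template, width=0.25):
    """Soft match score in [0, 1] between a point set and a template point set."""
    score = 0.0
    for t in template:
        best = min((p[0] - t[0]) ** 2 + (p[1] - t[1]) ** 2 for p in points)
        score += math.exp(-best / (2 * width ** 2))
    return score / len(template)


def affine_layer(points, template, angles, scales, pool_radius=1):
    """Return (raw, pooled) feature maps indexed by [angle index][scale index]."""
    # Steps 1 and 2: apply each sampled transform and compute the feature.
    raw = [[feature([affine(a, s)(p) for p in points], template)
            for s in scales] for a in angles]
    # Step 3: max-pool over a local neighborhood in (angle, scale) index space.
    # Angles wrap around, assuming they sample the full circle uniformly.
    pooled = []
    for i in range(len(angles)):
        row = []
        for j in range(len(scales)):
            lo, hi = max(0, j - pool_radius), min(len(scales), j + pool_radius + 1)
            window = [raw[(i + di) % len(angles)][jj]
                      for di in range(-pool_radius, pool_radius + 1)
                      for jj in range(lo, hi)]
            row.append(max(window))
        pooled.append(row)
    return raw, pooled


template = [(0.0, 0.0), (1.0, 0.0), (2.0, 0.0), (0.0, 1.0)]  # an L shape
# Input: the template rotated by 90 degrees and enlarged by a factor of 2.
image = [affine(math.pi / 2, 2.0)(p) for p in template]

angles = [k * math.pi / 4 for k in range(8)]
scales = [0.5, 1.0, 2.0]
raw, pooled = affine_layer(image, template, angles, scales)

print(round(raw[0][1], 3))     # untransformed input: weak match
print(round(raw[6][0], 3))     # inverse transform (270 degrees, scale 0.5): strong match
print(round(pooled[5][1], 3))  # a neighboring cell inherits the strong response
```

Pooling spreads the strong response to nearby transforms, so the layer's output changes little under small affine distortions of the input.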

12.-To stay computationally feasible, features are computed only at a set of control points and interpolated in between, with nearest-neighbor search used to find the relevant control points. Ball trees make this search efficient.
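The interpolation idea can be sketched as follows. Each control point pairs transform parameters with a feature value already computed there; a query between control points is estimated from its nearest neighbors. Two substitutions are made for brevity and should be read as assumptions: a brute-force scan stands in for the ball tree, and inverse-distance weighting stands in for whatever interpolation scheme the original work uses.

```python
import math


def nearest(control_points, query, k):
    """Brute-force k-nearest-neighbour search (a ball tree would replace this scan)."""
    ranked = sorted(control_points, key=lambda cp: math.dist(cp[0], query))
    return ranked[:k]


def interpolate_feature(control_points, query, k=2, eps=1e-12):
    """Inverse-distance-weighted estimate of the feature value at `query`."""
    weights, values = [], []
    for params, value in nearest(control_points, query, k):
        d = math.dist(params, query)
        if d < eps:
            return value  # query coincides with a control point
        weights.append(1.0 / d)
        values.append(value)
    return sum(w * v for w, v in zip(weights, values)) / sum(weights)


# Hypothetical control points: ((angle, scale), feature value computed there).
control = [((0.0, 1.0), 0.2), ((1.0, 1.0), 0.8), ((2.0, 1.0), 0.4)]

halfway = interpolate_feature(control, (0.5, 1.0))
exact = interpolate_feature(control, (2.0, 1.0))
print(halfway, exact)
```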

13.-On rotated MNIST digits, 1-layer Deep Affine Nets greatly outperform 1-layer ConvNets with less training data, because they handle rotations directly rather than approximately.

14.-Next steps: more layers, richer distortions, real-world images; develop sum-product nets; combine with capsule theory for part-whole composition.

15.-Semantic parsing maps sentences to logical formulas. Symmetries (synonyms, paraphrases, active/passive voice, etc.) preserve a sentence's meaning.

16.-Sentence orbits under syntactic transformations correspond one-to-one with meanings, which avoids representing meanings explicitly and makes it easier to learn from sentence pairs.

17.-A semantic parser finds the most probable sentence orbit (meaning). New meanings create new orbits. This can be done efficiently using orbit composition structure.

18.-Learning discovers the symmetries (semantic parser) from sentence pairs. The goal is a minimal generating set to efficiently cover complex symmetries.
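The orbit view of meaning in points 15 to 18 can be sketched with a toy generating set. Here the generators are hand-written word-level synonym swaps, assumed to preserve meaning; in the approach described above they would be learned from sentence pairs, and the parser would pick the most probable orbit rather than a deterministic one. The orbit of a sentence is everything reachable by composing generators, and its smallest member serves as a stand-in identifier for the meaning.

```python
from collections import deque

# Hypothetical generators: word-level rewrites assumed to preserve meaning.
GENERATORS = [("big", "large"), ("dog", "hound"), ("quickly", "fast")]


def neighbours(sentence, generators):
    """Sentences reachable by applying one generator (in either direction) once."""
    words = sentence.split()
    for a, b in generators:
        for src, dst in ((a, b), (b, a)):
            for i, word in enumerate(words):
                if word == src:
                    yield " ".join(words[:i] + [dst] + words[i + 1:])


def orbit(sentence, generators):
    """All sentences reachable by composing generators (breadth-first closure)."""
    seen = {sentence}
    queue = deque([sentence])
    while queue:
        current = queue.popleft()
        for nxt in neighbours(current, generators):
            if nxt not in seen:
                seen.add(nxt)
                queue.append(nxt)
    return seen


def meaning_id(sentence, generators):
    """Canonical orbit member, used in place of an explicit meaning representation."""
    return min(orbit(sentence, generators))


a = "the big dog runs"
b = "the large hound runs"  # needs two composed generators to reach from `a`
c = "the cat runs"          # no generator applies, so it forms a new orbit

print(sorted(orbit(a, GENERATORS)))
print(meaning_id(a, GENERATORS) == meaning_id(b, GENERATORS))
print(meaning_id(a, GENERATORS) == meaning_id(c, GENERATORS))
```

Three generators cover every combination of swaps, which is why a small generating set can account for many paraphrases that never appear together in the training pairs.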

19.-Logical inference rules are symmetries of knowledge bases, so reasoning may also fit into this symmetry-based semantic parsing framework.

20.-Symmetry group theory is foundational for machine learning alongside probability, logic, optimization. Combining them unleashes its potential, as shown in these initial examples.

21.-Lack of paraphrase data is an issue, but machine translation corpora could help. Symmetry learning also applies naturally to vision-language connections.

22.-Symmetry algebra is general, not requiring perfect symmetries in the domain. It relates to recent work comparing deep vs shallow networks.

23.-Strong symmetries make data points equivalent, abstracting away distinguishing information permanently, which is often desirable for clearly separating target classes.

24.-Real-world problems often require "fuzzy" invariance, preserving discrimination for the end task. This can be handled via probabilistic orbits or discrimination optimization.

25.-In summary, symmetry group theory provides a powerful foundation for representation learning and extends naturally to many areas, with rich potential.

Knowledge Vault built by David Vivancos 2024