Lex Fridman Podcast #434 - 20/06/2024

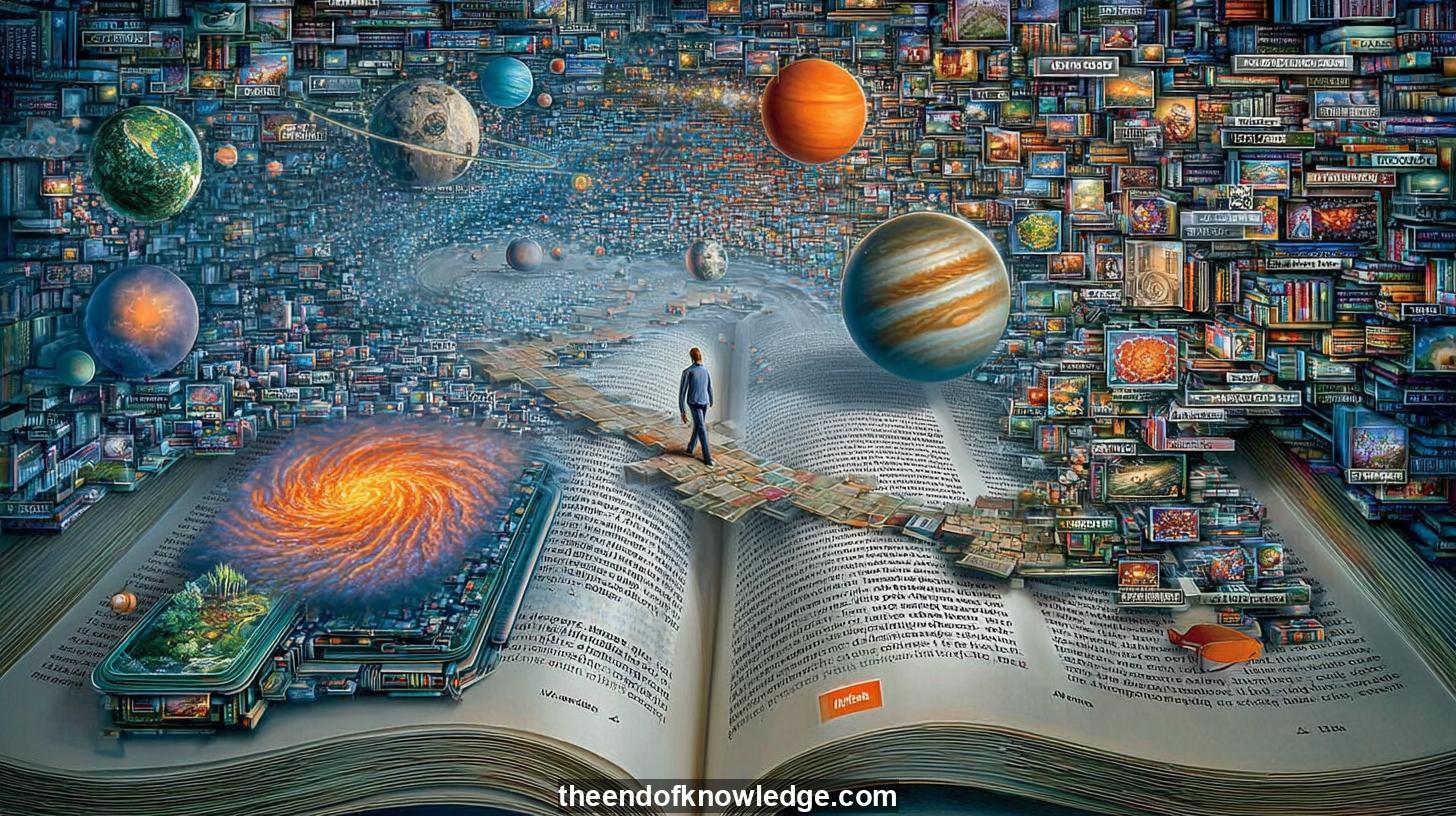

Concept Graph using Moonshot Kimi K2:

Summary:

Aravind Srinivas recounts how Perplexity began as an experiment to make large language models reliable for everyday questions by forcing them to cite every claim, much like an academic paper. The insight came when the founding team, lacking insurance knowledge, realized that raw GPT answers could mislead; anchoring each sentence to web sources reduced hallucinations and turned chatbots into trustworthy research companions. This marriage of search retrieval and disciplined generation became the product’s core, evolving from a Slack bot to a public answer engine that prioritizes verifiable knowledge over opinion.

30 Key Ideas:

1.- Perplexity forces LLMs to cite every sentence, as an academic paper would, which reduces hallucinations.

2.- Search retrieves relevant web snippets, feeding an LLM that writes concise, footnoted answers.

3.- The product becomes a knowledge-discovery engine, surfacing follow-up questions to deepen curiosity journeys.

4.- Early Slack bot revealed GPT inaccuracies, prompting a citation-based approach modeled on Wikipedia.

5.- Founders applied peer-review discipline: every claim must be traceable to multiple reputable sources.

6.- Ad-driven models incentivize clicks over clarity; Perplexity explores subscription and subtle, relevant ads.

7.- Google’s AdWords auction maximizes revenue; lower-margin innovations are avoided, creating opportunity gaps.

8.- Perplexity avoids “10 blue links,” betting direct answers will improve exponentially with better models.

9.- Latency obsession mirrors Google’s early days; P90 metrics and kernel-level GPU tweaks keep responses snappy.

10.- Open-source LLMs enable experimentation on small reasoning models, challenging giant pre-trained paradigms.

11.- Chain-of-thought bootstrapping lets small models self-improve by generating and refining rationales.

12.- Future breakthroughs may decouple reasoning from memorized facts, enabling lighter yet powerful inference loops.

13.- Human curiosity remains unmatched; AI can research deeply but still relies on people to ask novel questions.

14.- Massive inference compute—million-GPU clusters—will unlock week-long internal reasoning for paradigm-shifting answers.

15.- Controlling who can afford such compute becomes more critical than restricting model weights.

16.- Perplexity Pages converts private Q&A sessions into shareable Wikipedia-style articles, scaling collective insight.

17.- Indexing blends crawling, headless rendering, BM25, embeddings, and recency signals for nuanced ranking.

18.- Retrieval-augmented generation grounds answers solely in retrieved text, refusing unsupported speculation.

19.- Hallucinations arise from stale snippets, model misinterpretation, or irrelevant document inclusion.

20.- Tail-latency tracking and TensorRT-LLM kernels optimize throughput without sacrificing user experience.

21.- Model-agnostic architecture swaps GPT-4, Claude, or Sonar to always serve the best available answer.

22.- Srinivas advises founders to start from genuine obsession, not market fashion; passion sustains them through hardship.

23.- Early Twitter search demo showcased relational queries, impressing investors and recruits with fresh possibilities.

24.- Users loved self-search summaries; viral screenshots propelled initial growth beyond hacky Twitter indexing.

25.- Minimalist UI balances novice clarity and power-user shortcuts, learning from Google’s clean early interface.

26.- Related-question suggestions combat the universal struggle of translating curiosity into articulate queries.

27.- Personalized discovery feeds aim to surface daily insights without amplifying social drama or engagement bait.

28.- Adjustable depth settings let beginners or experts tailor explanations, democratizing complex topic access.

29.- Long-context windows promise personal file search and memory, yet risk instruction-following degradation.

30.- Vision extends to AI coaches that foster human flourishing, resisting dystopias of fake emotional bonds.
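
Ideas 2, 17, and 18 describe a pipeline: rank web snippets with blended signals, then have the model answer only from the retrieved text with numbered citations. The sketch below is a toy illustration of that flow, not Perplexity's implementation. The `Snippet` type, the term-overlap score (a crude stand-in for BM25 and embeddings), the 30-day recency half-life, the 0.3 recency weight, and the prompt wording are all assumptions made for this example.

```python
from dataclasses import dataclass


@dataclass
class Snippet:
    url: str
    text: str
    age_days: float  # hypothetical recency signal: days since the page was updated


def keyword_score(query: str, text: str) -> float:
    """Fraction of query terms found in the text (crude stand-in for BM25)."""
    q_terms = set(query.lower().split())
    t_terms = set(text.lower().split())
    return len(q_terms & t_terms) / len(q_terms) if q_terms else 0.0


def recency_score(age_days: float, half_life_days: float = 30.0) -> float:
    """Exponential decay: 1.0 for a brand-new page, 0.5 after one half-life."""
    return 0.5 ** (age_days / half_life_days)


def rank(query: str, snippets: list[Snippet],
         recency_weight: float = 0.3, top_k: int = 2) -> list[Snippet]:
    """Blend relevance and recency into one score and keep the top_k snippets."""
    scored = [
        ((1 - recency_weight) * keyword_score(query, s.text)
         + recency_weight * recency_score(s.age_days), s)
        for s in snippets
    ]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [s for _, s in scored[:top_k]]


def build_prompt(query: str, snippets: list[Snippet]) -> str:
    """Number the sources and instruct the model to cite them and refuse otherwise."""
    sources = "\n".join(
        f"[{i}] {s.url}: {s.text}" for i, s in enumerate(snippets, start=1)
    )
    return (
        "Answer using ONLY the sources below. Cite every sentence like [1]. "
        "If the sources do not support an answer, say so.\n\n"
        f"Sources:\n{sources}\n\nQuestion: {query}"
    )


snippets = [
    Snippet("https://example.com/a", "term life insurance covers a fixed period", 10),
    Snippet("https://example.com/b", "cooking pasta at home", 1),
    Snippet("https://example.com/c", "whole life insurance builds cash value", 400),
]
ranked = rank("life insurance", snippets)
prompt = build_prompt("life insurance", ranked)
print(prompt)
```

The resulting prompt would then be sent to whichever model is in use (idea 21). The fresh but irrelevant snippet is dropped because relevance carries most of the weight, while the recency term only breaks ties between comparably relevant sources.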

Interview by Lex Fridman | Custom GPT and Knowledge Vault built by David Vivancos 2025