Concept Graph using Moonshot Kimi K2:

graph LR

classDef cognition fill:#ffd28a, font-weight:bold, font-size:14px

classDef grammar fill:#8affc7, font-weight:bold, font-size:14px

classDef color fill:#8ad8ff, font-weight:bold, font-size:14px

classDef change fill:#d7a8ff, font-weight:bold, font-size:14px

classDef legalese fill:#ff9ecf, font-weight:bold, font-size:14px

classDef brain fill:#9eff9e, font-weight:bold, font-size:14px

Main[Language Insights]

Main --> Cog1[Pirahã lacks exact numbers 1]

Cog1 -.-> Cognition

Main --> Gram1[Dependency grammar maps links 2]

Gram1 -.-> Grammar

Main --> Gram2[Short dependencies universal 3]

Gram2 -.-> Grammar

Main --> Leg1[Center-embeddings hurt all 4]

Leg1 -.-> Legalese

Main --> Col1[Color terms track industry 5]

Col1 -.-> Color

Main --> Cog2[Gibson vs Chomsky 6]

Cog2 -.-> Cognition

Main --> Br1[Left brain isolates language 7]

Br1 -.-> Brain

Main --> Cog3[LLMs lack grounding 8]

Cog3 -.-> Cognition

Main --> Gram3[Syntax eases memory load 9]

Gram3 -.-> Grammar

Main --> Cog4[Morphology no barrier 10]

Cog4 -.-> Cognition

Main --> Cha1[English shift V-final to V-medial 11]

Cha1 -.-> Change

Main --> Leg2[Passive voice OK 12]

Leg2 -.-> Legalese

Main --> Cha2[Change driven by contact 13]

Cha2 -.-> Change

Main --> Cog5[Amazonian quantifiers 14]

Cog5 -.-> Cognition

Main --> Gram4[Copying beats movement 15]

Gram4 -.-> Grammar

Main --> Cog6[Infants master any grammar 16]

Cog6 -.-> Cognition

Main --> Gram5[Noisy channel robust 17]

Gram5 -.-> Grammar

Main --> Leg3[Legalese is performative 18]

Leg3 -.-> Legalese

Main --> Br2[Lesions spare reasoning 19]

Br2 -.-> Brain

Main --> Br3[Same network for speech 20]

Br3 -.-> Brain

Main --> Br4[Klingon uses brain circuits 21]

Br4 -.-> Brain

Main --> Gram6[Distance predicts cost 22]

Gram6 -.-> Grammar

Main --> Cha3[Contact borrows words 23]

Cha3 -.-> Change

Main --> Gram7[UG weakens 24]

Gram7 -.-> Grammar

Main --> Cog7[L2 difficulty from distance 25]

Cog7 -.-> Cognition

Main --> Gram8[Poetry uses morphology 26]

Gram8 -.-> Grammar

Main --> Cha4[Irregular verbs entrenched 27]

Cha4 -.-> Change

Main --> Cog8[Field learning via labeling 28]

Cog8 -.-> Cognition

Main --> Cha5[Death by economy 29]

Cha5 -.-> Change

Main --> Cog9[Translation needs concepts 30]

Cog9 -.-> Cognition

Cognition --> Cog1

Cognition --> Cog2

Cognition --> Cog3

Cognition --> Cog4

Cognition --> Cog5

Cognition --> Cog6

Cognition --> Cog7

Cognition --> Cog8

Cognition --> Cog9

Grammar --> Gram1

Grammar --> Gram2

Grammar --> Gram3

Grammar --> Gram4

Grammar --> Gram5

Grammar --> Gram6

Grammar --> Gram7

Grammar --> Gram8

Color --> Col1

Change --> Cha1

Change --> Cha2

Change --> Cha3

Change --> Cha4

Change --> Cha5

Legalese --> Leg1

Legalese --> Leg2

Legalese --> Leg3

Brain --> Br1

Brain --> Br2

Brain --> Br3

Brain --> Br4

class Cog1,Cog2,Cog3,Cog4,Cog5,Cog6,Cog7,Cog8,Cog9 cognition

class Gram1,Gram2,Gram3,Gram4,Gram5,Gram6,Gram7,Gram8 grammar

class Col1 color

class Cha1,Cha2,Cha3,Cha4,Cha5 change

class Leg1,Leg2,Leg3 legalese

class Br1,Br2,Br3,Br4 brain

Summary:

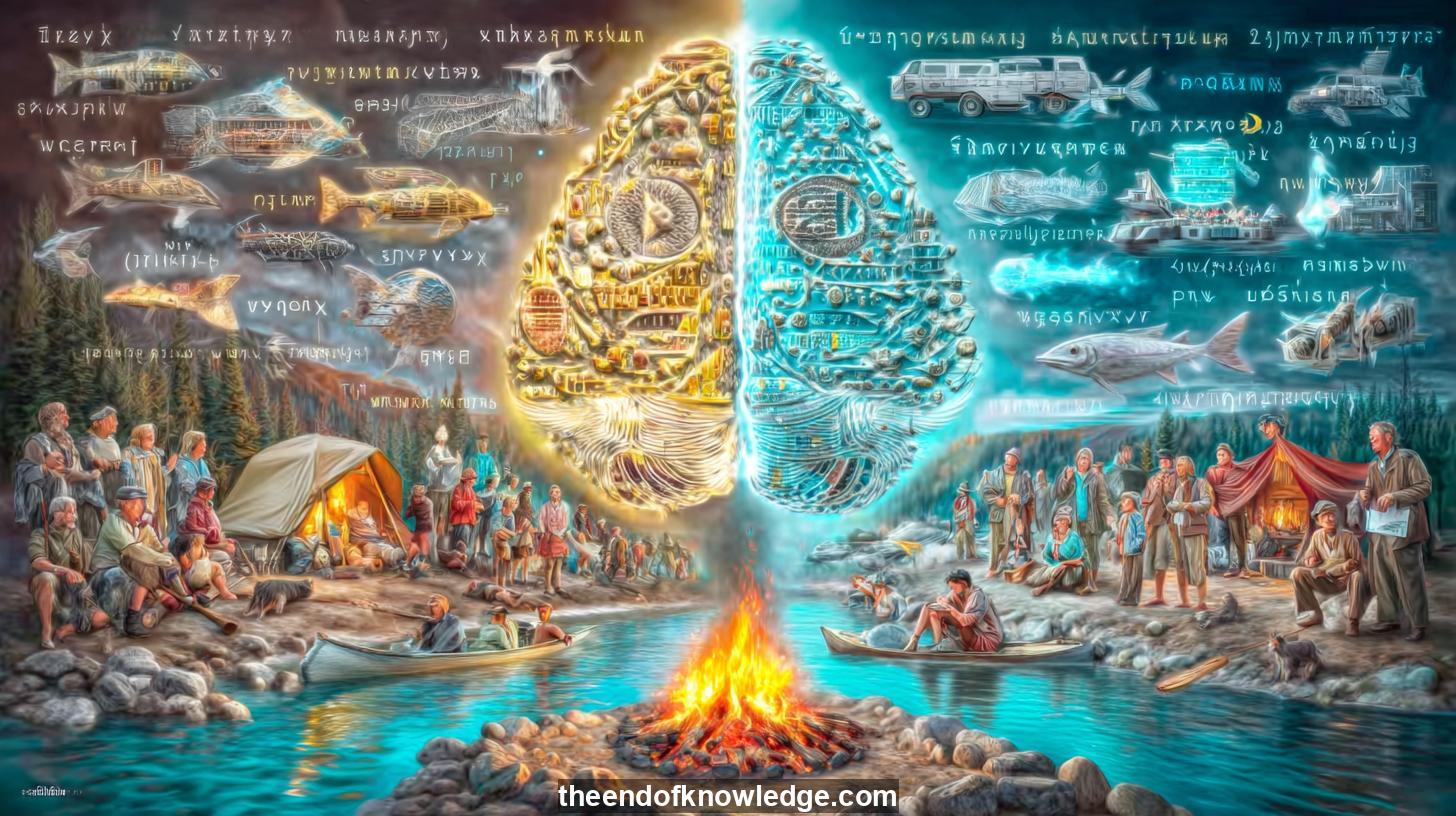

The conversation with Edward “Ted” Gibson, psycholinguistics professor at MIT and head of the MIT Language Lab, explores why human languages take the shapes they do and how culture, cognition, and communication pressures sculpt grammar. Gibson recounts his own path from mathematics and computer science into linguistics, describing how the formal puzzle of syntax first captivated him in childhood English classes and later pulled him into computational linguistics at Cambridge. He emphasizes that his engineering mindset treats language as a system to be reverse-engineered, preferring transparent formalisms like dependency grammar over the richer but more opaque phrase-structure grammars championed by Noam Chomsky.

A central theme is the tension between form and meaning. Gibson argues that while large language models excel at capturing statistical regularities in form, they remain largely disconnected from grounded meaning. He illustrates this with cross-linguistic evidence: Pirahã lacks exact number words, yet speakers perform precise matching tasks without counting, underscoring that linguistic categories are cultural inventions driven by communicative need rather than cognitive necessity. Similarly, color vocabularies expand only when industrial artifacts demand finer distinctions. These examples support the view that language is a negotiated, evolving technology optimized for efficient transmission rather than a fixed mental organ.

The dialogue also contrasts Gibson’s experimental, data-driven methodology with Chomsky’s introspective approach. Using fMRI, corpus studies, and fieldwork, Gibson shows that dependency length minimization—a proxy for working-memory load—is a universal design principle across 60 languages, while center-embedded structures like those in legalese systematically impair comprehension. Legal prose, he suggests, may retain such complexity as a performative marker of authority rather than for deliberate obfuscation. Finally, he speculates on future machine translation and interspecies communication, urging humility: human language is just one solution among many to the noisy-channel problem of conveying thought across minds.
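To make the dependency-length idea concrete, here is a minimal sketch that sums the distance between each word and its head for two orderings of the same sentence. The example sentences, the hand-written head annotations, and the function name `total_dependency_length` are illustrative assumptions, not material from the interview or from Gibson's corpus studies.

```python
# Toy illustration of total dependency length. The head annotations below
# are hand-made assumptions, not parser output or data from any study.

def total_dependency_length(heads):
    """Sum |dependent position - head position| over all non-root words.

    heads[i] is the 1-based position of the head of word i+1; 0 marks the root.
    """
    return sum(abs((i + 1) - h) for i, h in enumerate(heads) if h != 0)

# "John threw out the old trash"
heads_particle_first = [2, 0, 2, 6, 6, 2]
# "John threw the old trash out"
heads_particle_last = [2, 0, 5, 5, 2, 2]

length_first = total_dependency_length(heads_particle_first)
length_last = total_dependency_length(heads_particle_last)
print(length_first, length_last)  # prints: 9 11
```

Under these assumed annotations, keeping the particle next to its verb gives the shorter total, which is the kind of ordering that dependency length minimization predicts speakers will prefer as the object noun phrase grows longer.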

30 Key Ideas:

1.- Pirahã lacks exact number words, yet its speakers perform matching tasks without counting.

2.- Dependency grammar formalism transparently captures word-to-word relations via tree structures.

3.- Cross-linguistic corpus studies reveal universal preference for short dependency lengths.

4.- Center-embedded clauses in legalese impair comprehension across laypeople and lawyers.

5.- Color vocabulary size correlates with industrial need rather than perceptual capacity.

6.- Gibson’s experimental methods contrast with Chomsky’s introspective linguistic theorizing.

7.- fMRI evidence isolates left-lateralized language network from general cognition.

8.- Large language models excel at form but not grounded meaning or reasoning.

9.- Syntax evolves to minimize working-memory load during production and comprehension.

10.- Morphological complexity varies culturally without affecting learnability for infants.

11.- English shifted from verb-final with case marking to verb-medial without cases.

12.- Passive voice in legal documents does not hinder understanding once frequency is controlled.

13.- Language change over centuries reflects contact, economy, and identity pressures.

14.- Isolated Amazonian languages retain unique quantifier systems absent exact numerals.

15.- Lexical copying theory offers simpler learning models than transformational movement rules.

16.- Children acquire any human language with equal ease regardless of morphological density.

17.- Shannon’s noisy-channel framework explains robustness of word order against mishearing.

18.- Legalese complexity emerges performatively, not conspiratorially, according to surveys.

19.- Brain lesions abolishing language spare non-linguistic reasoning and symbolic tasks.

20.- Reading network activation is identical for spoken and written comprehension tasks.

21.- Constructed languages like Klingon activate the same neural circuitry as natural tongues.

22.- Dependency distance predicts processing cost measured via reading times and fMRI signal.

23.- Cultural contact accelerates vocabulary borrowing but leaves core syntax conservative.

24.- Universal grammar claims weaken when movement operations are replaced by lexical redundancy.

25.- Second-language difficulty arises from distance to native grammar, not intrinsic complexity.

26.- Poetry exploits freer word order in morphologically rich languages like Russian.

27.- Irregular verbs in English cluster among high-frequency forms due to historical entrenchment.

28.- Field linguists learn new tongues through social immersion and basic object labeling.

29.- Language death stems from diminished economic utility, not grammatical inadequacy.

30.- Future translation systems must bridge concept gaps beyond surface form alignment.
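The noisy-channel idea in item 17 can be sketched as a small Bayesian calculation: a listener weighs how plausible each intended sentence is against how likely noise would have turned it into what was actually perceived. Everything below is an assumption made for illustration: the candidate sentences, the prior values, the deletion-only noise model, and the `p_delete` rate. None of these numbers come from the interview.

```python
# Minimal noisy-channel sketch: P(intended | perceived) is proportional to
# P(intended) * P(perceived | intended). All numbers are illustrative assumptions.

def is_subsequence(short, long):
    """True if the words of `short` appear in `long` in the same order."""
    it = iter(long)
    return all(word in it for word in short)

def likelihood(perceived, intended, p_delete=0.1):
    """Deletion-only noise: each intended word is dropped with prob. p_delete."""
    p_words, i_words = perceived.split(), intended.split()
    if not is_subsequence(p_words, i_words):
        return 0.0
    deletions = len(i_words) - len(p_words)
    return (p_delete ** deletions) * ((1 - p_delete) ** len(p_words))

def posterior(perceived, priors):
    """Normalize prior * likelihood over the candidate intended sentences."""
    scores = {s: p * likelihood(perceived, s) for s, p in priors.items()}
    total = sum(scores.values())
    return {s: v / total for s, v in scores.items()}

perceived = "the mother gave the candle the daughter"
plausible = "the mother gave the candle to the daughter"
# Assumed priors: the plausible meaning is far more likely to be intended.
priors = {plausible: 0.99, perceived: 0.01}

result = posterior(perceived, priors)
print(round(result[plausible], 3))
```

With these assumed values, the listener's best guess is the plausible sentence with a dropped "to", even though the literal string was perceived. This is the sense in which a noisy-channel account makes comprehension robust to small errors.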

Interview by Lex Fridman | Custom GPT and Knowledge Vault built by David Vivancos 2025

Lex Fridman Podcast #426 - 21/04/2024